Why we're launching AI Career Pro

Training, tools and community for success as an AI Governance Pro. Learning to bridge the gap between theory and practice.

For the past year on this site, I’ve been documenting the day-to-day realities of high-integrity AI governance, sharing some practical guidance built from both my own experience and our work advising clients on practices for safe, secure and lawful AI.

I’ve shared policy templates that I know work in practice, mapped the regulatory landscape as it evolves into a unified control framework, and published research on what it takes to build meaningful human oversight. Mostly, I’ve tried to build bridges between worlds that at times struggle to speak the same language: engineers and lawyers, data scientists and auditors, coders and compliance, business leaders and governance professionals.

That bridge-building has been intentional from my very first article here when I wrote on the four disciplines of AI Governance - science, engineering, law and assurance. In that first article, I described the vital need for collaboration across those disciplines, because I believe the biggest challenge in AI governance is neither the pace of technology nor the complexity of regulations. It’s in translation between disciplines.

And that’s why we’ve also been building and will very soon launch AI Career Pro, an online program of comprehensive training in the knowledge and practical skills of AI Governance across all four disciplines.

You can download the full skills map at grow.aicareer.pro/skillsmap

Silos and cross-purposes

Every article, template and framework I’ve shared since stems from witnessing the same frequent disconnect. Engineering and data science teams race ahead building AI systems, commonly unaware of emerging legal requirements until late in development, or blind to novel security vulnerabilities unique to machine learning. Meanwhile, legal and compliance professionals craft governance frameworks in isolation, interpreting laws, writing controls and setting documentation requirements that fundamentally don’t align with the reality of rapid, iterative model development and continuous deployment pipelines.

I’ve seen some absurd contradictions. Business leaders who urge employees to experiment with AI and embrace innovation, while their legal and compliance teams simultaneously warn those same employees never to use any AI tool that they haven’t explicitly approved (and they haven’t approved any yet!). Product teams told to move fast, build AI into their product features and release, while their compliance teams insist on traditional waterfall approval cycles and annual audits. Maybe that worked for legacy software, but it sure breaks under the iterative pace and scale of AI.

The gap between appearance and reality is wide. Some companies publish Responsible AI principles while their products exhibit clear harms (did someone say Meta?), while others commit to safe AI and make it core to their purpose [1]. Certification reports get issued declaring compliance while known safety issues persist in production systems.

The result is predictable. Technical teams accumulate risk without realising it, building models that are going to need significant rework to meet minimum regulatory requirements. Legal and governance teams generate documentation that sits unused, checkbox exercises that satisfy neither regulators nor engineers. Paperwork piles up while risk and technical debt compound. Employees, caught between contradictory directives, either ignore policies entirely or become paralysed, unable to use even basic AI tools effectively.

This isn’t a failure of competence on any side. It’s a failure of translation. The data scientist doesn’t know what the lawyer needs to meet regulatory notification requirements. The lawyer doesn’t understand why requiring a two-week exercise to write a series of Impact Assessments is so impractical for an engineering team. The employee using ChatGPT to cope with their workload isn’t aware of the security and privacy risks of sharing client documents in a public chatbot. Each is no doubt doing excellent work in their own domain, but that work isn’t connecting where it needs to.

From sharing to scaling

Through this blog, I’ve tried to address these translation failures one article at a time, and I’ve hugely enjoyed and valued the comments and feedback I’ve received. I will certainly keep writing here. But there’s a limit to what blog posts, templates and tips can achieve. You can download my Controls Megamap, but unless you understand why certain controls matter for AI specifically, you’re following a spreadsheet without the depth of understanding to make the right trade-offs. You can download my AI Governance Policy templates or Control Selection Matrix, but you need more than that to understand how to balance controls and select them in proportion to the risks and in ways relevant to your context. You can read about meaningful human oversight, but implementing it requires breadth of understanding across the technical constraints, the regulatory expectations, and the very unusual and often surprising behaviours of users and human reviewers.

I regularly hear from readers that my guidance addresses a gap in the availability of practical, actionable AI governance knowledge. I find that the landscape of AI governance education reveals a peculiar void. You’ll find countless basic introductions to AI ethics, long expositions on the intricacies of the EU AI Act and theoretical discussions about fairness. You’ll find certifications that provide useful frameworks and regulatory knowledge. I’m frequently impressed by the authors of extensive policy analyses, whitepapers and deep research into AI safety techniques. I’m not quite so impressed by the vendor whitepapers that are only thinly veiled sales pitches.

But the practical components that matter most in actual implementation? What needs to be built to implement safe, secure and lawful AI? I find these are so commonly absent.

Think about the business case for AI governance. Every governance pro needs to justify investment to leadership, yet you will struggle to find solid practical guidance on quantifying the return on governance investment, calculating the cost of non-compliance versus implementation, or demonstrating value beyond risk mitigation. I’ve watched talented professionals struggle to get basic resources approved because they argue that Responsible AI is simply a necessary good, without providing the hard numbers and the facts that translate governance needs into business language.

Or take algorithmic assessments. The EU AI Act requires them for high-risk systems. ISO standards reference them. But try to find detailed guidance on actually performing one. What metrics do you collect? How do you test for fairness when you have multiple protected attributes? What tools do you use? How do you document findings in a way that satisfies both regulators and engineers? The theory says “perform an algorithmic assessment.” The practice requires choosing between a number of different fairness metrics that often contradict each other, assembling scripts from open source libraries, then explaining to your legal team why the honest answer to a question like ‘Is our AI system fair?’ is: ‘It depends.’
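To make that ‘It depends’ concrete, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than anything from a real assessment: the two groups, the counts, and the helper names (`Record`, `make_group`, `selection_rate`, `true_positive_rate`) are all made up. It compares two common fairness measures on the same set of predictions: the gap in selection rates (demographic parity) and the gap in true positive rates (equal opportunity).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    group: str      # hypothetical protected attribute value, "A" or "B"
    actual: int     # 1 if the person truly qualifies, else 0
    predicted: int  # 1 if the model selects the person, else 0


def make_group(group, tp, fn, fp, tn):
    """Build records for one group from confusion-matrix counts."""
    return (
        [Record(group, 1, 1)] * tp
        + [Record(group, 1, 0)] * fn
        + [Record(group, 0, 1)] * fp
        + [Record(group, 0, 0)] * tn
    )


def selection_rate(records, group):
    """Share of the group that the model selects."""
    rows = [r for r in records if r.group == group]
    return sum(r.predicted for r in rows) / len(rows)


def true_positive_rate(records, group):
    """Share of the group's truly qualified people that the model selects."""
    positives = [r for r in records if r.group == group and r.actual == 1]
    return sum(r.predicted for r in positives) / len(positives)


# Made-up data: the groups have different base rates (6 of 10 qualify in A,
# 2 of 10 qualify in B), and the model selects 4 people from each group.
records = make_group("A", tp=4, fn=2, fp=0, tn=4) + make_group(
    "B", tp=2, fn=0, fp=2, tn=6
)

parity_gap = abs(selection_rate(records, "A") - selection_rate(records, "B"))
opportunity_gap = abs(
    true_positive_rate(records, "A") - true_positive_rate(records, "B")
)

print(f"Demographic parity gap: {parity_gap:.2f}")       # 0.00
print(f"Equal opportunity gap:  {opportunity_gap:.2f}")  # 0.33
```

On this toy data the model looks perfectly fair by one measure and clearly unfair by the other. The groups have different base rates, so equalising selection rates means a qualified person in group A is less likely to be selected than a qualified person in group B. A real assessment would use your own data, more than one protected attribute, and probably an established fairness library rather than hand-rolled functions.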

An AI system inventory seems straightforward until you try to build one. Inventories are the foundation of all AI governance work. But what counts as an AI system versus traditional software? How do you track models embedded three layers deep in vendor products or sharing datasets across multiple training pipelines? What is the real consequence if your vendor trains their models on your ‘anonymised’ data? How do you maintain inventory accuracy when models update continuously through automated retraining? Building an AI inventory is hard work, and yet there is very little practical guidance available on how to do it - that’s why I wrote a few articles on our methodology.

Real human oversight presents perhaps the starkest gap. Everyone agrees it’s essential. Regulations require it. But the practical questions go unanswered. How do you design oversight interfaces that present complex model decisions with the right information to inform a review? What’s the right balance between human review and automation when you’re processing thousands of decisions per second? How do you prevent automation bias while maintaining efficiency? How do you document human decisions in a way that demonstrates meaningful oversight rather than rubber-stamping?

I think these gaps exist because there’s too much of a disconnect between the people writing laws, standards and policies and those who actually build AI. I’ve come to the view that these aren’t knowledge gaps that another whitepaper, template or theoretical framework can fill. They’re capability gaps that require structured learning grounded in actual implementation, practical application with real constraints, and a community of practice where professionals can learn from each other’s successes and failures. They require instructors who’ve actually built these systems, made these trade-offs, and learned which theories survive contact with reality. And practitioners who don’t settle for mere theory or exam prep.

That’s the purpose at the core of AI Career Pro.

Teaching what we build, building what we teach

I’m fortunate to draw from two parallel streams of experience. The first comes from my years at Microsoft and Amazon, where I built governance mechanisms that had to work at global scale. At Microsoft, I led the deployment of multiple Azure cloud regions, from dirt to datacentre to security certification. At Amazon, I created their first Responsible AI Governance program that achieved ISO 42001 certification. That meant building every component from scratch: quantifying the business case for executives who wanted hard ROI numbers and explicit market needs, designing risk frameworks that engineering teams could actually use and integrate into their workflow, creating audit evidence that improved the lifecycle, satisfying both internal reviewers and external certifiers.

I learned what works by building it, talking with data scientists and engineers who rightly pushed back on every control that added friction, with lawyers who needed defensible documentation for every decision, with executives who demanded both innovation speed and regulatory compliance. The bridges I teach aren’t theoretical. They’re the same ones I built to get a Responsible AI program certified at one of the world’s largest tech companies.

The second stream is happening right now. We’re building a software platform for scalable, adaptive human oversight - using AI to help govern AI. This means every governance principle I teach faces immediate practical tests. Can it be implemented in code? Does it scale? Does it work at sub-second decision latency? Can it simultaneously meet regulatory requirements in Europe and Australia? Does it provide genuine oversight or just compliance theatre?

We work through engineering and governance problems, then I share what we’ve learnt. Over the last few weeks for example, we’ve been focusing on how the metrics from evaluators can inform both human and automated judgement, and which algorithms we can best apply in different circumstances to learn the parameters of new guardrails. That work led me to write an article on cognitive calibration in human oversight, and it’s driving new features in our software. That problem and its solution now form a case study in our course on the complex dynamics of human reviewers who are there to provide oversight.

This creates a feedback loop that I don’t think you’ll find in traditional training. When I teach about building an AI inventory, it’s informed by the inventory system we’re building for our own platform, including all the odd exceptions we discover. When I cover risk assessment, I’m drawing from assessments we’re performing and the controls we’re implementing. And when I describe the complexities of detecting and handling harmful content, it’s because we’re having to build the same protections ourselves. The gap between teaching and doing is short.

I hope this dual role will always keep the training honest. I can’t teach shortcuts I wouldn’t take. I can’t recommend frameworks that don’t survive actual implementation. I can’t pretend governance is simpler than it really is, because tomorrow I have to make these same trade-offs in our own systems. I’m also banging against the same limitations you’ll face - such as finding tools and techniques that can be automated as code, rather than sitting stagnant on the shelf in policy documents.

More details about our software development and the platform we’re launching will come in future posts and our excitement is building as that day approaches, but the key point is this: AI Career Pro isn’t built on theory or outdated case studies. It’s built on governance challenges we’re solving right now, knowledge and skills we’re building today, and implementations we’ll ship and be held accountable to tomorrow.

The professional foundation you already have

When I map the skills that translate into AI governance expertise, I consistently find core competencies that professionals bring from their existing roles. My background is as an engineer on the safety control systems of chemical plants. I learned to build software and infrastructure, then I worked to understand the complexities of laws, regulation and policy. And along the way I built the professional and leadership skills to drive change, lead teams and make ideas real. I consider myself a professional in AI Governance, but my foundations are in engineering, technology leadership, and regulation.

If you’re just starting or on the journey to becoming an AI Governance Pro, then I want to assure you that the distance between your current expertise and that required for proficiency in AI governance is smaller than you might think. What’s missing is the translation layer that helps you apply what you already know to this new domain.

Strategic communication for distilling complex issues. Stakeholder engagement for building alignment across disciplines. Risk and control design from years of managing operational challenges. Technical literacy from working with engineering teams. Regulatory interpretation from navigating compliance requirements. These aren’t skills you need to build from scratch if you’re coming into AI Governance. They’re capabilities you’ve probably developed over years that just need translation into the AI context.

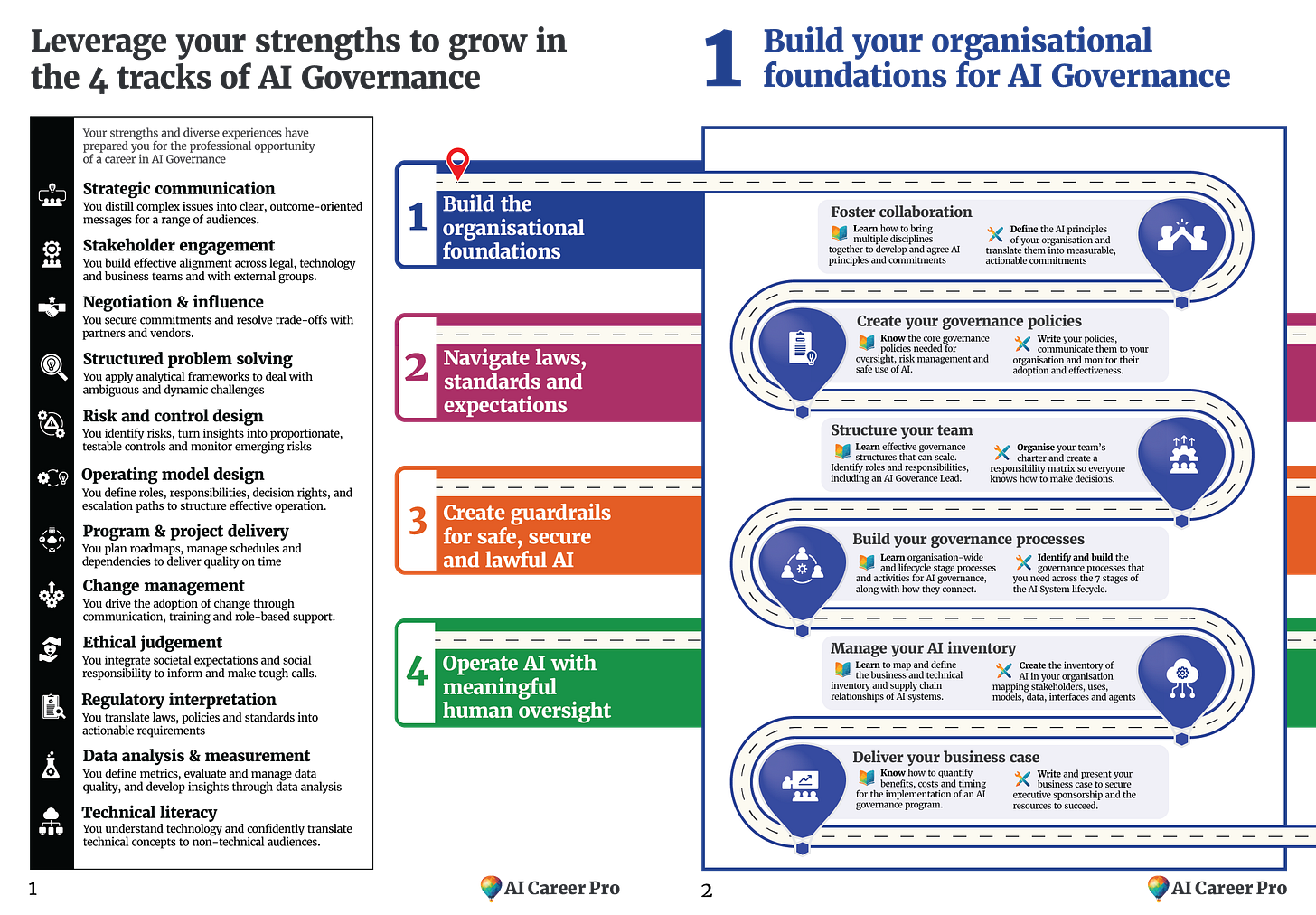

So I’ve designed AI Career Pro to organise learning around four tracks that reflect how governance work actually happens, and how you can build on the strengths you already have:

Building organisational foundations starts with the structures that make everything else possible. This is the practical work of creating governance structures that actually work, writing policies that teams will follow, building processes that integrate with existing workflows, and developing the business case that secures resources.

Navigating laws, standards, and expectations goes beyond listing requirements. We work through regulatory texts and translate them into technical requirements and workable mechanisms that satisfy both auditors and engineers. You’ll learn how to work most effectively with legal specialists, why certain interpretations matter, and how to navigate the gaps between different regulatory frameworks.

Creating guardrails for safe, secure and lawful AI focuses on controls that work in production. Not theoretical control frameworks, but actual mechanisms you can implement. This includes controls that can be automated so developers won’t bypass them (I can’t promise but they might actually thank you for them), and security measures that address AI-specific threats like model extraction and adversarial inputs. Importantly, you’ll learn practical ways to perform algorithmic assessments, and find it’s not as hard as you might think.

Operating AI with meaningful human oversight tackles the hardest challenge in AI governance. We explore what meaningful oversight actually means, how interpretability and explainability can be implemented and how to deal with the tradeoffs of human oversight. You’ll design oversight interfaces, build review processes that scale, learn to deal with incidents and guide safe adoption.

Each track builds on real implementation experience. The exercises come from actual governance challenges. The templates derive from working documents. The case studies document real successes and failures, including our own!

Why this moment matters

The EU AI Act has shifted from proposal to law with concrete compliance deadlines. Organisations worldwide are deploying AI systems that affect millions of users while their governance capabilities lag behind. The International Association of Privacy Professionals reports that demand for AI governance professionals far exceeds supply, with technical governance roles commanding median salaries exceeding $220,000.

But the opportunity goes beyond individual career advancement. The professionals developing AI governance expertise today aren’t just filling roles. They’re defining what those roles become. They’re writing the first playbooks, establishing the standards, creating the practices that will guide AI development for decades.

This is what it means to join a profession at its formation. Not when the textbooks are written and all the certifications are standardised, but when practitioners are still figuring out what works. When your experience and insights can shape not only your organisation’s approach but the entire field’s evolution.

The path forward

If you’ve been following this newsletter, implementing these frameworks in your organisation, and wrestling with these same translation challenges, you understand what’s at stake. You know that AI governance isn’t just about compliance checkboxes or risk mitigation. It’s about enabling your organisation to deploy AI systems that are simultaneously innovative and trustworthy.

Our first courses launch within a program designed for professionals who want to apply their existing professional expertise to AI governance. You don’t need a technical background, though technical professionals are welcome. You don’t need legal training, though legal professionals will find their skills valuable.

All you need are professional foundations in any domain touched by governance, risk, compliance, or technology, the recognition that AI is transforming your field, and the determination to become an AI Governance Pro.

You can download the skills map, and subscribe for more information as we approach launch and begin delivering the program.

Just go to: grow.aicareer.pro/skillsmap

I will continue to share my writing here. It’s part of building and contributing to the public knowledge base this profession needs. But for those ready to go deeper, to understand not just what to implement but why and how to adapt it to your context, to join a community actively defining this profession while building your own expertise, AI Career Pro provides that structured path.

We’re building the bridges between disciplines that AI governance requires. More importantly, I hope we can help you build your own bridges, adapted to your organisation, your industry, and your unique challenges. Because ultimately, that’s how we create AI governance that actually works: not through top-down frameworks, but through practitioners who understand both the principles and the practical trade-offs required to implement them.

As a subscriber to Doing AI Governance, you have my sincere thanks. Your reading, contributions and ongoing support have encouraged our momentum towards building AI Career Pro, and I look forward to welcoming you at our launch.

If you’ve read this far, then I’m sincerely grateful. If you’d like to stay across our launch progress and access a discount code for any course at launch, then please do register at: aicareer.pro

[1] https://www.forbes.com/sites/douglaslaney/2025/08/17/alternate-approaches-to-ai-safeguards-meta-versus-anthropic/