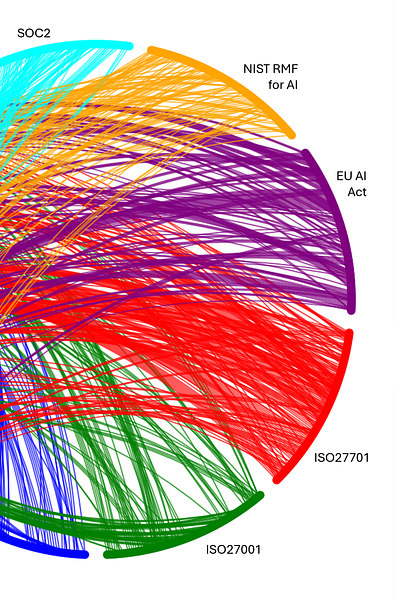

AI Governance Controls Mega-map

A crosswalk of master controls aligning ISO 42001, ISO 27001, ISO 27701, the NIST AI Risk Management Framework, the EU AI Act, and SOC 2 for a real-world AI governance control framework.

Anyone who has ever tried to map multiple control frameworks, standards, and regulations into a single, unified control set knows one universal truth: it’s brutal. The process is tedious, frustrating, and often soul-crushing—especially for the poor junior auditor who just learned what “crosswalk” means (and now has nightmares about it).

But despite the pain, this work is essential. Organisations don’t have the luxury of choosing just one framework. Sure, if you only had to implement ISO 42001, life would be grand. But reality isn’t so kind, and most online courses don’t tell you about that. Instead, in a real AI governance program you have to juggle NIST, SOC 2, multiple ISO standards, GDPR, AI regulations, industry-specific mandates, and whatever new framework or law is looming on the horizon, all on top of your existing legacy control frameworks[1]. Each time a new requirement emerges, you either bolt it onto your existing controls (making them bloated and unmanageable) or scramble to redesign everything.

So instead, good practice is to map these frameworks in the form of a master control set and crosswalk, customise it for your organisation, and then implement it as the backbone of your governance and controls framework. This isn’t just an academic exercise. Once in place, the master control set becomes the touchstone for all future governance, risk and compliance work. Every new regulation, every audit, every security assessment—they all map back to this foundation, giving you consistency, clarity, and sustainability.

And if you don’t do this? Well, things get ugly—fast:

You get crushed under the weight of competing frameworks. Each new one introduces slight variations on the same themes, forcing redundant or conflicting controls that make life miserable for implementation, compliance, and audit teams.

Your controls become inflexible and inadequate. A patchwork approach creates gaps, inefficiencies, and blind spots that weaken control effectiveness. In frustration, engineering teams go their own way building a patchwork of ad-hoc rules and practices.

You create shadow control frameworks. You might call them something like Common Control Frameworks, but they are mappings that exist on paper only as abstract facades, disconnected from the real practices and controls that engineering and science teams apply - only useful for sustaining a pretence of good governance.

You enter “checkbox compliance” mode. Instead of real risk management, you’re just scrambling to pass audits—ticking boxes rather than ensuring meaningful governance, building the minimal regulatory response mechanisms with opaque, patchy disclosures.

You start moving towards “malicious compliance”, regarding every new law, standard or framework, even valuable ones that could accelerate innovation, as a headache and obstacle to be avoided or minimised.

And that’s a dangerous place to be. Yes, this is hard work. It’s tempting to avoid—to just tackle frameworks one at a time and hope it all works out later. But if you put in the effort now, your future self will thank you. No more scrambling, no more duplication, no more last-minute panic when a new framework drops. Just a strong, adaptable foundation that lets you manage compliance on your terms.

Now I really believe this matters, and I’d like to help reduce the burden. I’ve had the privilege over many years of building these kinds of frameworks to handle a vast number of global frameworks, laws, standards and policies. And I’ve had the opportunity to learn how to most effectively perform this kind of analysis and create control frameworks that work in practice.

So, I wanted to do the hard part for you as a starting point for your own governance framework. Over the next few articles, I’ll go through a comprehensive mapping of controls - a mega-map - from six different standards and laws into a common master control set. To be as comprehensive as possible, I chose ISO 27001, ISO 27701 and ISO 42001 as a base for security, privacy and responsible AI, along with the NIST AI Risk Management Framework, the EU AI Act, and finally the SOC 2 Trust Services Criteria. These are the six that I would suggest any company building AI solutions should focus on first.

Now, even with some help from ChatGPT O3-mini-high proposing mappings (often mistakenly), and Claude helping to double-check the semantics of each control one-by-one (while hallucinating frequently), the process still required reading, checking, and re-checking over 1,000 pages of regulatory text. It took a few weeks to grind through, dissecting every requirement, resolving contradictions, and stitching together a coherent, sustainable master control set. Is it perfect? No. Is it the only way to do it? Definitely not. But it’s a starting point—a structured, rationalised foundation that can help you navigate the ever-evolving compliance landscape without losing your sanity.

(Now, I’m sure many of you will say - but there are commercial tools for this! Yes, there are. I’ve used a variety of those tools, even built one to perform much of this work automatically, a tool still used by one of the largest companies in the world. I even tried using the most current models from OpenAI, Anthropic and Google. But I still believe there is no better way than the manual approach I’ll describe.)

Scope and framework selection

To recap, in choosing the sources for this master control set I set out to balance AI-specific governance, general security and privacy foundations, requirements in law and industry-prevalent best practices:

ISO 42001 – The foundation for AI Management, providing a structured approach to AI governance.[2]

ISO 27001 & ISO 27701 – Because you can’t achieve AI governance without the foundations of security and privacy[3][4].

SOC 2 – Widely adopted, especially in the US, making it a de facto standard expectation for organisations building AI solutions in enterprise markets[5].

NIST AI Risk Management Framework[6] – Offers depth and rigour in its risk management process and, despite the policy changes in the US, is likely to remain very relevant.

EU AI Act[7] – The first comprehensive AI law, setting a precedent for other countries. Its risk-based approach to AI governance is already influencing global regulatory trends.

Combined, these cover all the bases of governance, security, privacy, responsible AI, audit and regulatory operations. I also made some assumptions about the type of organisation this master control set is for, to rule out edge cases:

A provider and deployer of AI solutions with a team of more than 10 people, operating in European and US markets

Intending to operate at least one high-risk AI system, as defined by the EU AI Act[8]

Acting as both a Processor and Controller of personal data

Not in the national security/defence sector or law enforcement

Not building general-purpose foundational models (e.g., this is not for OpenAI, Anthropic - they have some additional requirements under the EU AI Act that are not generally applicable).

Expecting to pilot deploy in the real world, not in a regulatory sandbox, which I think is a far more realistic assumption.

One obvious caveat, but I’ll say it anyway - these are my opinions and are not provided as legal advice. You should perform your own verification and engage expert advice as may be necessary.

So, take a deep breath. I’ve at least done the groundwork to get you started. Now, it’s time to make compliance manageable — so that you don’t just survive the next audit, but actually build AI management systems that are sustainable, even enjoyable to work within.

The AI Governance ‘Megamap’

I want to walk you through a little of the process, so you understand how to take it on for your own purposes. Designing a master control framework begins with a crucial first step - one that's part art, part science. We need to identify the major thematic domains that will structure our entire approach to AI governance.

Here’s my process, and I’ll forewarn you that this is distinctly manual and analogue. First, I print out every standard, control framework and law - just over 1000 pages for this exercise. I physically cut every page into individual controls - discrete requirements that must be met. Then in the largest empty room I can find, I get ready to spread them out: thousands of paper scraps, each containing a specific control, clause or requirement.

Domain Grouping

Then begins the work of pattern matching. You take each scrap and start looking for its natural companions - other controls that speak to the same functional areas and requirements, even if they use different language or come from different frameworks. It's like card sorting, where you're not just grouping similar items, but discovering the underlying structure of the different domains of AI governance itself.

At first, the piles form organically. You might start with obvious groupings - everything related to security in one pile, privacy requirements in another. But as you work through more controls, the patterns become more nuanced. You'll find controls that seem to bridge multiple domains, forcing you to reconsider your initial choices. Sometimes what started as a single pile needs to be split as you recognise distinct sub-themes emerging. Other times, what you thought were separate concerns actually reflect the same underlying principle, and piles merge together.

Frequently, something seems to fit in multiple domains - in which case, you have to choose one, but record a reference to the secondary domains that it might also relate to. Although there are tools to help with this and ways to use AI to accelerate the process - and I’ve previously built both - I still find that this physical sorting process reveals insights that might be missed in a purely digital analysis. You can literally see how different frameworks approach similar concerns from different angles. A security requirement in ISO 27001 might resonate with an AI-specific control in ISO 42001, while the EU AI Act adds another layer of consideration to the same basic principle. The physical proximity of these paper scraps helps illuminate these connections, making it easier to identify the natural "families" of controls that will form your master framework.
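
If you later want to capture the outcome of this sorting digitally, a minimal Python sketch might look like the following. It is only an illustration of the "one primary pile, plus references to secondary piles" rule, not the tooling behind this article. The `Requirement` class, its field names and the clause labels are hypothetical placeholders; the two-letter domain codes are the ones used for the domains later in this article.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """One paper scrap: a single requirement cut from a source document."""
    source: str                    # e.g. "ISO 27001"
    clause: str                    # placeholder label, not a real clause number
    primary_domain: str            # the one pile the scrap is placed in
    secondary_domains: tuple = ()  # other piles it also relates to


def group_by_domain(requirements):
    """Return {domain: [requirements]} using only the primary domain."""
    piles = defaultdict(list)
    for req in requirements:
        piles[req.primary_domain].append(req)
    return dict(piles)


def cross_references(requirements, domain):
    """Requirements filed in another pile that also touch the given domain."""
    return [r for r in requirements if domain in r.secondary_domains]


# Hypothetical scraps; sources are real, clause labels are illustrative only.
scraps = [
    Requirement("ISO 27001", "example-A", "SE"),
    Requirement("ISO 42001", "example-B", "SE", ("RS",)),
    Requirement("EU AI Act", "example-C", "RS", ("SE", "RO")),
    Requirement("ISO 27701", "example-D", "PR", ("SE",)),
]

piles = group_by_domain(scraps)
security_related = cross_references(scraps, "SE")
```

Keeping the primary domain as a single value forces the same decision the physical sort forces, while the secondary references stop the cross-domain connections from being lost.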

Organisational Overlay

Now comes a second stage of rationalisation: applying an organisational intuition on how groups of controls work in real practice. While theoretical frameworks might suggest one way of grouping controls, the reality of how organisations operate demands a more practical approach. It's like understanding that a city isn't just organised by geography, but by how people actually live and work within it.

You have to think about who will ultimately own and implement these requirements. A control that requires technical implementation of model monitoring belongs naturally with other engineering-owned controls, even if it technically touches on privacy or security concerns. Similarly, controls around regulatory reporting and compliance documentation have a natural home with legal and compliance teams, regardless of their technical subject matter.

This organisational alignment becomes particularly crucial in AI governance, where the lines between technical and operational controls often blur. Take model explainability requirements - while they have technical components that engineers must implement, they also have documentation and communication aspects that legal teams need to oversee.

You will find groupings that align both thematically and organisationally. Security controls, for instance, naturally cluster together both in terms of their technical requirements and in terms of who typically owns them within an organisation. In other cases, you will find yourself mapping controls to multiple groups so that those connections are not lost, even if only one team holds the primary ownership and accountability.
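
The ownership idea above (one accountable team, with other teams mapped so the connection is not lost) can be sketched in Python as follows. This is a hedged illustration only: the `ControlOwnership` class, the team names and the specific assignments are assumptions made up for the example, and the control identifiers are simply borrowed from the ranges listed later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlOwnership:
    control_id: str                        # master control identifier
    accountable: str                       # exactly one team owns the control
    contributing: frozenset = frozenset()  # teams that also need to be involved


def controls_for_team(ownerships, team):
    """Split a team's controls into those it owns and those it supports."""
    owned = [o.control_id for o in ownerships if o.accountable == team]
    supported = [o.control_id for o in ownerships if team in o.contributing]
    return owned, supported


# Hypothetical overlay: team names and assignments are illustrative.
overlay = [
    ControlOwnership("RS-4", "Engineering", frozenset({"Legal"})),
    ControlOwnership("SE-1", "Security"),
    ControlOwnership("RO-1", "Legal", frozenset({"Engineering", "Security"})),
]

legal_owned, legal_supported = controls_for_team(overlay, "Legal")
```

Because `accountable` is a single string rather than a list, a control cannot end up with shared or unclear primary ownership, which is the failure this overlay step is meant to prevent.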

This organisational intuition comes from years of seeing how governance frameworks succeed or fail in practice, understanding that the best theoretical structure means nothing if it creates organisational friction or unclear ownership. I try to build these practical considerations into our control groupings from the start, so that we end up with a framework that's not just comprehensive, but actually usable in the real world.

Identifying Control Sets

Now as a final step, we work inside each category to identify rational master controls. Let's look at how this works in practice by examining controls and requirements related to Incident Management. When I first gathered all the incident-related controls from across our frameworks, I could see dozens of individual requirements - everything from ISO 42001's specifications for AI incident response to the EU AI Act's mandatory reporting requirements for high-risk systems. But simply lumping all incident-related controls together wouldn't be enough to create a usable framework.

Looking deeper at these controls, you can start to see natural subdivisions - distinct aspects of incident management that form coherent units. First, there's everything related to detecting and responding to incidents. This isn't just about noticing when something goes wrong; it's about having the right monitoring systems, response procedures, and expertise to handle AI-specific incidents. These controls naturally group together because they're all about the immediate, operational aspects of incident handling.

Then we see another clear cluster of controls focused on reporting and notification. These requirements share a common thread - they're all about communicating incidents to the right stakeholders at the right time. Whether it's notifying affected individuals about biased decisions or reporting serious incidents to regulatory authorities, these controls form a distinct category with its own specific requirements and timelines.

Finally, we find a set of controls centred on analysis and continuous improvement - everything related to learning from incidents and preventing their recurrence. These controls are about the longer-term aspects of incident management: conducting root cause analysis, updating procedures based on lessons learned, and verifying that improvements actually work.

So our original pile of incident-related controls naturally separates into three master controls: IM-1 (Incident Detection and Response), IM-2 (Incident Reporting and Notification), and IM-3 (Incident Analysis and Improvement). Each represents a distinct aspect of incident management while working together to create a comprehensive approach. This structure makes sense not just on paper, but in practice - these divisions align with how organisations typically handle incidents, making it easier to implement and maintain the controls.
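
To make the shape of the result concrete, here is a small Python sketch of how those manual decisions could be recorded and turned into a crosswalk for the incident domain. The three master controls come from the text above; the `assignments` rows are paraphrased and illustrative, the two "placeholder source" entries are deliberately not attributed to any real framework, and the function name is my own assumption.

```python
from collections import defaultdict

MASTER_CONTROLS = {
    "IM-1": "Incident Detection and Response",
    "IM-2": "Incident Reporting and Notification",
    "IM-3": "Incident Analysis and Improvement",
}

# (source, paraphrased requirement, master control), each decided by a human
# reviewer. Placeholder sources are illustrative and not real attributions.
assignments = [
    ("ISO 42001", "AI incident response", "IM-1"),
    ("EU AI Act", "mandatory reporting for high-risk systems", "IM-2"),
    ("placeholder source A", "root cause analysis", "IM-3"),
    ("placeholder source B", "update procedures from lessons learned", "IM-3"),
]


def build_crosswalk(rows):
    """Return {control_id: sorted list of sources mapped to that control}."""
    crosswalk = defaultdict(set)
    for source, _summary, control_id in rows:
        if control_id not in MASTER_CONTROLS:
            raise ValueError(f"unknown master control: {control_id}")
        crosswalk[control_id].add(source)
    return {cid: sorted(sources) for cid, sources in crosswalk.items()}


crosswalk = build_crosswalk(assignments)
```

The code does no classification of its own. It only records and summarises judgements a person has already made, which keeps it consistent with the manual approach described here.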

The Results

What emerges from this process are the 12 core thematic domains and 44 master controls, each of which we'll explore in detail over the coming articles. Here’s what that looks like when every requirement is mapped as appropriate to at least one (and usually more) of these master controls. Try not to be overwhelmed by the complexity of the illustration - as we get into the detailed sections, we’ll focus on specific parts of this map and it will make much more sense. You can also download the image in parts below to work through it in higher resolution.

What’s important to understand is that we now have a manageable set of 12 domains, containing a total of 44 master controls that together provide complete coverage of the requirements from over a thousand pages of controls and clauses. This distillation is crucial.
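
A coverage claim like this is worth checking mechanically once the mapping is written down. The sketch below, in Python, shows one way to do that under simple assumptions: requirements and controls are identified by strings, and the mapping is a dictionary from requirement to a list of master controls. The identifiers and function names are hypothetical.

```python
def find_unmapped(requirement_ids, mapping):
    """Return requirement ids that map to no master control."""
    return sorted(r for r in requirement_ids if not mapping.get(r))


def find_unused_controls(control_ids, mapping):
    """Return master controls that no requirement maps to."""
    used = {c for controls in mapping.values() for c in controls}
    return sorted(set(control_ids) - used)


# Hypothetical data: requirement ids are placeholders, and only a small
# subset of the master controls is shown.
requirements = ["req-1", "req-2", "req-3", "req-4"]
controls = ["IM-1", "IM-2", "IM-3"]
mapping = {
    "req-1": ["IM-1"],
    "req-2": ["IM-1", "IM-2"],
    "req-3": [],  # reviewed, but not yet assigned anywhere
}

unmapped = find_unmapped(requirements, mapping)
unused = find_unused_controls(controls, mapping)
```

An empty `unmapped` list is what "complete coverage" means in practice, and a non-empty `unused` list is a prompt to ask whether a master control is really earning its place.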

Now there is a final step here, re-examining the balance and practicality of these controls. Each category and control needs to strike a delicate balance. Too broad, and the domains and controls become meaningless catch-alls that don't provide useful guidance. Too narrow, and we end up with a fragmented framework that's difficult to implement and maintain. The groupings I've identified represent what I think of as "natural joints" in AI governance - the logical break points where one set of concerns ends and another begins, while still maintaining clear connections between related areas.

What makes these groupings by domain particularly powerful is their ability to abstract controls to a useful degree while maintaining comprehensive coverage. Security controls, for instance, encompass everything from traditional information security to AI-specific protections against model tampering. Privacy spans both conventional data protection and the unique challenges of protecting insights derived through machine learning. Each category is broad enough to be meaningful yet specific enough to be actionable.

These domains reflect the full scope of how organisations must approach AI governance, from strategic leadership through technical implementation to external engagement. They align naturally with how teams operate and how responsibilities flow through an organisation, making our framework both comprehensive and practically useful.

The Twelve Domains for AI Governance

Let’s walk through at a very high level each of these domains. In subsequent articles, I’ll dive much deeper into each one.

Governance and Leadership (GL) sets the tone from the top, establishing the policies, resources, and strategic direction that enable safe, responsible AI development and operation. The master controls in this domain (GL-1 through GL-3) set up the organisational foundation for AI governance through three essential pillars: executive commitment that demonstrates active engagement in AI risk decisions, clearly defined roles and responsibilities backed by appropriate resources, and strategic alignment that ensures AI development serves both business goals and ethical principles. These three controls create the leadership infrastructure that makes all other governance possible.

This connects directly to Risk Management (RM), where organisations systematically identify, assess, and mitigate the complex risks that AI systems can pose to both the organisation and society. It is one of our most comprehensive domains, with four distinct controls (RM-1 through RM-4) that guide organisations from framework establishment through risk identification, treatment, and ongoing monitoring. These controls work together from the high-level governance structures that guide risk management down to the practical mechanisms for detecting and responding to emerging threats.

Regulatory Operations (RO) spans four key controls (RO-1 through RO-4) that help organisations navigate the complex landscape of AI regulation. From establishing comprehensive regulatory frameworks to managing transparency requirements, regulatory registration, inspection and post-market monitoring, these controls ensure organisations can demonstrate compliance with legal requirements.

System, Data and Model Lifecycle (LC) comprises five controls (LC-1 through LC-5) for the practical development and deployment of AI systems, ensuring quality and responsibility at every stage from data collection through system retirement. Starting with data quality and governance, moving through system development and resource management, and culminating in comprehensive technical documentation and change management, these controls are all about embedding quality, safety and responsibility at every stage of an AI system's life.

Security forms a significant domain with controls (SE-1 through SE-6) extending from high-level governance through specific technical controls. This progression - from security architecture through identity management and personnel, software security, data protection, network defence, and physical safeguards - creates multiple layers of protection that work together to secure AI systems from diverse threats.

Privacy protection is built on four robust controls (PR-1 through PR-4) that ensure privacy considerations are embedded throughout AI development and operation. From privacy-by-design principles through personal data management, compliance monitoring, and privacy-enhancing technologies, this set of controls works together to protect both individual privacy and organisational reputation. Any organisation that needs to address GDPR requirements (so basically everyone) will have important controls in this domain to implement and monitor.

Safe and Responsible AI encompasses five controls (RS-1 through RS-5) that ensure AI systems benefit society while minimising potential harms. These controls progress from human oversight through safety, robustness, explainability, and fairness - creating a comprehensive framework for responsible AI development and deployment.

Assurance and Audit covers three levels of validation (AA-1 through AA-3) that help organisations verify their controls are working as intended. From internal assessment through independent certification and safety validation, together they create a verification framework that builds a trust (but verify) mindset into AI systems.

Operational Monitoring consists of three interconnected controls (OM-1 through OM-3) for continuous oversight of AI systems in production. From performance monitoring through event logging and continuous improvement, these controls help organisations maintain and enhance their AI systems over time.

Incident Management's three controls (IM-1 through IM-3) bring together a single approach to handling AI-related incidents. From detection and response through reporting and analysis, these controls ensure organisations can effectively manage and learn from incidents when they occur.

Third Party and Supply Chain management has two essential controls (TP-1 and TP-2) that help organisations manage the complex network of vendors and partners involved in AI development and deployment. They aim for clear accountability and comprehensive risk management across the entire AI supply chain.

Transparency and Communication rounds out our framework with two fundamental controls (CO-1 and CO-2) that cover effective explanation of AI systems to stakeholders, including internal users. These controls help organisations maintain trust through clear communication about system capabilities, limitations, and impacts, while making sure meaningful stakeholder engagement can shape system development and operation.

Together, these twelve domains form an interlocking framework that covers all six of the sources and is likely to accommodate the vast majority of new control sources, frameworks, policies or laws.
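
As a compact summary, the domain codes and control counts listed above can be written as a small Python catalogue that generates every master control identifier. This is just a convenience sketch: the `DOMAINS` structure and the `control_ids` function are my own naming assumptions, while the codes, names and counts are taken directly from the descriptions above.

```python
# Domain code -> (domain name, number of master controls), as listed above.
DOMAINS = {
    "GL": ("Governance and Leadership", 3),
    "RM": ("Risk Management", 4),
    "RO": ("Regulatory Operations", 4),
    "LC": ("System, Data and Model Lifecycle", 5),
    "SE": ("Security", 6),
    "PR": ("Privacy", 4),
    "RS": ("Safe and Responsible AI", 5),
    "AA": ("Assurance and Audit", 3),
    "OM": ("Operational Monitoring", 3),
    "IM": ("Incident Management", 3),
    "TP": ("Third Party and Supply Chain", 2),
    "CO": ("Transparency and Communication", 2),
}


def control_ids(domains):
    """Expand each domain into identifiers such as 'GL-1', 'GL-2', ..."""
    return [
        f"{code}-{number}"
        for code, (_name, count) in domains.items()
        for number in range(1, count + 1)
    ]


ALL_CONTROLS = control_ids(DOMAINS)

# Sanity checks against the totals stated in this article.
assert len(DOMAINS) == 12
assert len(ALL_CONTROLS) == 44
```

A list like `ALL_CONTROLS` is a handy fixed reference when a new framework arrives: each new requirement either maps to one of these identifiers or exposes a genuine gap.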

You can download the mapping diagrams below. In the coming articles, I’ll walk through each domain, the master controls within them, and their mapping to each of the six source frameworks. (And after I’ve written all those, and validated everything, I’ll share the complete and detailed map for you to use - just subscribe to be informed when it’s ready).

Thanks for reading, and subscribing - I hope you find this work and the articles to come useful as you build meaningful, high-integrity AI governance in your own organisation.

[1] There are some crosswalks available, but they’re generally limited to mapping a single framework to another single framework. NIST maintains a useful list here: https://airc.nist.gov/airmf-resources/crosswalks/

[2] https://www.iso.org/standard/81230.html

[3] https://www.iso.org/standard/71670.html

[4] https://www.iso.org/standard/27001

[5] https://www.aicpa-cima.com/topic/audit-assurance/audit-and-assurance-greater-than-soc-2

[6] https://www.nist.gov/itl/ai-risk-management-framework

[7] https://artificialintelligenceact.eu/the-act/

This is a tour de force, @James Kavanagh. I'm looking forward to your further work on this. Some questions:

1. Does one or more of these standards cover ethical treatment of data workers who do enrichment (labeling or annotation)? (Perhaps under Governance & Leadership?)

2. Do the 5 controls under Safe and Responsible AI cover proactive attention to identifying and mitigating biases?

3. Where do consent, credit, and compensation (3Cs) to creators and environmental resource efficiency fit in?

4. You mentioned "Not building general-purpose foundational models (e.g., this is not for OpenAI, Anthropic - they have some additional requirements under the EU AI Act that are not generally applicable)." Everything in the map (and more) still does apply to the foundational model companies, right?

5. Do you know of any person or organization who is, or will be, tracking which companies have certified their compliance with the standards you include here? (e.g. Anthropic getting ISO 42001 certification recently)

Thanks!

This is one of the most insightful and practical works I have read for navigating the multitude of frameworks, policies, and standards. I can only imagine the grueling and painstaking process you must have gone through to distill and simplify this into 12 domains.

I look forward to exploring your body of knowledge and perhaps considering its adoption where it makes sense for our organization.

Thank you for your effort.