Designing for Adaptive Oversight of AI

Applying the lessons of safety engineering and control theory to the complex and dynamic problems of meaningful human oversight.

In my last article we looked at the wreckage of Deepwater Horizon’s blown well and the tragedy of the Cruise robotaxi incident, then teased out the common thread: every disaster was a control problem that slipped its feedback leash, with small issues cascading into calamity because safety constraints and feedback mechanisms were inadequate. In this article, I want to zoom in on the mechanics of staying in control and maintaining meaningful oversight despite the complex dynamics of AI systems.

I’ll unpack the building blocks of a multi-level safety architecture with local controllers, feed-forward predictors, and slow-loop feedback governance layers. My aim here is to show how the mindset of safety engineering brings proven, useful techniques that transfer to the real challenges of AI Safety and AI Governance. I’ll go through how safety constraints and fast and slow feedback can be integrated at multiple layers, blending human and machine adaptive controllers for meaningful oversight and control.

Fair warning: this topic is again more for an engineering audience (adaptive control theory is not for the faint-hearted), but I’ve tried to keep it conceptual and accessible even if you’re not technical. As always, your questions and counter-examples are welcome, so please do drop them in the comments.

What is an Adaptive Controller?

AI systems are adaptive by nature. Many machine learning models continue to learn and update (online learning), or they are periodically retrained as new data comes in. Even if the model parameters don’t change, the environment does. The data feeding into the model can drift in content or distribution (what we often call data drift or concept drift), so the behaviour of an AI system can change over time, sometimes in subtle ways. This poses a challenge: the various controllers around the AI, whether a human decision-maker, a monitoring system, or a governance body, have to update their own expectations and mental models to stay in sync with the AI. If they don’t, you get misalignment and potentially unsafe outcomes. Governance would be easy if technology just froze and nothing changed, but of course, that would be utterly boring!

There are a lot of moving parts involved in keeping that alignment, and the topic is hard to consider in the abstract, so try this scenario. Imagine we deploy an AI system in a hospital to help predict sepsis. The AI takes patient data and outputs a risk score and perhaps a suggested diagnosis, and it has within it some controller to ensure the accuracy of those outputs. Another controller is the clinician who reviews the suggestion and decides on treatment. The clinician is effectively a higher-level controller who can accept or override the AI’s advice. There might also be a model monitoring system running in the background. This acts as an automated controller that looks at the AI’s outputs statistically, checking for anomalies or drift: for example, “is the model suddenly flagging far more sepsis cases today than usual?” Above that, you could have a hospital oversight committee or an AI governance board (yet another layer of control) that periodically reviews how the AI tool is performing and whether policies or settings need adjustment. These kinds of controller hierarchies exist in almost every aspect of modern life, whether or not AI is involved, especially where consequential decisions could cause loss or harm.

Each controller has some form of ‘process model’ that represents its understanding of the world, including which values it measures and how it should act. A computer implements its process model in software; a clinician holds theirs in heuristics and knowledge; an oversight committee may embody its process model in written policy, rules, and procedures.

Now consider how this multi-controller setup behaves over time. Suppose a new variant of an infection appears that wasn’t present in the AI’s training data. The AI’s performance changes; perhaps it becomes less accurate for certain patients. The clinician on the ground might not immediately realise this, because their mental model might still be “this AI is generally reliable for sepsis.” If the clinician continues to trust the AI as before, we have a problem: the AI’s process model (its internal parameters) no longer matches reality, and the clinician’s process model (their belief about the AI’s accuracy) is now out of date too. Similarly, the automated monitor should start seeing more anomalies (it needs to detect that drift), and the oversight committee might need to update policy (perhaps deciding to retrain the model or issue guidance to clinicians). Each of these controllers has to adapt. If any one of them fails to adjust its process model, we get a misalignment. Perhaps the clinician over-trusts a flag-happy AI and acts on a false alarm, or conversely, starts distrusting it too much and ignores it even when it’s correct. Either scenario can lead to unsafe outcomes.

This challenge of keeping the controllers in sync with a changing AI is essentially a problem of feedback and adaptation at multiple levels. Each controller needs feedback to update its model. The AI model itself gets feedback when outcomes are measured (e.g., was the diagnosis correct?), and ideally that feeds into model updates. The clinician gets feedback by seeing patient outcomes and perhaps through periodic performance reports on the AI (like “overall, the AI’s precision dropped last month”). The model monitor gets raw data feedback on distributions. The oversight board gets incident reports or metrics from the field. If the feedback loops are timely and informative, each controller can recalibrate. But that takes work: the loops have to be deliberately designed and then sustained.
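To make “updating a process model from feedback” concrete, here is a minimal sketch of one controller’s belief about the AI. It assumes the belief is a single number (the fraction of alerts that turn out correct) tracked as an exponentially weighted moving average, which is my illustrative choice rather than how any real clinician or monitor works. The class name `ProcessModel` and the `alpha` and `tolerance` values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessModel:
    """Hypothetical controller belief about how often the AI's alerts are correct."""
    believed_precision: float   # current belief, updated from feedback
    expected_precision: float   # what the controller was told to expect at deployment
    alpha: float = 0.1          # weight given to each new piece of feedback
    tolerance: float = 0.1      # allowed gap before the controller should escalate

    def update(self, alert_was_correct: bool) -> None:
        # Exponentially weighted moving average: recent outcomes count more.
        observation = 1.0 if alert_was_correct else 0.0
        self.believed_precision = (
            (1 - self.alpha) * self.believed_precision + self.alpha * observation
        )

    def out_of_sync(self) -> bool:
        # True when experience has drifted away from the original expectation.
        return abs(self.believed_precision - self.expected_precision) > self.tolerance


clinician = ProcessModel(believed_precision=0.8, expected_precision=0.8)
for _ in range(5):                      # five confirmed false alarms in a row
    clinician.update(alert_was_correct=False)
print(round(clinician.believed_precision, 3), clinician.out_of_sync())
```

The point of the sketch is the loop, not the statistic: without the `update` calls (the feedback), the belief stays frozen at its deployment value however much the AI changes.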

This isn’t an entirely hypothetical case. A real proof-point is the drift documented in hospitals running the Epic Sepsis Model (ESM). When Michigan Medicine externally validated the vendor’s algorithm, they found that, contrary to its original specifications, the live model caught only 33% of sepsis cases, missing two-thirds, and triggered roughly eight false alarms for every true positive. Its area under the curve (AUC) had sagged to 0.63, far below the 0.8-plus Epic had reported [1]. Worse, once COVID-19 hit and the inpatient case-mix shifted, the proportion of patients triggering ESM alerts more than doubled, from 9% to 21%, even as overall incidence actually fell, swamping clinicians with noise and eroding trust.
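The “eight false alarms for every true positive” figure is another way of stating the model’s precision (positive predictive value, PPV). Using the standard confusion-matrix counts of true positives (TP) and false positives (FP), a short derivation shows the relationship and what that ratio implies:

$$
\text{PPV} = \frac{TP}{TP + FP}
\quad\Longrightarrow\quad
\frac{FP}{TP} = \frac{1}{\text{PPV}} - 1 = \frac{1 - \text{PPV}}{\text{PPV}},
\qquad
\frac{FP}{TP} \approx 8 \;\Longrightarrow\; \text{PPV} \approx \frac{1}{9} \approx 11\%
$$

In other words, roughly one alert in nine pointed at a real case, which is why alert volume matters as much as sensitivity for the people on the receiving end.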

Here the controllers fell out of sync on every level: the model’s parameters no longer matched reality; bedside staff, assuming the tool was still reliable, either over-trusted noise or ignored real signals; and some institutions had no automated drift monitor or governance board tracking the decay.

In my last article I introduced the System-Theoretic Accident Model and Processes (STAMP) [2]. The result here follows the pattern STAMP predicts: unsafe control actions (false negatives and false positives) flowing from a stale process model and slow feedback. The solution is to implement adaptive AI controllers that are capable of learning and adaptation. An adaptive AI controller (whether in software or in a human) needs mechanisms for robust data-drift detection, rapid retraining workflows, and clear escalation paths so that technical monitors, human experts, and oversight committees update their mental models in lock-step with the algorithm.

However, designing these feedback loops is tricky. In fast-changing AI deployments, latency in feedback is a big issue. If it takes too long for the oversight layer to learn that something’s changed (say a monthly meeting that reviews AI incidents), then for a whole month the system could be operating suboptimally or unsafely. Similarly, if the frontline human doesn’t get immediate feedback about when the AI is uncertain or when it’s faced with a novel scenario, they can’t adjust their reliance on it in the moment.

There’s also the issue of edge cases and unknown unknowns. AI can hit edge cases that none of the controllers predicted. A well-documented illustration comes from social-media “algospeak,” where users deliberately swap forbidden words for harmless-sounding code to slip past automated filters. On TikTok, terms such as “unalive” (suicide) and “spicy cough” (Covid-19) came into use. In late 2024, “cute winter boots” and “Dior bags” were adopted so the platform’s classifiers would treat the videos as innocuous fashion chatter rather than sensitive or policy-violating topics [3]. A parallel cat-and-mouse game plays out around disordered-eating content: after TikTok and Instagram blocked obvious pro-anorexia hashtags, communities simply re-encoded them by changing one letter or adding an emoji, so unhealthy material continued circulating under the algorithm’s radar [4]. Each time the filter is outwitted, the moderators’ and engineers’ process models of what the system must catch become obsolete, forcing them to hunt for the new code words, update rules, and retrain models. That’s a textbook edge-case cycle in STAMP terms.
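A sketch of that cycle in miniature, assuming a simple blocklist filter rather than an ML classifier. Normalising text first (folding lookalike characters and stripping emoji) catches trivial re-encodings, but a genuinely new code phrase still slips through until a human feeds it back. The lookalike map, the placeholder term `bannedterm`, and the `CodeWordFilter` class are all hypothetical.

```python
import unicodedata

# Hypothetical lookalike map: characters commonly swapped in to dodge filters.
LOOKALIKES = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"})


def normalise(text: str) -> str:
    """Fold text to a canonical form before matching."""
    # NFKC folds compatibility characters (e.g. fullwidth letters) to plain ones.
    folded = unicodedata.normalize("NFKC", text).lower().translate(LOOKALIKES)
    # Keep only letters and whitespace, stripping emoji and punctuation padding.
    kept = "".join(ch for ch in folded if ch.isalpha() or ch.isspace())
    return " ".join(kept.split())


class CodeWordFilter:
    def __init__(self, blocklist):
        self.blocklist = {normalise(term) for term in blocklist}

    def flags(self, post: str) -> bool:
        # Pad with spaces so terms only match on whole-word boundaries.
        padded = f" {normalise(post)} "
        return any(f" {term} " in padded for term in self.blocklist)

    def learn(self, new_code_word: str) -> None:
        # Reactive step: moderators report a new code word and the
        # filter's "process model" of what to catch is updated.
        self.blocklist.add(normalise(new_code_word))


f = CodeWordFilter(["bannedterm"])           # placeholder term
print(f.flags("This mentions B4NNEDTERM!"))  # True: lookalike digit is folded
print(f.flags("cute winter boots haul"))     # False: new code phrase slips through
f.learn("winter boots")
print(f.flags("cute winter boots haul"))     # True: caught after the update
```

Normalisation only raises the cost of evasion; the `learn` step, driven by people who notice new code words, is what keeps the filter’s model current.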

So, how do we tackle this challenge of adaptive controllers? Is it even possible to design for such rapid shifts and complex, unforeseeable edge cases at the pace of innovation in AI?

The essential task is to embed rapid feedback loops and adaptive mechanisms within our AI safety and AI governance practices, assigning humans clear oversight responsibilities. These mechanisms fall into two broad classes: proactive adaptation and reactive adaptation.

Proactive Adaptation: Staying Ahead of Changes

Proactive adaptation means anticipating potential changes or failures and designing the system to handle them in advance. Several strategies can fall in this bucket:

Scenario-based testing and simulation: One method we used to keep chemical plants safe involved running simulations of upset conditions, asking questions such as “What happens if the coolant flow suddenly drops by half?” and then observing how the control system responded. Scenario-based testing serves the same purpose for AI. We assemble a broad set of situations, including foreseeable edge cases, and probe the system’s behaviour before it goes live. Autonomous-vehicle teams follow this practice at scale: they feed the model countless simulated driving scenes, such as night rain, a pedestrian darting into the road, or an unexpected lane closure, to verify that it chooses a safe action. This approach reveals how the AI behaves when the world misbehaves and helps engineers map its failure modes. A medical-diagnosis model, for example, might struggle with rare groups such as paediatric patients; discovering this in simulation signals the need for extra safeguards or more representative training data.
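A minimal sketch of a scenario harness, assuming the system under test can be called as a plain function from an observation to an action. `Scenario`, `run_scenarios`, and `toy_policy` are hypothetical names, and the toy policy is deliberately flawed so the harness has something to find.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Scenario:
    name: str
    inputs: Dict[str, float]
    is_safe: Callable[[str], bool]   # acceptance check on the chosen action


def run_scenarios(model: Callable[[Dict[str, float]], str],
                  scenarios: List[Scenario]) -> List[str]:
    """Return the names of scenarios where the model's action failed its check."""
    return [s.name for s in scenarios if not s.is_safe(model(s.inputs))]


def toy_policy(obs: Dict[str, float]) -> str:
    # Stand-in system under test: brakes only for a high-confidence
    # pedestrian detection and ignores visibility entirely.
    if obs.get("pedestrian_confidence", 0.0) > 0.9:
        return "brake"
    return "proceed"


scenarios = [
    Scenario("clear day, pedestrian", {"pedestrian_confidence": 0.95, "visibility": 1.0},
             lambda action: action == "brake"),
    Scenario("night rain, pedestrian", {"pedestrian_confidence": 0.6, "visibility": 0.3},
             lambda action: action in {"brake", "slow"}),
    Scenario("empty road", {"pedestrian_confidence": 0.0, "visibility": 1.0},
             lambda action: action in {"proceed", "slow"}),
]

print(run_scenarios(toy_policy, scenarios))  # ['night rain, pedestrian']
```

The failing scenario is exactly the kind of upset condition the plant simulations were for: a degraded sensor reading that the nominal logic never considered.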

Monitoring for uncertainty and drift: A proactive AI deployment is never fire-and-forget; it depends on constant vigilance over the data flowing into the model rather than on after-the-fact checks of its outputs. Drift-detection algorithms compare live input distributions with those from training and raise alerts when they diverge, while feature-level monitors track shifts in ranges, formats, or missing-value rates. These input-side gauges serve the same purpose as pressure and temperature sensors on the feed into a chemical reactor, providing early warnings before problems propagate. When they signal an anomaly or drift, the system should respond by filtering or correcting the incoming data, retraining the model, or switching to a safe fallback mode.
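One common input-side gauge is the Population Stability Index (PSI), which compares binned proportions of a feature in training and live data. The sketch below uses only the standard library; the bin edges, the toy age samples, and the 0.1 and 0.25 thresholds (a frequently quoted rule of thumb, not a standard) are all assumptions to tune per feature.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, floor=1e-4):
    """Population Stability Index of `actual` relative to `expected` over fixed bin edges."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor empty bins so the log term stays finite.
        return [max(c / len(values), floor) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_action(score, warn=0.1, act=0.25):
    # Map the gauge reading to a response; thresholds are illustrative.
    if score >= act:
        return "switch_to_fallback"
    if score >= warn:
        return "alert_and_review"
    return "ok"


training_ages = [20, 30, 40, 50, 60, 70] * 50   # toy training distribution
live_ages = [60, 70, 80, 90] * 75               # toy live feed, skewed older
edges = [35, 55, 75]
score = psi(training_ages, live_ages, edges)
print(round(score, 2), drift_action(score))
```

PSI is only one option; a two-sample Kolmogorov-Smirnov test or per-feature range checks would slot into the same `drift_action` mapping.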

Human-in-the-Loop (HITL) Design: This aspect of proactive adaptation is critical. AI systems must be designed so that humans participate at key decision points, especially when the stakes are high or trust in the AI is incomplete. The aim is to pair the speed and data-processing power of AI with the judgment and contextual insight of people. In a human-in-the-loop approach the system may output recommendations that a person must approve before any action is taken. Another pattern lets the AI manage routine cases while automatically passing unusual ones to a person; for instance, an automated loan tool can flag an atypical application and route it to a loan officer. Human involvement forms a real-time feedback loop that catches errors and helps the organisation learn while maintaining accountability. In medicine, decision-support tools are therefore configured to advise rather than replace clinicians, who retain the final say and can override the AI. When the system meets a novel situation, the clinician’s broader understanding can avert failure. Human-in-the-loop design accepts that AI is fallible and keeps human oversight as an essential safety net. There’s a problem though: humans are fallible, they have bad days and develop cognitive biases. The idea that we just escalate all the hard cases or judgement calls to a human is usually wrong (more on this later).
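The routing pattern from the loan example might look like the sketch below. The rule ordering, the 0.9 confidence floor, and the idea that every adverse decision needs sign-off are assumptions for illustration; `route_case` and `Decision` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    route: str    # "auto" or "human_review"
    reason: str   # recorded so reviewers and auditors can see why


def route_case(confidence: float, is_atypical: bool, adverse_outcome: bool,
               min_confidence: float = 0.9) -> Decision:
    # Unusual cases go to a person regardless of how confident the model is.
    if is_atypical:
        return Decision("human_review", "atypical application")
    # High-stakes outcomes (e.g. a rejection) always get human sign-off.
    if adverse_outcome:
        return Decision("human_review", "adverse decision needs approval")
    # Otherwise defer only when the model itself is unsure.
    if confidence < min_confidence:
        return Decision("human_review", "low model confidence")
    return Decision("auto", "routine case")


print(route_case(0.97, is_atypical=False, adverse_outcome=False))
print(route_case(0.97, is_atypical=True, adverse_outcome=False))
```

Checking atypicality before confidence matters: a model can be confidently wrong on inputs unlike anything it was trained on.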

Feedforward Control – Predictive Adjustments: Drawing on control theory, we can embed feedforward mechanisms into AI operations. Feedforward control measures a disturbance before it reaches the system and adjusts parameters in advance. In an AI context this means monitoring upstream signals (beyond just data inputs) that point to an impending shift. Suppose an e-commerce recommendation engine is about to face Black Friday traffic with unusual shopping patterns and bot activity; the team can adjust thresholds or switch to a special-event model because past data shows this disturbance is coming. Likewise, if a new regulation is expected to change user behaviour, engineers can retrain or recalibrate the model ahead of time. Using domain knowledge to anticipate change lets us compensate before it happens rather than scrambling afterward.
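The distinguishing feature of feedforward is that the adjustment is keyed to a forecast disturbance rather than to observed errors. A minimal sketch, assuming a hypothetical calendar of known events and a bot-detection score threshold (a lower threshold treats more sessions as bots). The dates are Black Friday through Cyber Monday 2025; the threshold values and names are invented.

```python
from datetime import date

# Hypothetical calendar of known disturbances: (start, end, threshold to use).
KNOWN_EVENTS = {
    "black_friday_weekend": (date(2025, 11, 28), date(2025, 12, 1), 0.6),
}
DEFAULT_BOT_THRESHOLD = 0.8


def bot_threshold_for(day: date) -> float:
    """Feedforward: choose the threshold from the expected disturbance,
    before any errors have been observed."""
    for start, end, threshold in KNOWN_EVENTS.values():
        if start <= day <= end:
            return threshold
    return DEFAULT_BOT_THRESHOLD


print(bot_threshold_for(date(2025, 11, 29)))  # 0.6: stricter during the event
print(bot_threshold_for(date(2025, 11, 20)))  # 0.8: normal setting
```

In practice this sits alongside the feedback monitors rather than replacing them: feedforward handles the disturbances you can name in advance, feedback catches the rest.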

These proactive measures demand both effort and foresight. They need to be engineered. Yet they reward that investment by preventing incidents or at least making the system resilient to surprises. They operate much like the safety margin in engineering design, where planners allow for more than the ideal scenario.

Proactive methods themselves also need disciplined management. A human-in-the-loop arrangement, for instance, can create fresh risks: people may grow complacent when the system appears reliable, or they may feel overwhelmed if alerts arrive too often. Healthcare deployments have shown that an excess of false alarms quickly breeds alert fatigue [5]. So engineers have to balance automation with human attention, perhaps by tuning the model to request assistance only when truly necessary rather than at every minor uncertainty. Human overseers must also retain enough skill to understand the situation and act when needed, so an AI system may sometimes need to route work to a person that it could handle itself, purely to keep that person’s skills current.
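Both ideas in that paragraph can be expressed as tunable parameters. The sketch assumes the model emits an uncertainty score per case (higher means less certain), sets the escalation threshold from an alert budget, and adds a small random “practice” sample of routine cases. The 5% budget and 2% practice rate are placeholders, and both function names are hypothetical.

```python
import random


def threshold_for_alert_budget(uncertainties, max_alert_rate):
    """Pick the uncertainty threshold that escalates roughly the top
    `max_alert_rate` fraction of recent cases (ties may push it slightly over)."""
    ranked = sorted(uncertainties, reverse=True)
    k = int(len(ranked) * max_alert_rate)   # alerts the team can absorb
    if k == 0:
        return float("inf")                 # budget allows no alerts
    return ranked[k - 1]


def needs_human(uncertainty, threshold, practice_rate=0.02, rng=random):
    # Escalate genuinely uncertain cases, plus a small random sample of
    # routine ones so reviewers keep their skills current.
    return uncertainty >= threshold or rng.random() < practice_rate


recent = [i / 100 for i in range(100)]      # toy history of uncertainty scores
threshold = threshold_for_alert_budget(recent, max_alert_rate=0.05)
print(threshold)
print(needs_human(0.97, threshold, practice_rate=0.0))
```

The alert budget makes the fatigue trade-off explicit and reviewable, rather than leaving it as an accident of where someone once set a threshold.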

Reactive Adaptation: Safeguards, Overrides, and Learning from Incidents

No matter how good our proactive measures are, reality has a way of surprising us. That’s why we also need reactive safety measures that kick in during or after an unforeseen problem to minimise harm and update the system.

Runtime Safeguards and Fail-safes: In the chemical plant, if conditions deteriorated beyond control, physical relief valves and emergency shutdown systems took over as our last line of defence. AI systems need comparable protections at runtime. A basic example is an override or kill switch that lets a human operator, or an automated monitor, stop the system when it behaves dangerously or malfunctions. The switch might be a literal big red button that cuts power to a robot, or a digital process that monitors the model’s actions and blocks any move that violates policy. A content platform, for instance, can disable a recommendation engine the moment it detects a surge of user reports or disallowed keywords. The aim is to limit harm quickly. These safety circuits stay deliberately simple so they remain dependable, acting as a backup controller that does not run the show but can bring it to a halt.
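The content-platform example could be as simple as a latching counter over a sliding time window. This is a sketch under assumed parameters (report limit, window length); `ReportSurgeBreaker` is a hypothetical name. It is intentionally free of any model or learning, in keeping with the “deliberately simple” principle.

```python
from collections import deque


class ReportSurgeBreaker:
    """Latching kill switch: trips when user reports within a sliding
    time window exceed a limit, and stays tripped until a human resets it."""

    def __init__(self, max_reports: int, window_seconds: float):
        self.max_reports = max_reports
        self.window = window_seconds
        self.timestamps = deque()
        self.tripped = False
        self.reset_by = None

    def record_report(self, t: float) -> None:
        self.timestamps.append(t)
        # Drop reports that have fallen out of the window.
        while self.timestamps and self.timestamps[0] <= t - self.window:
            self.timestamps.popleft()
        if len(self.timestamps) > self.max_reports:
            self.tripped = True   # latch: no automatic recovery

    def allow_recommendations(self) -> bool:
        return not self.tripped

    def reset(self, operator: str) -> None:
        # Re-enabling is an explicit, attributable human action.
        self.tripped = False
        self.timestamps.clear()
        self.reset_by = operator


breaker = ReportSurgeBreaker(max_reports=3, window_seconds=60)
for t in (0, 10, 20, 30):   # four reports within one minute
    breaker.record_report(t)
print(breaker.allow_recommendations())   # False
```

Latching is the important design choice: the breaker does not quietly re-enable itself when reports die down, because that is precisely when a human should look at what happened.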

I think it helps to separate guardrails from barriers. Guardrails are constraints that keep the system operating within safe bounds; they guide behaviour without stopping the workflow. Barriers are hard stops such as kill switches that prevent further action once a threshold is crossed. Clear policies should specify both, ensuring the AI stays on course under normal conditions and giving operators an unambiguous way to shut it down when conditions become unsafe.
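The distinction shows up clearly in code: a guardrail shapes the output and lets the workflow continue, while a barrier refuses to proceed. The sketch assumes a hypothetical AI pricing agent that proposes discounts; the 30% guardrail and 60% barrier are invented values, not recommendations.

```python
class BarrierTripped(RuntimeError):
    """Hard stop: the action is blocked and the workflow halts for a human."""


def control_discount(proposed: float, guardrail_max: float = 0.30,
                     barrier_max: float = 0.60) -> float:
    # Barrier: a proposal this extreme suggests malfunction, so stop entirely.
    if proposed < 0 or proposed > barrier_max:
        raise BarrierTripped(f"proposed discount {proposed:.0%} outside safe envelope")
    # Guardrail: keep behaviour within bounds without interrupting the workflow.
    return min(proposed, guardrail_max)


print(control_discount(0.20))   # within bounds, passed through
print(control_discount(0.45))   # clamped by the guardrail to 0.3
try:
    control_discount(0.90)
except BarrierTripped as err:
    print("halted:", err)
```

Keeping the two limits separate also makes the policy auditable: governance can see which bound was meant to nudge and which was meant to stop.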

Graceful Degradation Modes: I believe another key principle is to design systems that degrade gracefully when they meet stress or abnormal conditions. Picture a self-driving car whose vision sensors lose confidence during heavy rain; it could shift to a safe mode, slow down, and pull over to await human assistance. This response is reactive because it follows detection of a problem. In a different setting, an airline might switch from an automated scheduling tool to manual control if the software begins causing major disruptions. Power grids offer a similar pattern: when an automated subsystem fails, operators isolate the faulty segment and keep the remaining network running in a reduced, but safe, configuration.
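For the self-driving example, graceful degradation can be written as an explicit mapping from a health signal to an operating mode. The sketch assumes a single perception-confidence score and two cut-offs, all hypothetical; a real system would combine many signals and add hysteresis so it does not flap between modes.

```python
from enum import Enum


class Mode(Enum):
    NORMAL = "normal"
    DEGRADED = "degraded"           # reduced speed, wider safety margins
    MINIMAL_RISK = "minimal_risk"   # pull over and wait for human assistance


def select_mode(perception_confidence: float,
                degraded_below: float = 0.8, stop_below: float = 0.5) -> Mode:
    # Check the most severe condition first so the safest mode wins.
    if perception_confidence < stop_below:
        return Mode.MINIMAL_RISK
    if perception_confidence < degraded_below:
        return Mode.DEGRADED
    return Mode.NORMAL


for confidence in (0.95, 0.7, 0.3):   # e.g. clear weather, light rain, heavy rain
    print(confidence, select_mode(confidence).value)
```

Naming the modes explicitly is half the value: operators, testers, and auditors can all reason about a small set of well-defined states.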

Human Override and Intervention: Highly automated systems must still give people the authority to intervene. Pilots can disconnect the autopilot, industrial technicians carry emergency stop pendants, and AI software should expose a similar override. If a trading algorithm begins to act erratically, operators must be able to halt activity or adjust parameters. Building for human intervention requires a dedicated interface and training so that people know how and when to use it. The system must also be transparent enough for an operator to recognise when the override is needed and to understand why.

Designing meaningful oversight is, to me, the most fascinating challenge in the whole field of AI Safety and AI Governance. We cannot assume that a supervisor will instinctively know what to do in an emergency. The AI must present information in a form that is clear and actionable, and operators must practise intervention skills so they do not fade over time. We must also account for human biases, such as excessive trust when the machine usually performs well or unwarranted doubt when it occasionally asks for help. People grow tired and stressed and have off days; sometimes they are angry with, or distracted by, a partner or co-worker. The system should support sound decision making under those conditions too. At times the machine must instruct the person, and at other times the person must guide the machine. We are only beginning to understand how to enable controlled, collaborative decision making between humans and intelligent systems.

Post-Incident Analysis: When an incident or near miss occurs, the worst response is to shrug and move on. Many disasters were preceded by near misses that, if acted upon, could have prevented the calamity. Aviation and other high-risk fields conduct thorough investigations that aim to learn, not to blame, and the AI Safety and AI Governance field needs the same discipline. A method such as CAST (Causal Analysis based on STAMP) can guide the process [6]. After an AI-related event, we examine it through the control-system lens: Did an unsafe control action occur? Which controller, human or algorithm, issued it or failed to prevent it? Was that controller’s model of the situation flawed? Were constraints or feedback channels missing? This kind of analysis often uncovers deeper causes. A content-moderation model might have failed because no procedure existed to update its blocklist with new hateful slang, prompting a new policy to gather weekly input from front-line moderators. A medical tool could misfire if clinicians do not realise where the model lacks expertise, so a hospital might change training and add a prominent warning reading “Not validated for patients under eighteen” whenever the system is opened for a child.
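The “Not validated for patients under eighteen” fix amounts to encoding the model’s validated operating envelope and checking every case against it. A minimal sketch, assuming the envelope is just an age range; `ValidatedEnvelope` and `envelope_warnings` are hypothetical names, and a real envelope would cover more dimensions (care setting, comorbidities, data sources).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ValidatedEnvelope:
    """Hypothetical record of the population the model was validated on."""
    min_age_years: int = 18
    max_age_years: Optional[int] = None


def envelope_warnings(age_years: int, env: ValidatedEnvelope) -> List[str]:
    """Return warnings to show prominently whenever a case falls outside the envelope."""
    warnings = []
    if age_years < env.min_age_years:
        warnings.append(f"Not validated for patients under {env.min_age_years}")
    if env.max_age_years is not None and age_years > env.max_age_years:
        warnings.append(f"Not validated for patients over {env.max_age_years}")
    return warnings


print(envelope_warnings(12, ValidatedEnvelope()))   # child: warning shown
print(envelope_warnings(45, ValidatedEnvelope()))   # adult: no warning
```

Storing the envelope as data rather than burying it in a PDF means a CAST finding can change it, and every clinician’s screen picks up the change.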

Continuous Improvement and Updates: Reactive adaptation feeds directly into continuous improvement. When a failure mode is discovered, the team updates the model or its surrounding process to close the gap. This may involve gathering new training data that covers the scenario, adding a rule in the monitoring layer, or redesigning part of the workflow. Any change must be documented and communicated so that every controller, human or machine, refreshes its understanding. For example, after an incident the oversight board can revise the guidelines, train staff on the update, and deploy a software patch. In this way the system learns from its own experience and makes a repeat of the same incident far less likely. Over time these lessons strengthen the AI, giving it defences against an ever wider range of challenges.
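One way to make “every controller refreshes its understanding” checkable is to track acknowledgement of each change. The sketch below is a hypothetical register (`ChangeLog` and the controller names are invented): any controller that has not acknowledged the latest change is, by definition, running on a possibly stale process model.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ChangeNotice:
    version: str
    summary: str
    acknowledged_by: Set[str] = field(default_factory=set)


class ChangeLog:
    """Hypothetical register tracking which controllers have absorbed each change."""

    def __init__(self, controllers):
        self.controllers = set(controllers)
        self.notices: List[ChangeNotice] = []

    def publish(self, version: str, summary: str) -> ChangeNotice:
        notice = ChangeNotice(version, summary)
        self.notices.append(notice)
        return notice

    def acknowledge(self, controller: str, version: str) -> None:
        for notice in self.notices:
            if notice.version == version:
                notice.acknowledged_by.add(controller)

    def stale_controllers(self) -> Set[str]:
        """Controllers whose process model may predate the latest change."""
        if not self.notices:
            return set()
        return self.controllers - self.notices[-1].acknowledged_by


log = ChangeLog({"icu_clinicians", "drift_monitor", "oversight_board"})
log.publish("2.1", "Retrained on post-incident data; new under-18 warning")
log.acknowledge("drift_monitor", "2.1")
print(sorted(log.stale_controllers()))
```

The stale set is itself useful feedback for the governance layer: it shows where training or communication has not yet landed.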

“Designers have been found to have (1) difficulty in assessing the likelihood of rare events, (2) a bias against considering side effects, (3) a tendency to overlook contingencies, (4) a propensity to control complexity by concentrating only on a few aspects of the system, and (5) a limited capacity to comprehend complex relationships.

AI and computers will not save us here. They are much worse than both human operators and designers at these tasks”

(Leveson - Introduction to System Safety Engineering)

Multi-Level Feedback Loops: Connecting Operations to Governance

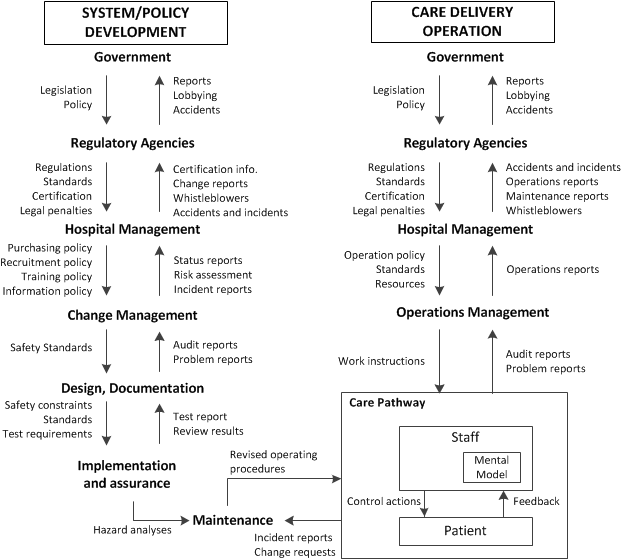

Stepping back, you can see the pattern: effective feedback loops must operate at every level of an AI system. Systems theory, and approaches built on it such as STAMP, describe a hierarchical control structure in which each layer sets goals or constraints for the layer below and receives status information in return. The chain begins with technical components and rises through successive layers to senior management, creating nested control loops that span from raw sensor signals up to organisational and regulatory oversight, as shown in the healthcare diagram above [7].

In an AI system we can similarly trace a clear hierarchy of control and feedback. At the base sits the model and its software, the AI system itself. One layer up, an operator or monitoring service watches the system’s behaviour and results, adjusting parameters or stepping in when needed. Those actions flow downward while metrics and alerts flow upward. Above the operators stand management or governance teams that set policies for acceptable use: rules like “do not target ads at category X” or “send cases with confidence below Y to a human reviewer.” They receive reports, incident logs, and audit findings that reveal whether the rules work or new risks are surfacing. At the highest tier, external regulators and the wider public impose laws and standards and in turn receive disclosures and observe public incidents. Every layer applies constraints to the one below and depends on steady feedback to judge performance.
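A two-layer slice of that hierarchy, as a sketch: governance issues a constraint (“send cases with confidence below Y to a human reviewer”), operations enforces it and reports upward, and governance revises the constraint from the report. `Policy`, `OpsReport`, `operate`, and `review`, along with the 2% error budget and the 0.05 tightening step, are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Policy:            # constraint flowing down from governance
    min_confidence_for_auto: float


@dataclass
class OpsReport:         # feedback flowing up from operations
    cases: int
    escalated: int
    errors_in_auto: int


def operate(confidences: List[float], errors: List[bool], policy: Policy) -> OpsReport:
    """Operations layer: enforce the policy on each case and summarise upward."""
    limit = policy.min_confidence_for_auto
    escalated = sum(c < limit for c in confidences)
    auto_errors = sum(e for c, e in zip(confidences, errors) if c >= limit)
    return OpsReport(len(confidences), escalated, auto_errors)


def review(report: OpsReport, policy: Policy, max_auto_error_rate: float = 0.02) -> Policy:
    """Governance layer: tighten the constraint if automated decisions err too often."""
    auto_cases = report.cases - report.escalated
    if auto_cases and report.errors_in_auto / auto_cases > max_auto_error_rate:
        return Policy(min(policy.min_confidence_for_auto + 0.05, 0.99))
    return policy


policy = Policy(min_confidence_for_auto=0.9)
report = operate([0.95, 0.92, 0.85, 0.99, 0.80], [False, True, False, False, False], policy)
policy = review(report, policy)
print(report, policy)
```

Each function only talks to its neighbours, which mirrors the structure: governance never touches individual cases, and operations never rewrites policy.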

Multi-level feedback turns the entire structure into a learning organism. Engineering teams refine the model based on low-level data; governance teams refine policy based on operational experience. If, in our sepsis-prediction scenario, frontline clinicians repeatedly override an AI recommendation for patients over eighty, that signal should reach the hospital’s oversight committee. The committee might suspend the model for that age group or trigger a retraining project. The flow also works downward: a new privacy requirement from governance teams could compel engineers to purge or anonymise certain data, and the technical teams then report on feasibility and impact.
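Getting the “clinicians keep overriding for patients over eighty” signal to the committee requires someone to aggregate individual overrides by segment. A minimal sketch, assuming override events are logged as `(age_band, overridden)` pairs; the 30% rate threshold, the 20-case minimum, and the function name are hypothetical.

```python
from collections import defaultdict


def bands_to_escalate(events, max_rate=0.3, min_cases=20):
    """Return age bands whose override rate exceeds `max_rate`,
    ignoring bands with too few cases to judge."""
    totals, overrides = defaultdict(int), defaultdict(int)
    for band, overridden in events:
        totals[band] += 1
        overrides[band] += int(overridden)
    return sorted(
        band for band in totals
        if totals[band] >= min_cases and overrides[band] / totals[band] > max_rate
    )


events = (
    [("80+", True)] * 15 + [("80+", False)] * 10        # 60% overridden
    + [("40-79", True)] * 2 + [("40-79", False)] * 48   # 4% overridden
    + [("<40", True)] * 5                               # too few cases to judge
)
print(bands_to_escalate(events))   # ['80+']
```

The minimum-case rule keeps a handful of overrides from triggering a committee review, while still surfacing a sustained pattern.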

Effective loops rely on clear communication. Dashboards, regular review meetings, and incident-sharing channels ensure that people closest to the action can raise concerns and that leadership can enforce timely changes. STAMP highlights that accidents occur when constraints are weak or unenforced, or when feedback is missing. By weaving tight feedback loops through every tier we gain defence-in-depth: if a technical monitor misses an odd output pattern, user complaints may alert the operations team, which can escalate the issue for a governance fix.

These loops preserve safety over time. Deliberate design of mechanisms for continuous, tiered feedback institutionalises adaptation, allowing the system to renew its fit with goals instead of decaying into risk.

Engineering Safety and Governance: A Unified Approach for Safe, Responsible AI

Stepping back, I hope it has come across clearly that engineering-led AI Safety methods and broader AI Governance are not opposing approaches, but complementary pieces of the puzzle. In fact, neither alone is sufficient for safe, responsible AI. Even if we perfect the technical aspects of safety, including thorough monitoring, reliable fail-safes, and comprehensive analyses, we can still miss social and ethical expectations when governance is lacking. A decision-making AI for insurance approvals might meet every engineering benchmark, yet without oversight it could continue denying coverage to disadvantaged applicants. The system would remain technically stable while being socially unacceptable. Conversely, if we focus only on governance (policies, compliance, risk registers) without digging into the technical and operational realities, we get paper safety. That’s when everything looks good on a checklist, but in the real world the system can still fail because the checklist didn’t capture a complex interaction or the people implementing it weren’t given the right tools. Worse still, the paperwork may describe the system as it was designed rather than as it was built, because the real system has diverged so far from its original conception.

My experience in safety-critical domains has taught me that you need both top-down governance and bottom-up engineering. Governance sets the goals and constraints, defining what the system must do, how much risk is acceptable, and who holds accountability. It establishes what “safe” means and draws the boundaries. Engineering then supplies the mechanisms and processes that meet those goals, translating safety into design, code, and day-to-day operations. In STAMP terms governance occupies the higher layers of the control hierarchy, issuing constraints, while engineering carries out the control actions and builds the feedback loops that uphold them. Together they form a single, continuous control system.

Keeping AI systems safe and responsible demands a unified strategy that blends governance with engineering. In practice this begins with interdisciplinary teams. From the first day of a project, AI developers should collaborate with safety engineers, risk managers, ethicists, lawyers, and domain experts. Risk assessment must go further than listing abstract hazards; it should trace how each failure could occur through a clear hazard analysis and then link that scenario to a specific control. Some controls will be technical, such as an anomaly-detection module, while others will be governance measures, such as a well-rehearsed incident-response plan.

One of my recent articles was on building an AI risk control matrix [8]: basically a table mapping major AI risks to preventative, detective, and responsive controls, borrowing from the common approach to cybersecurity controls. In other articles, I described pulling controls from technical standards and from governance frameworks [9]. The synergy is key: engineered controls, as risk treatments, make the governance policies actionable, and the governance policies ensure we’re aiming at the right target.
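Once a risk control matrix is held as data, simple checks become possible, such as finding risks with no control of a given type. The sketch below assumes a plain dictionary layout; the two risks and their controls are illustrative entries drawn from examples earlier in this article, not a recommended matrix.

```python
CONTROL_TYPES = ("preventative", "detective", "responsive")

# Illustrative entries only; a real matrix would come from a hazard analysis.
risk_control_matrix = {
    "data drift degrades accuracy": {
        "preventative": ["representative training data"],
        "detective": ["input drift monitor"],
        "responsive": ["fallback to manual triage"],
    },
    "filter evasion via code words": {
        "preventative": [],
        "detective": ["moderator reports"],
        "responsive": ["weekly blocklist update"],
    },
}


def control_gaps(matrix):
    """List (risk, control_type) pairs that have no control assigned."""
    return [
        (risk, ctype)
        for risk, controls in matrix.items()
        for ctype in CONTROL_TYPES
        if not controls.get(ctype)
    ]


print(control_gaps(risk_control_matrix))
```

A gap is not automatically a defect (some risks genuinely cannot be prevented), but it should be a conscious, documented decision rather than an oversight.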

I would be remiss not to add one final point on culture, a topic worthy of a long article on its own. In safety engineering, there is an ethos of vigilance and learning. You assume things can always go wrong and you strive to continually improve. In some fast-moving AI development cultures, that mindset is not yet ingrained. There’s more of a ship-it-now, fix-it-later mentality. To build truly safe AI, I believe we need to infuse more of that safety culture. Move fast and don’t break things. Do AI Governance and AI Safety not to slow down innovation, but to deliver it safely and responsibly.

So that’s it for now from me on applying an engineering mindset to AI safety. We’ve gone from disaster lessons to feedback loops, controller process models, and continuous adaptation. Now you know how I think about AI Safety and in particular the connection points between AI Safety and AI Governance. I believe that safety engineering can help us move from a naive view of “just make the model accurate and all will be well” to a more realistic view that “we are operating a complex adaptive system that we must actively control and supervise.” By combining this with high-integrity governance and clear constraints, ethical principles and accountability structures, we cover all bases. We create AI systems that are not only well-behaved in the lab, but that remain safe and trustworthy when deployed in the wild, under changing conditions, and under watchful, meaningful human oversight.

As engineers, we know the work is not complete when the confusion matrix looks perfect in the lab or even when the volume of users scales up and up; it is complete only when the whole system of sensors, dashboards, escalation paths, human judgment, and organisational incentives rides out real-world shocks without disruption. If you’ve ever run heavy equipment, you know the thrill of seeing a refinery hold pressure after a compressor trip or a data centre’s voltage staying level when a generator cuts in. We can’t eliminate every failure, but a safety-engineering mindset makes failures rare, contained, and informative.

I believe lasting trust in AI comes not from claims of perfection or box-ticking compliance, but from proving that we can detect, diagnose, and correct problems the moment the system falters. The formula is simple, even if applying it is hard: relentless engineering vigilance coupled with high-integrity governance yields a system that keeps people safe even when the unexpected occurs.

Move fast, don’t break things.

My next article will resume normal programming. Back to the challenges of describing model governance practices and creating a data & model governance lifecycle policy.

If you’ve got this far, thank you so much. I write to think and consider it a wonderful bonus if anyone reads it!

https://jamanetwork.com/journals/jamainternalmedicine/fullarticle/2781307

https://www.nytimes.com/2022/11/19/style/tiktok-avoid-moderators-words.html

https://www.thetimes.co.uk/article/coded-hashtags-promote-eating-disorder-content-on-social-media-vqd6g8lvq

https://hackernoon.com/ai-in-healthcare-worksbut-only-when-humans-stay-in-the-loop

https://psas.scripts.mit.edu/home/get_file4.php?name=CAST_handbook.pdf

https://systemsthinkinglab.wordpress.com/stamp/
