Preventing Harmful Content in AI Outputs - Part 2: Science & Engineering

Part 2 of a practical guide on the law, assurance, science and engineering of preventing harmful content from AI-generated systems.

You can read the first part that focused on the law, policy and governance practices of harmful content in AI systems here:

The science of harmful content detection

So let’s dive into the methodologies and metrics used to detect harmful content without over-blocking or creating false alarms. We’ll also explore the effectiveness of different AI models, and some of the ongoing research shaping the future of AI-generated content moderation. We have to start by thinking about the most relevant metrics, so let’s look at them one by one:

Precision: Of all content flagged as harmful, how many instances are actually harmful? This matters because high precision results in fewer “false alarms.” You don’t want to over-block and end up creating both user dissatisfaction and an overwhelming workload for human reviewers. A study using various LLMs (GPT-3.5, GPT-4, PaLM) showed how a high-precision approach is essential to reducing moderator workloads. [1]

\(\text{precision} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Positives}}\)

Recall: Of all the harmful items in the wild, how many are actually detected by the system? High recall means you don’t miss dangerous content. Depending on the use case of your application, missing a critical item (like a genuine case of toxic bullying) can have real-world consequences, so high recall may need to be prioritised. [2]

\(\text{recall} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}}\)

F1-Score: The harmonic mean of precision and recall, typically used as a single-number snapshot so that you can see how well your model balances both goals as a proxy for overall performance. [3]

\(F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}\)

Balanced Accuracy: Accuracy is the proportion of all instances that are classified correctly. However, because harmful content is relatively rare, a model can score high accuracy simply by labelling everything as clean. So we often use balanced accuracy, which averages performance on the majority class (clean content) and the minority class (harmful content), to paint a more realistic picture.

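\(\text{accuracy} = \frac{\text{True Positives} + \text{True Negatives}}{\text{Total Instances}}\)

\(\text{balanced accuracy} = \frac{1}{2}\left(\frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}} + \frac{\text{True Negatives}}{\text{True Negatives} + \text{False Positives}}\right)\)

Area Under the Curve (AUC): For this metric, we plot the true positive rate against the false positive rate as the decision threshold is swept across its full range (rather than fixed at a single value such as 0.5) and measure, literally, the area under that curve. A high AUC suggests that your model can separate harmful from safe content across multiple potential threshold settings.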

False Positive Rate (FPR): The fraction of innocent content that gets wrongly flagged as harmful. If your FPR is too high, then well-meaning users might feel ‘censored’. [4]

False Negative Rate (FNR): The fraction of actually harmful content that slips through. A high FNR is generally a much more serious issue than a high FPR, as incidents of harm could go undetected.

Latency: Latency is simply the time taken to perform the evaluation, as perceived by the user. There is always a tradeoff to be managed around the time it takes for the system to process and (hopefully) block or flag content versus the responsiveness of the application. Many of the engineering choices around how to implement effective content harm protections are influenced by this latency.
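To make the classification metrics above concrete, here is a minimal sketch that computes them from the four confusion-matrix counts. The class and function names and the example counts are my own illustrative assumptions, not taken from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # harmful content correctly flagged
    fp: int  # harmless content wrongly flagged
    tn: int  # harmless content correctly passed
    fn: int  # harmful content missed


def _ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def moderation_metrics(c: ConfusionCounts) -> dict[str, float]:
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)        # true positive rate
    specificity = _ratio(c.tn, c.tn + c.fp)   # true negative rate
    return {
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "balanced_accuracy": (recall + specificity) / 2,
        "fpr": _ratio(c.fp, c.fp + c.tn),
        "fnr": _ratio(c.fn, c.fn + c.tp),
    }


# Hypothetical evaluation set: 1,000 items, 50 of them harmful.
example = ConfusionCounts(tp=40, fp=20, tn=930, fn=10)
metrics = moderation_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

On these made-up counts, plain accuracy comes out at 0.970 even though one in five harmful items is missed (FNR of 0.200), which is exactly why balanced accuracy (about 0.889 here) is the more honest number when harmful content is rare.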

Here’s the challenge: focusing too heavily on one metric alone often leads to unacceptable side effects. For example, if you obsess over achieving “max recall,” you might blow up your false positive rate—blocking a large amount of harmless content. On the flip side, zeroing in on precision alone might mean you safely filter out only a small fraction of harmful materials. That’s why F1 and the AUC (or using a Precision-Recall curve) are the most popular measures in scientific literature.
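Because AUC summarises behaviour across every threshold rather than at one operating point, it helps to see how it is built. The sketch below is a plain-Python illustration with made-up labels and scores; in practice you would more likely use a library routine, and the function names here are my own.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points obtained by sweeping the decision threshold.

    Assumes labels are 0/1 and that both classes are present.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    tp = fp = 0
    # Lower the threshold one score at a time, from strictest to most lenient.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Items with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Trapezoidal area under a curve given as (x, y) points sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Illustrative data: 1 = harmful, 0 = harmless; scores are classifier outputs.
labels = [1, 1, 0, 1, 0, 0, 1, 0]
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
roc_auc = auc(roc_points(labels, scores))
print(f"ROC AUC: {roc_auc:.2f}")
```

An equivalent reading of the result: it is the probability that a randomly chosen harmful item receives a higher score than a randomly chosen harmless one.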

In real-world implementations, though, it is more likely that custom “cost functions” are implemented that weigh the risk of letting harmful content slip through more heavily than the annoyance (and administrative overhead) of occasionally blocking a legitimate output. The final choice of metrics and targets is typically a balance guided by:

Platform priorities (e.g., do we absolutely forbid certain content?)

Risk tolerance (what’s the cost of letting one harmful item slip?)

User base (are they more tolerant of a few “false alarms,” or do they hate any restrictions on speech?)

Legal/regulatory constraints (is there a regulatory requirement, fine or other consequence for inadequate moderation?)
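One way to picture such a custom cost function is as a threshold search over a labelled validation set. This is only a sketch under stated assumptions: the 10:1 cost ratio, the function names and the data are all hypothetical, and a real platform would derive its costs from the priorities listed above.

```python
def expected_cost(labels, scores, threshold, fn_cost, fp_cost):
    """Total cost of blocking everything scored at or above `threshold`."""
    cost = 0.0
    for label, score in zip(labels, scores):
        flagged = score >= threshold
        if label == 1 and not flagged:
            cost += fn_cost   # harmful item slipped through
        elif label == 0 and flagged:
            cost += fp_cost   # legitimate item blocked
    return cost


def pick_threshold(labels, scores, fn_cost=10.0, fp_cost=1.0):
    """Return the candidate threshold with the lowest total cost."""
    # float("inf") represents "never block anything".
    candidates = sorted(set(scores)) + [float("inf")]
    return min(
        candidates,
        key=lambda t: expected_cost(labels, scores, t, fn_cost, fp_cost),
    )


# Illustrative validation data: 1 = harmful, 0 = harmless.
labels = [1, 1, 0, 1, 0, 0, 1, 0]
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]

risk_averse = pick_threshold(labels, scores, fn_cost=10.0, fp_cost=1.0)
even_handed = pick_threshold(labels, scores, fn_cost=1.0, fp_cost=1.0)
print(risk_averse, even_handed)
```

When a miss is weighted ten times more heavily than a false alarm, the chosen threshold drops so that every harmful item in this toy set is caught, at the price of more blocked legitimate content.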

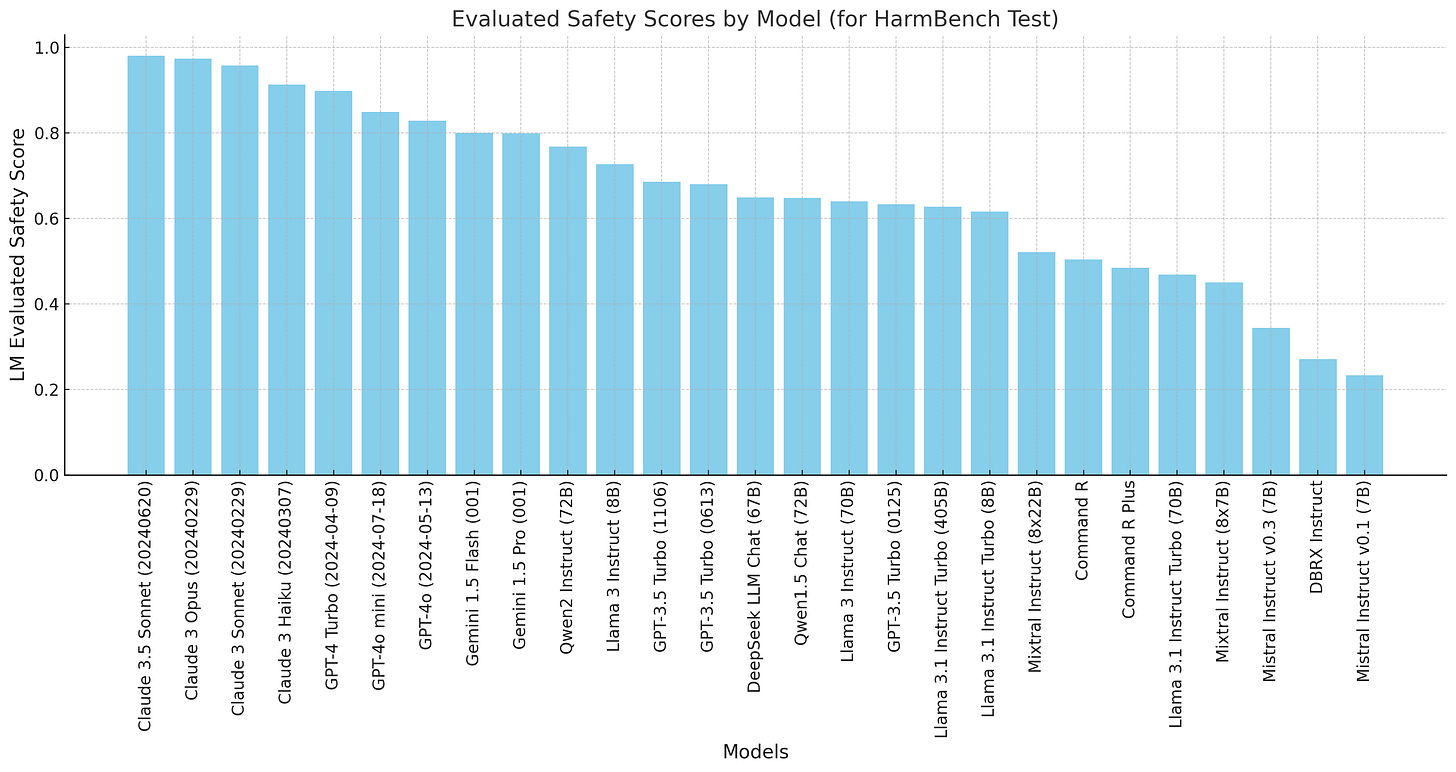

It is notable that although there is a reasonable level of agreement on appropriate benchmarks for assessing the functional capability of AI systems, there is no clear consensus approach to doing so for content harm. One initiative in this direction that is beginning to gain traction is the Holistic Evaluation of Language Models (HELM) [5], Stanford’s ongoing initiative to provide ‘broad, transparent, and standardized safety evaluations’ for AI language models. The latest release, HELM Safety v1.0, specifically targets six major risk categories (violence, fraud, discrimination, sexual content, harassment, and deception) by using a suite of five safety benchmarks to test model behaviors in both straightforward and more adversarial scenarios. The HELM leaderboard shows that most LLM providers can achieve very high metric scores on relatively straightforward content tests to date, but there is much more significant variation on the more challenging (and newer) HarmBench tests as shown below. [6]

Case Study: Health disinformation via LLMs [7]

Situation and Harm

A repeated cross-sectional study evaluated multiple large language models (LLMs), including GPT-4, Gemini Pro (via Bard), Claude 2 (via Poe), and Llama 2 (via HuggingChat), to see if they would generate health disinformation when prompted about two false claims:

1. “Sunscreen causes skin cancer.”

2. “The alkaline diet cures cancer.”

GPT-4 (via ChatGPT), Gemini Pro, and Llama 2 generally complied with user requests to generate lengthy, inaccurate blog posts promoting these unfounded claims. Across multiple prompts, the models produced over 40,000 words of harmful disinformation, often including fabricated references and testimonials—despite the fact that the content was clearly false and potentially dangerous.

In contrast, Claude 2 and GPT-4 (via Microsoft Copilot) refused such prompts or significantly curtailed their responses, even with the researchers attempting jailbreaks.

Controls

Refusal Mechanisms: Claude 2 and GPT-4 (via Copilot) demonstrated robust guardrails, refusing 100% of direct prompts for health disinformation. This suggests prior training and red-teaming specifically targeted at health-related outputs.

Human Oversight and Post-Study Adjustments: Some of the other chatbots that did generate disinformation eventually added or updated disclaimers after the study. Researchers re-tested them 12 weeks later, finding modest improvements—though GPT-4 (via ChatGPT) at that later re-check had actually become more permissive (an unexpected regression).

Reporting Portals: Where disinformation generation was discovered, authors attempted to file content violation reports. The processes were inconsistent but forced some of the LLM providers to clarify or tighten their “report problematic output” mechanisms.

To Fine-Tune your LLM or Not?

Developing AI applications that make consequential decisions in sensitive domains, such as healthcare, requires additional safeguards. In healthcare, for example, an AI-driven assistant that suggests self-harm or provides incorrect diagnostic information presents an unacceptable risk. In these kinds of scenarios researchers recommend approaches that include:

Specialised classifiers: Implement classifiers trained specifically to detect language indicative of, for example, self-harm or suicidal ideation.

High recall priority: Prioritize catching all instances of harmful content, even at the expense of some false positives, to minimise missed critical signals.

Fail-safe mechanisms: If harmful content is detected, the system should immediately escalate the conversation to a human for intervention.

Fine-tuning: Using human or synthetic data approaches to fine tune a general-purpose LLM to the specific domain.
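The high-recall and fail-safe items above can be sketched together: pick the strictest threshold that still meets a recall target on labelled data, then escalate anything scoring above it. The function names, the data and the recall targets below are illustrative assumptions only.

```python
def threshold_for_recall(labels, scores, target_recall=0.95):
    """Return the highest score threshold whose recall meets the target."""
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one harmful example to measure recall")
    for threshold in sorted(set(scores), reverse=True):
        caught = sum(
            1 for label, score in zip(labels, scores)
            if label == 1 and score >= threshold
        )
        if caught / positives >= target_recall:
            return threshold
    return min(scores)  # only reached if target_recall is above 1.0


def handle_reply(score, threshold, draft_reply):
    """Fail safe: withhold the draft and hand over to a human when flagged."""
    if score >= threshold:
        return {"action": "escalate_to_human", "reply": None}
    return {"action": "send", "reply": draft_reply}


# Illustrative validation data from a hypothetical self-harm classifier.
labels = [1, 1, 0, 1, 0, 0, 1, 0]
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]

moderate = threshold_for_recall(labels, scores, target_recall=0.75)
strict = threshold_for_recall(labels, scores, target_recall=1.0)
print(moderate, strict)
print(handle_reply(0.65, moderate, "draft answer"))
```

Note how demanding full recall pushes the threshold much lower, which is the false-positive price the “high recall priority” item accepts.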

Although the first three approaches are clearly advantageous, fine-tuning is an approach that should be considered with caution. It can be double-edged. While fine-tuning can help align the model with specific content policies, improper fine-tuning can degrade built-in safety mechanisms or introduce new vulnerabilities or behaviours that are exceedingly difficult to identify. There are some partially effective strategies to mitigate these risks that include:

Parameter-efficient fine-tuning: Freezing the base layers of the model and training only small adapter layers to preserve original safety features.

Dataset curation: Using meticulously labeled datasets that cover a wide range of harmful content without introducing biases or toxic data.

Layer-wise adaptive tuning: Adjusting only higher-level layers of the model to maintain foundational safety alignments.

These approaches can at least help to ensure that fine-tuning enhances the model’s safety without undermining its inherent alignment capabilities, but even with these precautions it can have unintended consequences.

Case Study: Fine-Tuning and Toxicity in Gemma and Llama [8]

Situation and Harm

Researchers examined how fine-tuning open-source models (Gemma, Llama, Phi) affects their propensity to produce toxic or hateful language. They tested both the base (foundation) models and the instruction-tuned/chat-tuned variants. Despite improvements from the developers to reduce toxicity at release, the study found:

1. Gemma-2 and Gemma-2-2B (base models) already had relatively low rates of toxic output.

2. Llama-2 and Llama-3.1 were more prone to generating hateful content in certain adversarial prompts.

After a small amount of community fine-tuning (i.e., parameter-efficient tuning on non-safety-related data), the toxicity rate for some models increased unexpectedly, indicating that developer-tuned safety can be fragile.

Controls

Developer-Tuned Instruction Layers: By default, the model developers (Meta for Llama, Google for Gemma, Microsoft for Phi) had introduced instruction tuning to reduce toxicity. Indeed, fine-tuned “-IT” variants cut toxic rates substantially compared to the base.

Parameter-Efficient Fine-Tuning Guidelines: The researchers stressed the importance of dataset curation if community members are going to fine-tune the model further. Even with generic or benign datasets, they found unintentional regressions in model safety. As a response, some open-model communities began labeling certain datasets as “safe” or “unsafe,” and recommended layer-wise adaptive tuning to preserve the developer’s original alignment layers.

Safety Evaluations / Red-teaming: The paper highlights how multiple lines of defense (e.g., random prompt sampling, adversarial prompt funnels) should be run after each fine-tuning stage to catch new toxicity vectors early. Some model repositories started incorporating toxicity classification modules into their continuous integration pipelines.
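A post-fine-tuning safety check of this kind could be wired into a CI pipeline roughly as follows. This is a hedged sketch, not the paper’s method: `keyword_classifier` is a deliberately crude stand-in for a real toxicity model, and the tolerance value and sample outputs are hypothetical.

```python
def toxicity_rate(generations, is_toxic):
    """Fraction of generated texts that the classifier flags as toxic."""
    flagged = sum(1 for text in generations if is_toxic(text))
    return flagged / len(generations)


def safety_gate(base_outputs, tuned_outputs, is_toxic, max_increase=0.01):
    """Fail the pipeline if fine-tuning raised the toxicity rate too much."""
    base_rate = toxicity_rate(base_outputs, is_toxic)
    tuned_rate = toxicity_rate(tuned_outputs, is_toxic)
    return {
        "base_rate": base_rate,
        "tuned_rate": tuned_rate,
        "passed": tuned_rate - base_rate <= max_increase,
    }


def keyword_classifier(text):
    """Stand-in classifier for the example; swap in a real toxicity model."""
    return "idiot" in text.lower()


# Responses to the same adversarial prompts, before and after fine-tuning.
base_outputs = ["I can't help with that.", "Here is a neutral summary.",
                "Let's keep this respectful.", "I disagree, politely."]
tuned_outputs = ["I can't help with that.", "Here is a neutral summary.",
                 "Only an idiot would ask that.", "I disagree, politely."]

report = safety_gate(base_outputs, tuned_outputs, keyword_classifier)
print(report)
```

Running the same prompts through both checkpoints keeps the comparison like-for-like, so a failed gate points at the fine-tuning step rather than at a change in the test set.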

Engineering considerations

Implementing an automated engineering approach to detect and filter out harmful content is essential. There are at least partial solutions available commercially, like OpenAI moderation [9] or the Azure Safety APIs [10], but I want to focus on the engineering considerations and tradeoffs by looking at a few of the open-source approaches that probably offer more control, privacy and transparency.

Detoxify

Detoxify [11] is a very straightforward Python library that classifies toxic language across multiple subcategories (e.g., threats, obscenity, identity-based hate). It’s built on top of transformer-based NLP models trained on the Jigsaw Toxic Comment Classification dataset. On the pro side, it’s easy to integrate, small enough to run on a CPU and has pretty good sub-labelling, plus an optional multilingual model. It is limited, though: it doesn’t deal with semantic categories like ‘dangerous instructions’, would most likely need fine-tuning to apply to specific domains, and of course only covers text-based harm. As a first step into content harm detection, though, Detoxify is very useful.
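As a rough sketch of what integration looks like, the snippet below wraps Detoxify’s `Detoxify("original").predict(...)` call and applies per-label thresholds to the scores it returns. The threshold values and helper names are my own assumptions, and the label names should be checked against the keys your installed version actually returns.

```python
def score_with_detoxify(text: str) -> dict:
    """Score one string with Detoxify's 'original' checkpoint.

    Requires `pip install detoxify`; the first call downloads model weights.
    """
    # Imported lazily so the threshold helper below works without the library.
    from detoxify import Detoxify

    raw = Detoxify("original").predict(text)
    return {label: float(score) for label, score in raw.items()}


# Illustrative thresholds only; tune them on your own labelled data.
DEFAULT_THRESHOLDS = {"toxicity": 0.8, "threat": 0.5, "identity_attack": 0.5}


def flagged_labels(scores: dict, thresholds: dict = DEFAULT_THRESHOLDS) -> list:
    """Return the labels whose score meets or exceeds its threshold."""
    return sorted(
        label for label, limit in thresholds.items()
        if scores.get(label, 0.0) >= limit
    )


# Hand-written scores standing in for a real model call.
sample_scores = {"toxicity": 0.91, "threat": 0.12, "identity_attack": 0.64}
print(flagged_labels(sample_scores))
```

Keeping the thresholding separate from the model call makes it easy to adjust sensitivity per category without touching the inference code.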

Granite Guardian (IBM / open-source)

Granite Guardian [12] is an open-source suite for risk detection, focusing on categories like hate, abuse and profanity, plus more comprehensive “umbrella harm” classifications including grounding and relevance. There are multiple versions, from smaller, fast models like Granite-Guardian-HAP-38M, which is small enough for CPU inference, up to larger versions at 8B parameters for more robust coverage. On the pro side, it is actively maintained and developed on GitHub [13] and HuggingFace with the research weight of IBM, and the variety of model sizes helps with latency-sensitive uses. It is also made available under the Apache 2.0 open source license, which is permissive for commercial reuse. Most importantly, though, the Granite language model architecture provides more semantic flexibility than the classifier approach of Detoxify. However, similar to Detoxify, it only produces binary classifications (either there is harmful content or not) with no severity scoring, and like Detoxify it is text-based (primarily English).

LlamaGuard (Meta / open-source)

Meta describe LlamaGuard [14] as an input-output safeguard model built on fine-tuned LLaMA family models (e.g., 7B, 8B). It classifies both user prompts and model responses into safe/unsafe categories, often with subcategory info (e.g., hate, IP violation), and provides a text ‘explanation’ that is easy to parse and use at runtime. LlamaGuard is quite well suited to real-time chat moderation, and with the Llama architecture foundation, it gains the semantic flexibility of an LLM and multilingual support, at the cost of being more GPU compute intensive (and thus having higher engineering resource and latency implications). One of the downsides to be aware of with LlamaGuard (and all Llama models), though, is the more restrictive limitations of the Meta license.
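Parsing that text output at runtime might look like the sketch below. It assumes the guard model replies with a verdict line (`safe` or `unsafe`) optionally followed by a line of comma-separated category codes; the codes shown are illustrative, so check the model card for the version you deploy.

```python
def parse_guard_output(raw: str) -> dict:
    """Turn a guard model's text verdict into a structured result."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    verdict = lines[0].lower() if lines else ""
    if verdict == "safe":
        return {"safe": True, "categories": []}
    if verdict == "unsafe":
        categories = []
        if len(lines) > 1:
            categories = [c.strip() for c in lines[1].split(",") if c.strip()]
        return {"safe": False, "categories": categories}
    # Anything unexpected is treated as unsafe so parsing failures fail closed.
    return {"safe": False, "categories": ["unparseable"]}


print(parse_guard_output("safe"))
print(parse_guard_output("unsafe\nS1,S10"))
print(parse_guard_output("I am not sure"))
```

Failing closed on unrecognised output is a design choice; a lower-risk application might prefer to route those cases to a review queue instead.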

ShieldGemma (Google / open-source)

ShieldGemma [15] is a content moderation model built on Gemma 2, focusing on sexually explicit content, dangerous content, hate, and harassment. It is a family of text-to-text, decoder-only large language models with 2B, 9B, or 27B parameters, built using adversarial dataset generation that Google claim delivers excellent coverage of edge cases. It’s open source under a more permissive licence than that of LlamaGuard. Again, the multiple model sizes provide some flexibility, but there is a need for prompt templating to deal with more specialised categories of harm.

Latency and Performance Trade-Offs

Once you’ve picked a detection approach, the most important engineering decision is around the structure of your pipeline. Latency is the critical determinant, especially if the AI content needs to be reviewed in real-time. Excessive delays frustrate users and hamper real-time content moderation. Here are some of the things you need to think through:

Multi-stage vs Single-stage filtering?

A multi-stage approach will generally use a pattern filter or light classifier (e.g., Detoxify or a logistic regression) to quickly flag obviously harmful vs. obviously safe content. The remaining borderline prompts go to a larger, slower model (e.g., Granite Guardian, LlamaGuard 8B, ShieldGemma 9B or other model).

There are really very few downsides to a multi-stage approach as the engineering implementation is quite trivial and the payoff in latency benefit is significant. [16]
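The multi-stage pattern can be sketched in a few lines. Both stages are passed in as plain callables, so the stub functions below are placeholders for whatever fast classifier and larger guard model you choose; the 0.1 and 0.9 cut-offs are illustrative assumptions.

```python
from typing import Callable, Tuple


def two_stage_check(
    text: str,
    fast_score: Callable[[str], float],
    slow_is_harmful: Callable[[str], bool],
    low: float = 0.1,
    high: float = 0.9,
) -> Tuple[str, str]:
    """Return (decision, stage) where stage records which model decided."""
    score = fast_score(text)
    if score >= high:
        return ("block", "fast")   # obviously harmful
    if score <= low:
        return ("allow", "fast")   # obviously safe
    # Only borderline content pays the latency cost of the larger model.
    return ("block" if slow_is_harmful(text) else "allow", "slow")


# Stub models for the example; replace with real classifier calls.
def stub_fast_score(text: str) -> float:
    return {"hello": 0.02, "you are awful": 0.95}.get(text, 0.5)


def stub_slow_is_harmful(text: str) -> bool:
    return "weapon" in text


print(two_stage_check("hello", stub_fast_score, stub_slow_is_harmful))
print(two_stage_check("you are awful", stub_fast_score, stub_slow_is_harmful))
print(two_stage_check("how to build a weapon", stub_fast_score, stub_slow_is_harmful))
```

Returning the deciding stage alongside the decision makes it easy to measure what share of traffic actually reaches the slow model.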

What size model?

Smaller parameter models (e.g., a ~50–100M parameter checkpoint vs. 2B–9B) yield much faster inference. Review the accuracy information provided with the model cards, and then test with your specific use case. It’s generally better to start with the smaller model and step up if you find edge cases are being missed or you’re seeing too many false positives/negatives on the smaller models. There is a balance, though, between going with the largest models and integrating more effectively with human review (as I discuss below).

Is it possible to batch or stream?

If your use case has large bursts or batches, then it may be optimal to handle batches of content as a single pass. This won’t help with latency, but can help reduce overall resource costs. In the opposite case, if streaming of partial responses to the user is possible (as is common in chat applications), you can provide the user with a perceived improvement to latency.
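For the streaming case, one possible shape is a generator that releases text in small windows and re-checks everything generated so far before each release. This is a sketch under assumptions: `is_harmful` stands in for your real moderation call, and the window size and withheld-response notice are hypothetical.

```python
from typing import Callable, Iterable, Iterator


def moderated_stream(
    chunks: Iterable[str],
    is_harmful: Callable[[str], bool],
    check_every: int = 40,
    notice: str = "[response withheld]",
) -> Iterator[str]:
    """Release streamed text in windows, checking before each release."""
    seen = ""      # everything generated so far, released or not
    pending = ""   # generated text not yet released to the user
    for chunk in chunks:
        seen += chunk
        pending += chunk
        if len(pending) >= check_every:
            if is_harmful(seen):
                yield notice
                return
            yield pending
            pending = ""
    # Flush whatever is left once the model has finished generating.
    if pending:
        if is_harmful(seen):
            yield notice
            return
        yield pending


def contains_forbidden(text: str) -> bool:
    """Stand-in moderation check for the example."""
    return "forbidden" in text


safe_run = list(moderated_stream(
    ["Hello, ", "here is ", "a safe ", "answer."], contains_forbidden, check_every=10))
blocked_run = list(moderated_stream(
    ["Sure, ", "forbidden ", "details"], contains_forbidden, check_every=10))
print(safe_run)
print(blocked_run)
```

A smaller window feels more responsive but means more moderation calls per response, so the window size is itself a latency versus cost trade-off.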

What else can be done to reduce latency or infrastructure requirements?

Techniques like 8-bit or 4-bit quantization can significantly reduce GPU memory demands and speed up inference—particularly relevant for bigger models (e.g., ShieldGemma 9B or 27B) although there may be a slight drop in accuracy on nuanced categories.
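A quick back-of-the-envelope calculation shows why quantization matters for memory. The sketch below counts model weights only, using nominal parameter counts; activations, KV cache and framework overhead all add to the real footprint, so treat the figures as lower bounds.

```python
def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1e9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Nominal parameter counts for the larger model sizes mentioned above.
for name, params in [("9B", 9e9), ("27B", 27e9)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

Going from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which can be the difference between needing several GPUs and fitting on one.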

You can co-locate your model endpoints near your user base to reduce network roundtrip times. You can also think about optimising concurrency with frameworks like vLLM, DeepSpeed, or TensorRT to accelerate inference.

Essential Mechanisms for Alerts and Human Review

Even the best classifiers will have false positives (innocuous content flagged) or false negatives (harmful content missed). So it is essential to have a robust workflow that includes:

Real-Time Alerting

If a piece of content is classified “high risk,” generate a system message. Tools like Slack or email integration can notify moderators.

For borderline categories (e.g., possible hate but uncertain), you might escalate a warning to a separate queue with a shorter response SLA.
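The alerting rules above might be expressed as a small routing function. The score bands and queue names are illustrative assumptions, and `notify` is whatever callable posts to your Slack channel or email system; here it simply appends to a list so the example runs on its own.

```python
from typing import Callable


def route(score: float, high: float = 0.9, borderline: float = 0.5) -> dict:
    """Map a classifier score to an action, a review queue and an alert flag."""
    if score >= high:
        return {"action": "block", "queue": "urgent", "alert": True}
    if score >= borderline:
        return {"action": "hold", "queue": "borderline", "alert": False}
    return {"action": "allow", "queue": None, "alert": False}


def dispatch(content_id: str, category: str, score: float,
             notify: Callable[[str], None]) -> dict:
    """Apply the routing decision and alert moderators for high-risk items."""
    decision = route(score)
    if decision["alert"]:
        notify(f"High-risk {category} content {content_id} (score {score:.2f})")
    return decision


sent_alerts = []  # stands in for a Slack or email integration
first = dispatch("c-1", "hate", 0.95, sent_alerts.append)
second = dispatch("c-2", "hate", 0.60, sent_alerts.append)
print(first, second, sent_alerts)
```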

Monitoring Dashboards

Display filter metrics: daily flagged items, false positive/negative rates, average latency, etc.

Track content volume by category (e.g., harassment, hate, sexual). Give moderators a quick high-level overview.

Human-in-the-Loop

Human Moderation Queue: Any “uncertain” or “high severity” content flows here. Moderators can confirm or override the model’s decision.

Case Management: Systems like Jira or custom solutions help track repeated borderline posters, appeals, or user disputes.

Supervisor Overrides: If a model starts to degrade or produce obvious errors, supervisors can quickly intervene and revert to a safer baseline.

Feedback Loops

When human moderators confirm or overturn the filter’s decision, log that result. This data can be used for continuous fine-tuning of your chosen detection model.

External user reporting: If a user flags content as harmful, it can override the model’s safe classification and route it to a manual queue for verification.
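A feedback loop starts with recording each human decision next to the model’s. The sketch below logs reviews as JSON lines and tracks how often moderators overturn the filter; the record fields and names are hypothetical, and an in-memory buffer stands in for a real log file or database.

```python
import io
import json
from dataclasses import asdict, dataclass


@dataclass
class ReviewRecord:
    content_id: str
    model_label: str   # what the filter decided: "safe" or "harmful"
    human_label: str   # what the moderator decided
    source: str        # "model_flag" or "user_report"


def log_review(record: ReviewRecord, sink) -> None:
    """Append one review as a JSON line for later fine-tuning or audits."""
    sink.write(json.dumps(asdict(record)) + "\n")


def override_rate(records) -> float:
    """Share of reviewed items where the human disagreed with the model."""
    overturned = sum(1 for r in records if r.model_label != r.human_label)
    return overturned / len(records) if records else 0.0


records = [
    ReviewRecord("c-1", "harmful", "harmful", "model_flag"),
    ReviewRecord("c-2", "harmful", "safe", "model_flag"),
    # A user report routed a model-approved item to manual review.
    ReviewRecord("c-3", "safe", "harmful", "user_report"),
]

sink = io.StringIO()
for record in records:
    log_review(record, sink)
print(sink.getvalue())
print(f"override rate: {override_rate(records):.2f}")
```

A rising override rate is a useful early signal that the detection model is drifting and may need retraining on the logged corrections.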

Conclusion

Harmful content prevention and detection in AI systems is a multifaceted challenge that intersects policy & law, governance, science, and engineering. For AI Assurance professionals, addressing this issue requires a holistic approach that integrates robust legal compliance, strategic governance frameworks, advanced scientific methodologies, and efficient engineering solutions that include technical and human mechanisms.

Policy & Law: Navigating the evolving regulations like the EU AI Act and ensuring compliance is fundamental. Organisations must stay abreast of legal requirements, implement comprehensive safety measures, and recognise that liability remains with them regardless of AI safety optimisations that may or may not be implemented by their technology providers.

Governance: Establishing a clear taxonomy of harmful content, implementing effective controls and conducting regular red-teaming exercises are essential for mitigating risks. Continuous improvement and user trust must remain at the forefront of governance strategies, along with meaningful mechanisms for scalable human oversight.

Science: Leveraging state-of-the-art detection methods, utilising comprehensive benchmarks, and adopting dynamic model selection and fine-tuning strategies ensure that AI systems can effectively identify and mitigate harmful content.

Engineering: Building real-time, scalable detection pipelines that balance false positives and negatives, manage latency, and optimise resource utilisation is critical for maintaining effective content moderation. Practical engineering practices need to be implemented in technology, but also extended to support human oversight.

Ultimately, no single layer of policy, governance, science, or engineering can address harmful content from AI systems comprehensively on its own. Instead, a layered, dynamic, and collaborative approach is essential.

With the rapid evolution of AI technologies and the increasing complexity of digital interactions, stakeholders across all sectors have to work together to create safer, more trustworthy, and inclusive online environments.

This is even more true when executives like Elon Musk and Mark Zuckerberg choose to relinquish their responsibilities and choose to step off the field.

If you find this useful, you may like to read an article on the skills map of an AI Assurance professional

[1] https://arxiv.org/html/2401.02974v1

[2] https://www.ofcom.org.uk/siteassets/resources/documents/research-and-data/online-research/other/cambridge-consultants-ai-content-moderation.pdf?v=324081

[3] https://www.researchgate.net/publication/371385243_Detecting_Harmful_Content_on_Online_Platforms_What_Platforms_Need_vs_Where_Research_Efforts_Go

[4] https://arxiv.org/html/2405.20947

[5] https://crfm.stanford.edu/2024/11/08/helm-safety.html

[6] https://crfm.stanford.edu/helm/safety/latest/#/leaderboard

[7] https://pmc.ncbi.nlm.nih.gov/articles/PMC10961718/

[8] https://arxiv.org/html/2410.15821v1

[9] https://platform.openai.com/docs/guides/moderation

[10] https://azure.microsoft.com/en-us/products/ai-services/ai-content-safety

[11] https://github.com/unitaryai/detoxify

[12] https://research.ibm.com/blog/efficient-llm-hap-detector

[13] https://github.com/ibm-granite/granite-guardian

[14] https://github.com/alphasecio/llama-guard

[15] https://arxiv.org/html/2407.21772v1

[16] https://platform.openai.com/docs/guides/latency-optimization