Read the new ISO 42005 AI Impact Assessment Standard. Skip the paperwork.

Resist the urge to build new bureaucracy around AI impact assessments. Instead, integrate lightweight checks into the workflows you already have.

As the ink dries on ISO 42005 (the new international standard for AI system impact assessments), you might feel an urge to spin up fresh compliance machinery. The standard has been a long time coming, and was published in final form yesterday[1]. A common reaction to new standards is to treat them as mandates for new forms, new reports, new committees, and new bureaucracy. ISO 42005 is no different. But if you actually want to build safe, responsible AI, then resist that temptation. Don’t do it.

I believe the value of standards like ISO 42005 lies not in feeding a paperwork factory, but in improving how AI systems are conceived, built, and deployed in practice. There are good insights within ISO 42005, and you should take the standard as guidance, but don't misread its intent. Unfortunately, ISO 42005 is very easy to misread in a way that sends you in the wrong direction: down a path of bureaucratic paperwork that makes no meaningful difference to the safety or responsibility of your AI systems, and might even do more harm than good. The goal should be integrating impact assessment into existing risk and governance workflows, not layering burdensome new ones on top. I'm sure that was the intent, and the standard even says as much, but it's still easy to do the opposite.

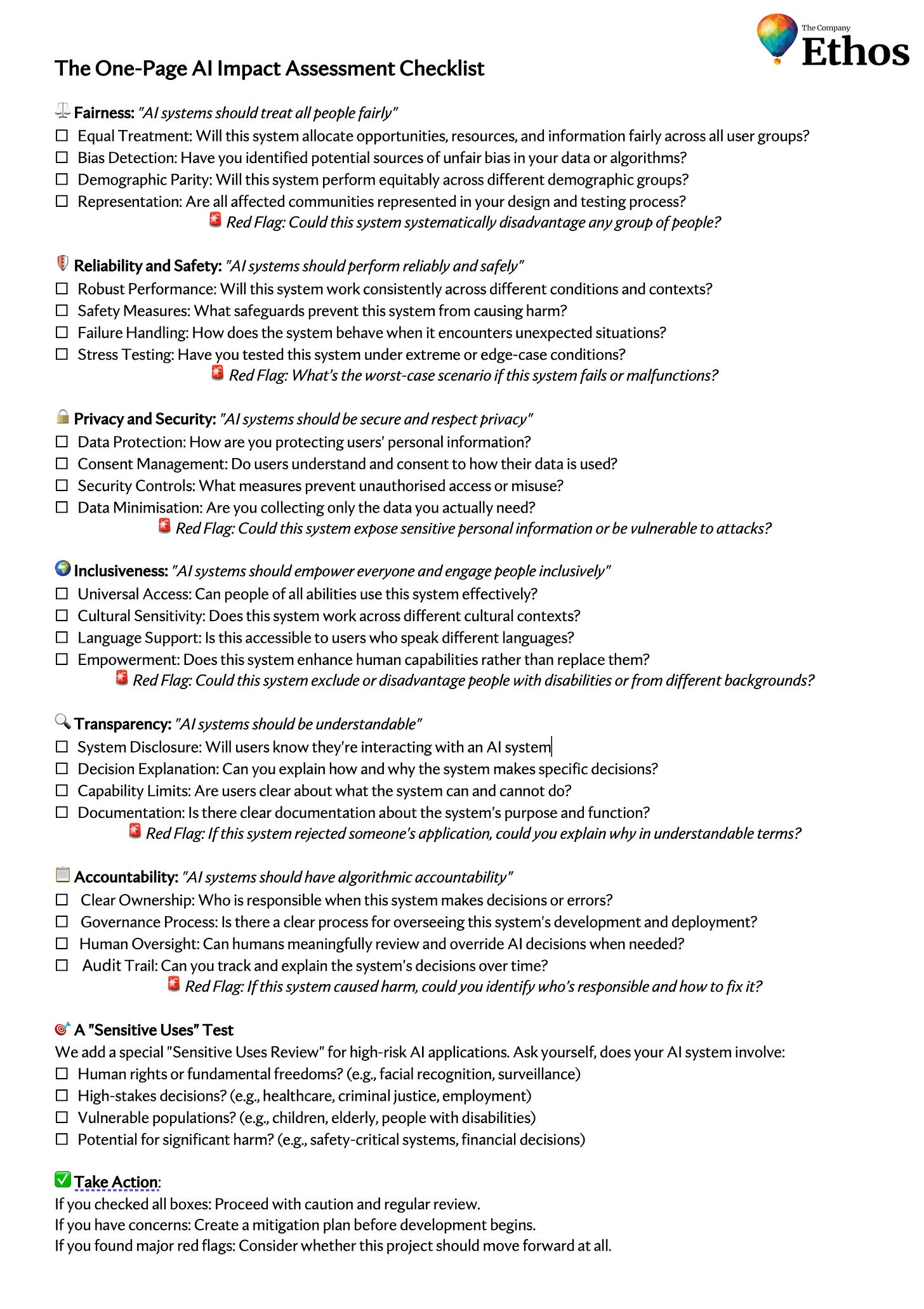

My suggestion: read the standard, internalise it, then set it to one side. You might also want to rip out Annex E and burn it (we already have enough dust-collecting paperwork without adding more to the pile). Instead, you might want to try a simpler, more effective approach that I know works: a one-page checklist.

New Standard => New Process (Don’t Do It)

It's easy to see why a new standard like ISO 42005 triggers calls for new processes and compliance infrastructure. After all, its intent is to provide guidance on how and when to perform AI system impact assessments, and it even offers a template in Annex E for documenting them. ISO 42001 (the standard for AI Management Systems) is fast becoming a market expectation and requires organisations to carry out AI system impact assessments. So you'd be forgiven for thinking you need to crack open that Annex E template and start completing it for every AI system you have.

But before you do, let's take a look at that template. What a template it is! Six pages long before you've written a single word. It asks for over 80 pieces of open-ended information: identifying and describing in detail all datasets used and their characteristics, the details of all algorithms and models, all intended uses with detailed descriptions and possible misuses, all features, all dependencies, everything about the deployment environment, performance evaluation, all stakeholders and their roles. By the time you finish reading the questions, you'll have forgotten what system you were supposed to be assessing. Completing an impact assessment like this would take weeks. It's a technocrat's dream and an engineer's nightmare.

You might think you need a dedicated taskforce to churn through that template for every AI application. But over-engineered compliance responses often lead to paperwork that doesn't change a system's design or outcomes. In complex organisations, there's a history of robust-on-paper processes that collapse in practice when they're isolated from real decision-making.

Australia's notorious Robodebt automated welfare-debt system had extensive formal controls and procedures, including documents eerily similar to an Impact Assessment[2]. Yet it failed catastrophically because critical warnings never reached the people with the power to act. Its thick stack of compliance documents was a facade, masking the lack of true oversight and feedback loops. More process doesn't automatically mean more safety, especially if that process lives in a silo.

Overly rigid, bureaucratic approaches to AI can create what I call "ethics theatre": elaborate documentation exercises that satisfy auditors yet do nothing to influence day-to-day engineering decisions. Any team considering ISO 42005 should focus on generating better outcomes, not just more paper. Auditors shouldn't be fooled by 50-page Impact Assessment documents that bear no relation to the systems actually built or how they're really operated. Even the best-intentioned AI Governance group can become a rubber-stamp factory if it's not woven into how products are actually built.

The solution isn't to build more rigidity and bureaucracy or create a silo of AI governance. Instead, weave AI governance into your organisation's existing fabric. Use the structures you already have: risk reviews, design approval meetings, quality gates. Augment them with AI impact considerations rather than spawning parallel hierarchies. When new AI-specific controls are needed, design them to complement and connect with existing governance rather than operating independently. This integrated approach takes effort, but it pays off by creating governance that works in practice, not just on paper.

Paperwork Without Purpose: The Pitfall of Over-Engineering

Why is bolting on a brand-new AI System Impact Assessment process so dangerous? Because process is only as good as its influence on real decisions. Time and again, I've seen over-engineered compliance responses result in beautifully formatted documents that no one reads, or that people read too late to matter.

A common anti-pattern is treating impact assessments as a one-time checklist completed right before product launch, just to satisfy a requirement. At that point, all the big design choices have been made, the models trained, the contracts signed. The impact assessment becomes a retrospective formality rather than a living tool to shape the product. This defeats the purpose. It's precisely the scenario where teams put on critical glasses and ask "What could go wrong?" only after everything is built. Such late-stage checkbox assessments rarely result in changes; they mostly result in thick reports filed away in compliance vaults.

The spirit of ISO 42005 is actually to avoid this outcome by making impact assessment an integral part of AI risk management and the system lifecycle. The standard explicitly guides organisations on integrating AI impact assessment into existing AI risk management and management systems rather than treating it as an isolated task. It emphasises performing assessments at appropriate times and coordinating with other existing processes like privacy or human rights impact assessments. ISO 42005 isn't asking for new bureaucracy. It's asking you to embed its practices into the way you already manage projects. Unfortunately, with so much emphasis on process, documentation and templates, it can easily be interpreted the wrong way.

Here’s what I think is a better way. If your company has a risk committee, an architecture review board, or a compliance review stage, the question should be: how do we infuse AI impact thinking into those forums? Perhaps it's as simple as adding a few key questions to the template used in product proposal documents, or ensuring that any proposal involving AI triggers an "impact discussion" in the next risk meeting. By piggybacking on structures that teams are already familiar with, you minimise friction and avoid the "here comes the compliance police" effect that standalone processes often generate.

Over-engineering also tends to slow down innovation without a commensurate gain in safety. Teams faced with cumbersome new forms and committees may start seeing safe, responsible AI as a tax on progress. They might attempt to do the minimum to satisfy the requirement, rather than embracing it as a helpful tool. We want teams to perform impact assessments because it helps them foresee landmines and build better products, not because they fear an administrative penalty.

Here's the paradox: a very heavy process can reduce actual safety, because it encourages teams to route around it or treat it perfunctorily. A lightweight, well-integrated approach avoids this by fitting into the team's workflow and adding value to their decision-making at the right times. But what would that look like?

A Lightweight Approach: Impact Assessment as a One-Page Checklist

So what's the alternative? My advice is to use a practical, streamlined approach to AI impact assessment that is lightweight, outcome-focused, and integrated from day one. I'd take a one-page checklist used as a catalyst for conversation and reflection over a 30-page report no one has time to write or read.

A one-page template focuses on the critical questions that actually shape design choices. It's concise enough to be filled out in a brainstorming meeting, yet rigorous enough to surface the major ethical and risk considerations. Crucially, this one-pager isn't a tick-box exercise (ironically, a 30-page report completed at the end of development is far more likely to be tick-box theatre). It's a prompt for meaningful discussion among the people building the system.

A project lead or product manager can draft it, but it should be discussed by a cross-functional team: engineers, product designers, risk officers, lawyers, business stakeholders. And crucially, this happens early in the project when there's still time to act on what you discover.

For my proposed checklist, I've based it on Microsoft's AETHER principles—the areas of focus used by Microsoft's AI ethics committee. These principles have been battle-tested in real product development at scale, which makes them practical rather than purely theoretical. The checklist fits on one page, so by design it forces prioritisation of the most salient points. You can always attach more detailed analysis if needed, but the core stays succinct.

Now, to be clear, I made these questions up myself from educated guesswork; this isn’t Microsoft’s checklist. (Admittedly, 16 years of direct experience within Microsoft, helping embed good governance into the engineering process, gives me some insight into what it might look like.) But you could just as easily adapt this to whatever principles are important in your organisation.

One page, focused on the critical factors of AI impact. You might be thinking this sounds familiar—similar to privacy impact assessments and other structured review tools. There's good reason for that. It should align with well-known methods so teams aren't learning entirely new frameworks.

Here's the key insight: standards like ISO 42005 and frameworks like IEEE 7010 can work behind the scenes to ensure your checklist is comprehensive without making it cumbersome. IEEE 7010[3] focuses on assessing well-being impacts of AI systems, emphasising identification of unintended users, uses, and impacts on human well-being. ISO 42005 was developed to cover individuals and societies affected by an AI system's intended and foreseeable uses. The questions in our one-page checklist capture exactly these considerations. They just do it in a digestible way that teams will actually use.

This approach lets you truthfully say you're addressing ISO 42005's requirements, not by slavishly following a new bureaucracy, but by demonstrating that every AI project gets assessed for impacts in a consistent, structured manner. Simple doesn't mean superficial. It means focused on what matters.

Early and Often: Using the Checklist Without Slowing Delivery

A key advantage of the one-page checklist approach is its agility. Because it’s lightweight, teams can use it early and repeatedly without feeling bogged down. In practice, I think you should introduce the impact checklist in the ideation or design phase of a project, as soon as you have a concept of what the AI will do. This might even be at a storyboarding session or initial architecture design review. The checklist at this stage will prompt questions and maybe some quick research or testing (e.g. “Hey, do we have any data that could be biased? Let’s double-check that before we proceed.”). By catching such issues at the whiteboard stage, you prevent costly rework later.

It’s the AI equivalent of “shift-left” in software quality: address problems while everything is still on paper and easy to change. I noticed that Microsoft’s Responsible AI program requires an impact assessment to be completed early in development, when defining the product concept, not at the last minute. Teams at Microsoft must fill out an impact assessment template and have it reviewed before they get too deep into building[4]. I don’t know; perhaps they do fill out a form as exhaustive as ISO 42005 Annex E at the envisioning stage, but somehow I doubt it (they wouldn’t have most of the information needed to do so at that stage).

The checklist I’m suggesting isn’t one-and-done. Think of it as a living document that can be revisited at major project milestones. For instance, check in on it during model training/selection (“Have new risks emerged with this model choice?”), before user testing (“Did our pilot reveal any unintended negative impacts?”), and before launch (“Final review – are all mitigation actions in place?”). Each time, it shouldn’t take long to update the checklist and discuss. By integrating these checkpoints into the project timeline (perhaps aligning with existing stage-gate reviews or demos), you make impact assessment a habit, not a hurdle. Teams remain aware of their ethical guardrails throughout development, which actually accelerates delivery in the long run: it’s much faster to tweak a design early than to recall or patch a product post-launch due to an oversight. Early rigorous assessment can prevent embarrassing rollbacks or public failures that cause far bigger delays. In other words, doing it right the first time is the fastest route to success.
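To make the “living document” idea a little more concrete, here is a minimal Python sketch, assuming the checklist is kept as structured data alongside the project rather than as a standalone report. The class name, the green/amber/red scale and the question ids are all hypothetical illustrations, not the author’s actual checklist. Each milestone review stores a snapshot and returns only what changed, which is what the team actually needs to discuss at that checkpoint.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical traffic-light scale; reuse whatever your risk process already uses.
RATINGS = ("green", "amber", "red")


@dataclass
class ImpactChecklist:
    """A living one-page checklist: question id -> current rating,
    plus a snapshot of the whole checklist after each milestone review."""

    ratings: dict[str, str] = field(default_factory=dict)
    history: list[tuple[str, dict[str, str]]] = field(default_factory=list)

    def review(self, milestone: str, updates: dict[str, str]) -> dict[str, tuple[str | None, str]]:
        """Record a milestone review and return only what changed:
        question id -> (previous rating, or None if new; new rating)."""
        for question, rating in updates.items():
            if rating not in RATINGS:
                raise ValueError(f"{question}: unknown rating {rating!r}")
        changes = {
            question: (self.ratings.get(question), rating)
            for question, rating in updates.items()
            if self.ratings.get(question) != rating
        }
        self.ratings.update(updates)
        # Store a copy so later reviews don't rewrite earlier snapshots.
        self.history.append((milestone, dict(self.ratings)))
        return changes


# Example: the question ids are hypothetical placeholders.
checklist = ImpactChecklist()
checklist.review("ideation", {"training_data_bias": "amber", "harm_from_errors": "green"})
changes = checklist.review("model selection", {"training_data_bias": "red"})
print(changes)  # {'training_data_bias': ('amber', 'red')}
```

Keeping one snapshot per milestone also leaves a lightweight trail of how the assessment evolved, without anyone writing a separate report.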

The Cost of Siloed or Token Efforts: Apple Card’s Lesson

Don't get me wrong. I think assessing impact and deciding how to avoid negative impacts is essential: too important to be allowed to become a silo or an afterthought.

What happens when you treat impact assessment as an afterthought? The Apple Card saga provides a telling example. When Apple launched its AI-driven credit card, customers quickly reported that women were receiving far lower credit limits than men with similar financial backgrounds. Apple and Goldman Sachs' response[5][6] was essentially "the algorithm doesn't use gender as an input, so it can't be discriminatory."

This "fairness through unawareness" defence completely missed the point. By failing to actively look for indirect bias, they had no insight into how their model treated different demographics. The result: public outcry, regulatory investigation, and lasting reputational damage for Apple—a brand that carefully manages its image.

A proper impact assessment would have flagged this during development: "creditworthiness model—check for gender bias, test on subgroups, ensure explainability." Instead, they ended up doing crisis management under far less favourable conditions. If you don't integrate impact and risk checks into AI development from the earliest stages, reality will integrate them for you via scandal or failure.
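The “test on subgroups” step is the kind of check that can be automated. Below is a minimal Python sketch, assuming you hold a protected attribute for evaluation purposes even though the model never sees it as an input. The function name, the income-band stratification (a crude stand-in for “similar financial backgrounds”) and the 0.8 threshold (borrowed loosely from the “four-fifths” rule of thumb used in US employment selection) are all assumptions, not Apple’s or Goldman Sachs’ method.

```python
from collections import defaultdict
from statistics import mean


def limit_ratio_by_band(records, threshold=0.8):
    """Compare average credit limits between groups within each income band.

    records: iterable of (income_band, group, credit_limit) tuples.
    Returns {income_band: ratio} for bands where the lowest group average
    divided by the highest group average falls below `threshold`.
    """
    limits = defaultdict(lambda: defaultdict(list))
    for band, group, limit in records:
        limits[band][group].append(limit)

    flagged = {}
    for band, groups in limits.items():
        if len(groups) < 2:
            continue  # nothing to compare in this band
        averages = [mean(values) for values in groups.values()]
        ratio = min(averages) / max(averages)
        if ratio < threshold:
            flagged[band] = round(ratio, 2)
    return flagged


# Illustrative, made-up data: gender is NOT a model input, but it is
# still recorded for evaluation so that outcomes can be compared.
records = [
    ("50-75k", "female", 5_000), ("50-75k", "female", 6_000),
    ("50-75k", "male", 10_000), ("50-75k", "male", 12_000),
    ("75-100k", "female", 15_000), ("75-100k", "male", 16_000),
]
print(limit_ratio_by_band(records))  # {'50-75k': 0.5}
```

A flagged band isn't proof of discrimination; it's a prompt for exactly the impact discussion the checklist is meant to trigger, held while there is still time to act on it.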

Standards like ISO 42005 and IEEE 7010 are extremely useful—but only if implemented thoughtfully. They provide structured ways to think about AI impacts and can ensure you're not missing major categories of risk. But don't treat compliance with the standard as the finish line. The goal isn't to generate an ISO 42005-compliant document and celebrate. The goal is to actually reduce the risk of harm from your AI system and increase its positive value.

Use these standards as checklists for your checklist. Ensure your one-page template covers the areas they expect: societal impacts, stakeholder identification, well-being outcomes. Map your internal process to these standards if needed—annotate your impact assessment template to show which section of ISO 42005 it satisfies.

But don't create a separate, heavyweight "ISO 42005 AI system impact assessment process" distinct from your normal product risk process. When audit time comes, you can confidently show how your integrated process meets the standard's expectations. This demonstrates that AI governance is part of your company's DNA, not a bolted-on appendix.

Actionable Steps to Operationalise ISO 42005

So here are my practical recommendations for organisations looking to respond to ISO 42005 without falling into the silo trap:

Integrate with Existing Risk Governance: Start by mapping out your current risk and quality processes (e.g. project risk assessments, design reviews, compliance checks). Figure out where AI impact assessment questions can be slotted in naturally. For instance, add an “AI Impact” section to the template used for architectural reviews or product approval forms. This ensures discussions happen in the forums teams already know.

Adopt a One-Page AI Impact Checklist: Use a concise checklist (like the one outlined above) as the primary tool for AI impact assessment. Make it standard practice for every AI-related project to complete this checklist at kickoff and update it periodically. Host the checklist in your existing project documentation system so it’s visible and version-controlled.

Embed Cross-Functional Review Early: Don’t isolate the impact assessment with one “Responsible AI Officer.” Instead, involve the project team and relevant domain experts in reviewing it. If you have a security review board or privacy committee, loop them in when relevant rather than convening an entirely separate panel. Early engagement of legal, compliance, and domain experts will catch issues and also educate the project team on those considerations.

Use Tools for Efficiency: A lightweight approach can still use tools. Automating parts of the assessment (like generating bias metrics or robustness statistics) integrates it further into the engineering workflow, so it feels less like documentation and more like testing.

Ensure Leadership Support and “Teeth”: Management must reinforce that this integrated impact assessment is not optional. There should be teeth to governance – if a high risk is identified and left unmitigated, leaders should be ready to delay a launch or mandate a fix: “Any project with an unresolved red flag on the impact checklist must be approved by the Risk Committee before release.” Governance must be empowered to shape decisions, not just to create artifacts or offer hand-waving assurance to clients. Teams should know that ignoring the checklist isn’t an option, and that completing it honestly is rewarded.

Continuously Improve the Process: Gather feedback from teams on the checklist’s effectiveness. Maybe some questions seem irrelevant, or new issues (like environmental impact or supply-chain ethics) need to be added as the field evolves. Update your process and templates in line with evolving standards (ISO 42005 will evolve too) and with learnings from any incidents your organisation experiences or observes in the industry.
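The “teeth” rule from the leadership recommendation is simple enough to enforce automatically at a quality gate you already run. Here is a minimal Python sketch, assuming checklist items are stored as structured data; the `ChecklistItem` fields and the green/amber/red scale are hypothetical. The function only reports blockers; how your pipeline reacts (failing a build, routing to the Risk Committee) is left to your existing release process.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    question: str
    rating: str                            # hypothetical scale: "green", "amber" or "red"
    mitigated: bool = False                # mitigation in place and verified
    risk_committee_approved: bool = False  # explicit sign-off to accept the residual risk


def release_blockers(items):
    """Return the questions that still block release under the rule:
    an unresolved red flag must be approved by the Risk Committee."""
    return [
        item.question
        for item in items
        if item.rating == "red"
        and not item.mitigated
        and not item.risk_committee_approved
    ]


# Example checklist state; the question ids are hypothetical placeholders.
items = [
    ChecklistItem("training_data_bias", "red"),
    ChecklistItem("explainability", "red", mitigated=True),
    ChecklistItem("foreseeable_misuse", "red", risk_committee_approved=True),
    ChecklistItem("harm_from_errors", "amber"),
]
print(release_blockers(items))  # ['training_data_bias']
```

In a CI or stage-gate step, one option is to exit with a non-zero status when the list is non-empty, so the gate lives where teams already look rather than in a separate compliance tool.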

Standards and regulations will keep emerging in the AI space (the EU AI Act, IEEE guidelines, future ISO norms). I find that the organisations I work with that thrive are those that internalise the principles behind these rules and implement them in agile, practical ways. They’re not the ones with the fat compliance manuals, or even, honestly, the ones with certifications and reports – they’re the ones where engineers, data scientists, product managers, lawyers and risk officers talk to each other regularly and candidly about “what could go wrong” and “how do we make this better.” Impact assessment, done right, is a powerful tool for innovation, not a brake on it. It shines light on blind spots early, leading to AI systems that are more robust, equitable, and worthy of users’ trust.

Keep it outcome-focused, keep it integrated. In the end, the measure of success won’t be how well you filled out a 30-page report that nobody reads – it will be whether your AI systems operate with fewer nasty surprises and greater benefit to society.

Thank you for reading this. I'm grateful to my clients and fellow AI practitioners who've shared their perspectives and insights with me over the past few weeks as I've developed these ideas. Your real-world perspectives have made this article and the checklist so much better.

I hope you find this useful in your own AI governance journey. If you have feedback or suggestions, I'd treasure hearing from you. And as always, if you're interested in more practical guidance on implementing AI responsibly in your organisation, please do subscribe to ethos-ai.org for future updates and resources.

[1] https://www.iso.org/standard/44545.html

[3] https://ieeexplore.ieee.org/document/9283454

[4] https://cdn-dynmedia-1.microsoft.com/is/content/microsoftcorp/microsoft/final/en-us/microsoft-brand/documents/Microsoft-Responsible-AI-Standard-General-Requirements.pdf?culture=en-us&country=us#:~:text=,system%27s%20development%2C%20typically%20when

[5] https://www.washingtonpost.com/business/2019/11/11/apple-card-algorithm-sparks-gender-bias-allegations-against-goldman-sachs/

[6] https://www.rand.org/pubs/commentary/2019/11/did-no-one-audit-the-apple-card-algorithm.html#:~:text=policing,automatically%20render%20the%20algorithm%20unbiased
