AI Governance Mega-map: Security and Privacy

Diving into the technical control domains of our AI governance compliance mega-map, drawing on ISO 42001/27001/27701, SOC2, the NIST AI RMF and the EU AI Act

In my previous article [1], I went through the foundational domains of AI governance - the leadership structures, risk frameworks, and regulatory operations that provide the scaffolding for responsible AI development. But having strong leadership and clear policies isn't enough on its own. We need to translate those high-level commitments into robust technical safeguards that protect both systems and stakeholders.

In this article, I'll move on to the next two critical technical domains that do exactly that: Security and Privacy. These domains represent the practical implementation of our governance principles - where high-level policies start to meet concrete technical controls. Security controls protect AI systems from tampering and manipulation, while privacy safeguards ensure responsible handling of personal data.

The interconnected nature of these domains reflects the complexity of modern AI systems. A security breach can compromise privacy, while weak privacy controls can enable harmful uses and even create security vulnerabilities. By examining and mapping these domains together, we'll see how they work in concert to create comprehensive protection.

Let's dive into each domain in detail.

Security

SE-1 Security Governance, Architecture and Engineering

The organisation shall establish and maintain a comprehensive security governance framework that encompasses security risk management, security policies, standards, and architectures that guide the implementation of security controls across the organisation. The organisation shall ensure continuous monitoring of control effectiveness, manage security incidents, and maintain business continuity capabilities while overseeing third-party security requirements.

SE-2 Identity & Access Management

The organisation shall implement and maintain comprehensive identity and access management controls governing authentication, authorisation, and access monitoring across all systems and applications. This includes the complete lifecycle of identity management from screening to provisioning through deprovisioning, ensuring appropriate access levels are maintained and regularly reviewed. The organisation shall implement strong authentication mechanisms and maintain detailed access logs for all critical systems.

SE-3 Software Security

The organisation shall ensure all software development and deployment activities follow secure development practices throughout the system development lifecycle. This includes implementing secure coding standards, conducting security testing, managing secure configurations, and maintaining robust change management procedures for all production systems. The organisation shall regularly assess applications for security vulnerabilities and maintain secure development environments.

SE-4 Data Security

The organisation shall protect data throughout its lifecycle using appropriate technical and procedural controls, including classification, encryption, and secure handling procedures. This encompasses structured and unstructured data across all storage locations and transmission paths. The organisation shall maintain comprehensive data protection mechanisms, including backup systems, encryption standards, and secure disposal procedures, while ensuring appropriate data classification and handling requirements are enforced.

SE-5 Network Security

The organisation shall implement and maintain comprehensive network security controls to protect against unauthorised access, ensure secure communications, and maintain the confidentiality and integrity of data in transit. This includes implementing secure network architectures, maintaining network monitoring capabilities, and ensuring appropriate network segmentation. The organisation shall regularly assess network security controls and maintain comprehensive network logging and monitoring capabilities.

SE-6 Physical Security

The organisation shall implement and maintain comprehensive physical security controls to protect information assets, including facilities, equipment, and supporting infrastructure from unauthorised access and environmental threats. This includes maintaining secure physical perimeters, implementing environmental controls, monitoring physical access, and ensuring appropriate protection for equipment and supporting infrastructure.

Security remains one of the most pivotal and complex domains in AI governance, because you have to safeguard not only traditional IT infrastructure but also the unique components of AI systems: models, training data, and specialised hardware. ISO 27001 and ISO 27701 provide foundational security and privacy best practices, while SOC2 emphasises rigour in control effectiveness, continuous monitoring and cross-functional collaboration. Although ISO/IEC 42001 and the EU AI Act do not list discrete “security controls” in the same way, they both stress that high-risk AI systems demand protective measures to guard against emergent threats like adversarial attacks; security is implied rather than enumerated. The same is true of the NIST AI RMF, because security is addressed by many other NIST publications, in particular NIST SP 800-53 [2]. Mapping these master controls to the vast library of that standard is a job for another day.

Security Governance, Architecture, and Engineering (SE-1)

At the highest level, a comprehensive security governance framework sets the tone for how an organisation manages threats, incidents, and ongoing compliance. ISO 27001 (A.5, A.6, A.7, A.12, A.17, and A.18) collectively prescribe policies, standards, and architectures to guide security controls, while ISO 27701 (14.2.1 and 14.2.2) extend these principles to incorporate privacy considerations. SOC2 similarly underscores the importance of documented governance structures, continuous monitoring, and security incident response (CC2, CC3, CC7). In an AI context, these controls need to take into account some particular AI security risks—such as the possibility that machine learning models can be manipulated via training data or exploited to leak proprietary information. Ensuring that security governance explicitly covers AI engineering processes—like model versioning, testing, and deployment pipelines—can help organisations move beyond generic IT governance to address AI-specific vulnerabilities.

Identity & Access Management (SE-2)

Strong identity and access controls are needed to prevent unauthorised manipulation of AI models or data. ISO 27001 (A.9.1–A.9.4) outlines user provisioning, least-privilege principles, and privileged access reviews, while ISO 27701 (9.2.1, 9.2.2, 9.2.4) adds further data protection requirements for personal information. Under SOC2 (CC6), organisations also need strong authentication mechanisms and detailed access logs. AI systems often process large, sensitive datasets, so it is crucial to implement robust credentialing and monitoring that ensure only authorised, screened personnel can alter training data, adjust model hyperparameters, or deploy new versions. Detailed logs of these activities become critical when investigating anomalies or suspected adversarial interference.
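To make that concrete, here is a minimal sketch of a permission check that records every decision in an audit log. The role names, permission names and the `authorise` function are all hypothetical, chosen only to mirror the activities mentioned above; a real deployment would source roles from its IAM system and ship logs to tamper-resistant storage.

```python
import json
import logging
from datetime import datetime, timezone

# Hypothetical role-to-permission map (an assumption for illustration only).
ROLE_PERMISSIONS = {
    "data_scientist": {"read_training_data", "adjust_hyperparameters"},
    "ml_engineer": {"read_training_data", "deploy_model"},
    "data_steward": {"read_training_data", "alter_training_data"},
}

audit_log = logging.getLogger("ai_access_audit")


def authorise(user: str, role: str, action: str, resource: str) -> bool:
    """Return True if the role permits the action, logging every decision."""
    # Unknown roles get an empty permission set, so they are denied by default.
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "decision": "allow" if allowed else "deny",
    }
    # Denials are logged at WARNING so they stand out during an investigation.
    level = logging.INFO if allowed else logging.WARNING
    audit_log.log(level, json.dumps(record))
    return allowed
```

Logging both allowed and denied requests is a deliberate choice here: a run of denials can be an early sign of probing, while the allow records are what you reconstruct events from after an incident.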

Software Security (SE-3)

Secure software development must account for both conventional application code and specialised machine learning pipelines. ISO 27001 (A.14.1–A.14.2, A.12.2) addresses secure coding, change management, and testing procedures, while ISO 27701 (14.2.1, 14.2.2) integrates privacy engineering practices into the development lifecycle. SOC2 (CC5, CC7) reinforces these requirements by requiring continuous testing, vulnerability scanning, and robust deployment practices. Going beyond typical software concerns—like SQL injection or buffer overflows—AI developers have to guard against adversarial attacks such as data poisoning, where malicious inputs degrade a model’s performance or cause it to behave unexpectedly. Incorporating secure coding reviews, specialised AI threat modelling, and adversarial testing within the software development lifecycle can help mitigate these novel risks.
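One small building block for this is an integrity manifest for training data. The sketch below is an illustration under my own assumptions (the function names and the in-memory `dict` of file contents are hypothetical): it hashes each file when a dataset is approved and reports anything that has changed before training starts. It only detects modification after approval; it cannot tell you whether the data was already poisoned when it was approved.

```python
import hashlib


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(content).hexdigest() for name, content in files.items()}


def find_tampering(approved: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two manifests and report modified, added and missing files."""
    shared = approved.keys() & current.keys()
    return {
        "modified": sorted(name for name in shared if approved[name] != current[name]),
        "added": sorted(current.keys() - approved.keys()),
        "missing": sorted(approved.keys() - current.keys()),
    }
```

In a pipeline, the approved manifest would be stored somewhere the training job cannot write to, and the job would refuse to run if any of the three lists is non-empty.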

Data Security (SE-4)

AI systems of course depend heavily on data, making data security—covering everything from encryption and classification to secure disposal—especially critical. ISO 27001 (A.8.1–A.8.7, A.10.1–A.10.2, A.12.4–A.12.5, A.14.3) lays out asset management, cryptographic controls, and operational procedures, while ISO 27701 (8.2.4, 8.3.1–8.3.3, 10.2.1–10.2.2, 11.2.2) adds privacy-specific obligations. SOC2 (CC6, P5) further highlights classification standards, encryption at rest and in transit, and secure disposal. In an AI context, you have to be vigilant not only about data “in use” (for training or inference) but also about the intermediate states—like model checkpoints—where sensitive information might be inferred or exfiltrated. Proper data classification helps identify which datasets are too sensitive for certain AI projects, while robust encryption and secure storage reduce the risk of unauthorised access or leaks.
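The classification point can be sketched as a simple gate: each dataset carries a sensitivity label, each AI project has a ceiling, and anything above the ceiling is blocked. The four levels and the `datasets_allowed` function are assumptions for illustration, not a scheme taken from any of the standards above.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical four-level scheme; higher values are more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


def datasets_allowed(
    datasets: dict[str, Classification],
    project_ceiling: Classification,
) -> tuple[list[str], list[str]]:
    """Split datasets into those a project may use and those that are too sensitive."""
    allowed = sorted(name for name, level in datasets.items() if level <= project_ceiling)
    blocked = sorted(name for name, level in datasets.items() if level > project_ceiling)
    return allowed, blocked
```

For example, a project cleared up to `CONFIDENTIAL` would be allowed a public web crawl and confidential support tickets, but a dataset labelled `RESTRICTED` would land in the blocked list.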

Network Security (SE-5)

As AI workflows often span distributed systems, from edge devices to cloud-based training clusters, network security measures have to be in place for secure communications and robust segmentation. ISO 27001 (A.13.1–A.13.3, A.12.4, A.12.6, A.10.1–A.10.2) mandates controls for network segregation, intrusion detection, and cryptographic protections, and ISO 27701 (13.2.1) extends these to personal data in transit. Meanwhile, SOC2 (CC6.6, CC7) focuses on continuous monitoring and anomaly detection. In an AI environment, these controls should address the risk of unauthorised data injection into training pipelines or the exfiltration of proprietary models. Organisations may need additional monitoring to detect unusual patterns of data transfer—like large-scale downloads of training sets or repeated attempts to query an AI model in ways that could reveal sensitive parameters.
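As a rough illustration of the monitoring idea, the sketch below flags any client whose query volume within a sliding time window exceeds a threshold. The class name, the threshold and the window length are hypothetical; real detection of model-extraction attempts would look at much more than raw volume.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients whose query volume in a sliding window exceeds a threshold."""

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._events: dict[str, deque] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query and return True if the client is now over the threshold."""
        events = self._events[client_id]
        events.append(timestamp)
        # Drop events that have fallen out of the window ending at `timestamp`.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_queries
```

With `max_queries=3` and a 60-second window, a client's fourth query inside the same minute is flagged, while other clients are tracked independently.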

Physical Security (SE-6)

Physical threats, though sometimes overlooked in AI discussions, remain just as real for compromising data or hardware. If an attacker gets physical access, all bets are off. ISO 27001 (A.11.1–A.11.5) provides measures for secure areas, environmental controls, and equipment security, while ISO 27701 (11.2.1) reinforces these measures for data-bearing media. SOC2 (CC6.1) likewise stresses limiting physical access to authorised individuals only. With AI hardware often residing in specialised data centres or labs equipped with high-value GPU/TPU clusters, physical breaches could enable attackers to extract or tamper with sensitive training data or model weights. Rigorous physical controls, including biometric access, video surveillance, and environmental monitoring, make sure that these systems remain both operationally sound and resistant to theft or sabotage. Realistically, though, the data centre is an unlikely target for physical attack; it is far easier to walk into an open office or steal a laptop from a data scientist. So don’t focus only on the data centre: most physical security attacks target a person or an office environment.

Privacy

PR-1 Privacy by Design and Governance

The organisation shall implement privacy by design principles in all AI systems, ensuring privacy considerations are embedded from initial planning through system retirement. This includes establishing and maintaining comprehensive privacy policies, conducting privacy impact assessments, defining clear privacy roles and responsibilities, and integrating privacy requirements into project management processes. The organisation shall establish privacy governance structures, maintain documentation of privacy decisions, regularly review privacy controls for effectiveness, and ensure special categories of personal data are processed only when strictly necessary and with appropriate safeguards. Senior management shall demonstrate commitment to privacy through resource allocation and oversight of privacy initiatives.

PR-2 Personal Data Management

The organisation shall implement operational processes for the responsible collection, use, storage, and disposal of personal data in AI systems. This includes maintaining data inventories, implementing data classification schemes, managing data retention schedules, and ensuring appropriate data handling throughout the information lifecycle. The organisation shall obtain and maintain records of consent for data processing, provide individuals with access to their data, implement processes for handling data subject requests, and ensure data quality standards are maintained. Clear procedures for data minimisation, purpose limitation, and secure disposal shall be established and followed.

PR-3 Privacy Compliance and Monitoring

The organisation shall establish processes to monitor compliance with privacy requirements, detect and respond to privacy incidents, and ensure continuous improvement of privacy controls. This includes conducting regular privacy audits, monitoring data processing activities, managing privacy incidents, ensuring supplier compliance with privacy requirements, and maintaining business continuity plans that address privacy considerations. The organisation shall implement privacy metrics, conduct regular assessments, comply with breach notification requirements, and demonstrate ongoing compliance with applicable privacy regulations through documented evidence.

PR-4 Privacy-Enhancing Technologies and Mechanisms

The organisation shall implement appropriate technical mechanisms and privacy-enhancing technologies in AI systems to protect personal data and ensure privacy by default. This includes implementing encryption for data at rest and in transit, role-based access control mechanisms, data minimisation techniques, anonymisation and pseudonymisation methods, and secure deletion capabilities. The organisation shall ensure these mechanisms are appropriate for the sensitivity of the data, regularly tested for effectiveness, and updated as privacy-enhancing technologies evolve. This extends to how technical controls are documented, validated, and integrated into the system architecture to provide defence in depth for privacy protection.

Privacy in AI systems represents a fascinating intersection of individual rights, technical innovation, and ethical responsibility. While many of the same principles from traditional data protection still apply, AI brings new complexities, such as the capacity to infer sensitive traits from seemingly benign data. Standards like ISO 27701 offer detailed guidance on integrating privacy into an organisation’s existing information security management system. The EU AI Act calls for safeguarding personal data in high-risk AI contexts and emphasises transparency and accountability, while rightly leaving most privacy requirements to the already well-established GDPR. (I chose not to map to GDPR, largely because of the very strong overlap between ISO 27701 and GDPR.) SOC2 provides operational criteria much more focused on monitoring control effectiveness. Taken together, although neither ISO 27701 nor SOC2 makes any specific reference to AI, they still provide a strong lifecycle approach to privacy that can be applied from initial data collection and model training to deployment, monitoring, and eventual retirement of AI systems.

PR-1: Privacy by Design and Governance

Privacy by design has emerged as a central theme in AI governance. ISO 27701 (6.1.1, 6.1.2, 6.2.1–6.2.4, 7.2.1–7.2.3, 7.2.5, 7.2.7, 12.2.1, A.7.4.1–A.7.4.9, B.8.4) provides a roadmap for embedding privacy considerations at every stage—from initial planning to eventual decommissioning. These clauses cover essential activities like privacy impact assessments, defined privacy roles, and ongoing reviews of privacy controls. In parallel, the EU AI Act (Articles 10.5, 26.9, 27.4) stresses that organisations must address privacy risks specifically in high-risk AI systems, mandating appropriate safeguards for sensitive categories of personal data. Of course, in reality the majority of regulatory requirements in Europe in this regard are governed by GDPR and other international equivalents. SOC2 (P1.1–P1.3) echoes the importance of establishing governance structures that document and regularly review privacy decisions, ensuring that senior management allocates the resources needed to sustain privacy initiatives. In practice, this means integrating privacy requirements into project management processes so that privacy is not an afterthought but a fundamental design principle.

PR-2: Personal Data Management

The second privacy master control focuses on operational processes for collecting, storing, and disposing of personal data responsibly. ISO 27701 (8.2.1–8.2.4, 8.3.1–8.3.3, A.7.2.1–A.7.2.8, A.7.3.1–A.7.3.10, 7.4.5, A.7.5.1–A.7.5.4, B.8.2, B.8.3, B.8.4.2, B.8.5) provides detailed requirements on data inventories, retention schedules, consent records, and data subject access requests—making it clear that personal data must be tracked across its entire lifecycle. This aligns with the principle of “purpose limitation,” ensuring that AI models only use data for legitimate, stated objectives. In a high-velocity AI environment, robust data management also includes ensuring data quality: inaccurate or outdated data can lead to biased models and compromised privacy. SOC2 privacy criteria (P2.1–P2.2, P3.1–P3.3, P4.1–P4.3) reinforce how organisations have to implement clear procedures for data minimisation, anonymisation, and secure disposal—especially relevant if an AI project no longer needs a dataset for training.
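A data inventory with retention schedules can be sketched in a few lines. The record fields and the `due_for_disposal` function below are hypothetical, intended only to show how purpose and retention can be tracked per dataset so that expired data is surfaced for secure disposal.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DatasetRecord:
    """One inventory entry; the fields are illustrative assumptions."""
    name: str
    purpose: str          # the stated purpose the data was collected for
    collected_on: date
    retention_days: int   # how long the data may be kept


def due_for_disposal(inventory: list[DatasetRecord], today: date) -> list[str]:
    """Return names of datasets whose retention period has expired as of `today`."""
    return [
        record.name
        for record in inventory
        if record.collected_on + timedelta(days=record.retention_days) < today
    ]
```

Recording the purpose next to each dataset also gives reviewers something to check a new AI project against before it reuses existing data.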

PR-3: Privacy Compliance and Monitoring

Even the best-laid privacy plans require continuous oversight to ensure ongoing compliance and readiness for incidents. ISO 27701 (12.2.2, 15.2.1, 16.2.1–16.2.2, 17.2.1, 18.2.1–18.2.2, A.7.2.5–A.7.2.7, A.7.3.6, B.8.2.4, B.8.2.5) outlines processes for auditing privacy controls, monitoring data processing, and managing privacy incidents. This includes timely breach notification and documented evidence of compliance. In the AI realm, monitoring can be particularly challenging: models may evolve over time or infer new insights about individuals, making it essential to maintain real-time awareness of how data is processed. SOC2 (P6.1–P6.5) underscores the need for metrics, periodic assessments, and supplier compliance reviews, including making sure that third-party vendors or cloud providers also meet privacy obligations. This focus on transparency and accountability aligns with the EU AI Act’s broader call for oversight mechanisms that can adapt to emergent AI-specific risks.

PR-4: Privacy-Enhancing Technologies and Mechanisms

Lastly, the frameworks converge on the need for technical solutions, often called privacy-enhancing technologies (PETs), that enable AI systems to learn from data while minimising privacy risks. ISO 27701 (9.2.1–9.2.4, 10.2.1–10.2.2, 11.2.1–11.2.2, 13.2.1, 14.2.1–14.2.2, A.7.4.5, A.7.4.9, B.8.4.3) details how encryption, pseudonymisation, and secure deletion can be embedded into systems, while also addressing emerging PETs like differential privacy. SOC2 (P5.1–P5.6) reinforces that these technologies must be tested, updated, and validated, ensuring they remain effective against evolving threats. For AI specifically, PETs can include federated learning, which allows distributed model training without centralising sensitive data, or secure multi-party computation, which enables data collaboration without exposing raw personal information. By integrating these technologies, you may be able to reduce the tension between using large datasets for AI training and maintaining strict privacy protections. These technical measures should also be paired with robust documentation of how they are configured and audited, so that if regulators or stakeholders inquire, you can demonstrate how privacy is preserved.
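Two of the techniques named above are small enough to sketch with the Python standard library. The first function pseudonymises an identifier with a keyed hash (HMAC-SHA256); the second adds Laplace noise to a count, which is the textbook mechanism for differential privacy on counting queries. Function names and parameters are my own assumptions, and this is a teaching sketch rather than a production implementation: key management, privacy budget accounting and floating-point hardening are all out of scope.

```python
import hashlib
import hmac
import random


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash so records can still be joined."""
    # Whoever holds the key can re-link records, so the output is
    # pseudonymous rather than anonymous.
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon  # Laplace scale b = sensitivity / epsilon
    # The difference of two exponentials with mean b is Laplace(0, b).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise
```

Smaller values of `epsilon` mean more noise and stronger protection, so the parameter makes the tension between utility and privacy explicit and auditable.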

So that’s five of our master control domains covered, almost halfway: leadership accountability, risk management, regulatory operations, security and privacy. Think of all those as building the essential infrastructure that ultimately supports responsible AI: the leadership vision that guides development, the risk frameworks that identify potential pitfalls, the regulatory processes that ensure compliance, and the technical controls that protect both systems and stakeholders.

In the next article, I'll go into two domains that sit at the very heart of responsible AI development: Safe & Responsible AI practices that prevent unintended harm, and System, Data & Model Lifecycle controls that ensure quality and accountability throughout an AI system's existence. I encourage you to subscribe for these upcoming insights. I’d love to hear what challenges you have encountered implementing these foundational controls in your organisation.

Thanks for reading!

Full Control Map Download

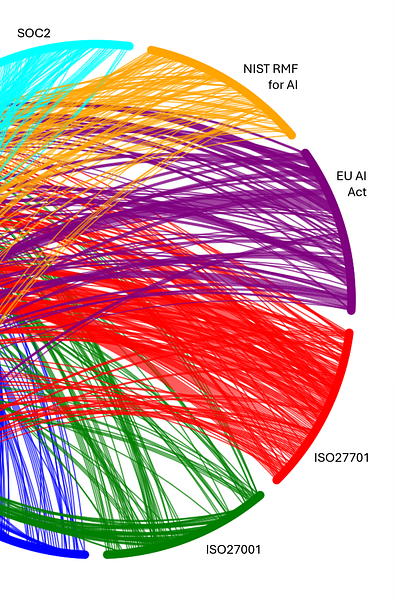

You can download the controls map in an Excel spreadsheet below. In the final article of this series, though, I’ll share a full open-source repository of charts, mappings in JSON and OSCAL format, and Python code so that you can modify this spreadsheet to your own needs, expand the frameworks included, generate mappings for export, and create chord diagrams like the one I’ve provided here. Please do subscribe, so I can notify you of that release.
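Until that repository is available, here is a minimal sketch of how a few of the mappings cited in this article could be held as data and exported to JSON. The structure, the `CONTROL_MAP` name and the helper functions are my own assumptions for illustration and not the format of the forthcoming repository; only a subset of controls and references is included.

```python
import json

# Illustrative subset of the mappings cited in this article.
# The layout is a hypothetical structure, not the repository's schema.
CONTROL_MAP = {
    "SE-2": {
        "title": "Identity & Access Management",
        "mappings": {
            "ISO 27001": ["A.9.1-A.9.4"],
            "ISO 27701": ["9.2.1", "9.2.2", "9.2.4"],
            "SOC2": ["CC6"],
        },
    },
    "SE-6": {
        "title": "Physical Security",
        "mappings": {
            "ISO 27001": ["A.11.1-A.11.5"],
            "ISO 27701": ["11.2.1"],
            "SOC2": ["CC6.1"],
        },
    },
    "PR-1": {
        "title": "Privacy by Design and Governance",
        # ISO 27701 references are omitted here for brevity.
        "mappings": {
            "EU AI Act": ["10.5", "26.9", "27.4"],
            "SOC2": ["P1.1-P1.3"],
        },
    },
}


def controls_for_framework(control_map: dict, framework: str) -> dict[str, list[str]]:
    """Invert the map: which master controls cite a given framework, and where."""
    return {
        control_id: entry["mappings"][framework]
        for control_id, entry in control_map.items()
        if framework in entry["mappings"]
    }


def to_json(control_map: dict) -> str:
    """Serialise the map for export."""
    return json.dumps(control_map, indent=2, sort_keys=True)
```

Calling `controls_for_framework(CONTROL_MAP, "EU AI Act")` on this subset returns only the PR-1 entry, which is the kind of per-framework view a chord diagram would be built from.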

I’m making this resource available under the Creative Commons Attribution 4.0 International License (CC BY 4.0), so you can copy and redistribute it in any format you like, adapt and build upon it (even for commercial purposes), use it as part of larger works, and modify it to suit your needs. I only ask that you attribute it to The Company Ethos, so others can know where to get up-to-date versions and supporting resources.

[1] https://www.ethos-ai.org/p/ai-governance-mega-map-leadership

[2] https://csrc.nist.gov/pubs/sp/800/53/r5/upd1/final
