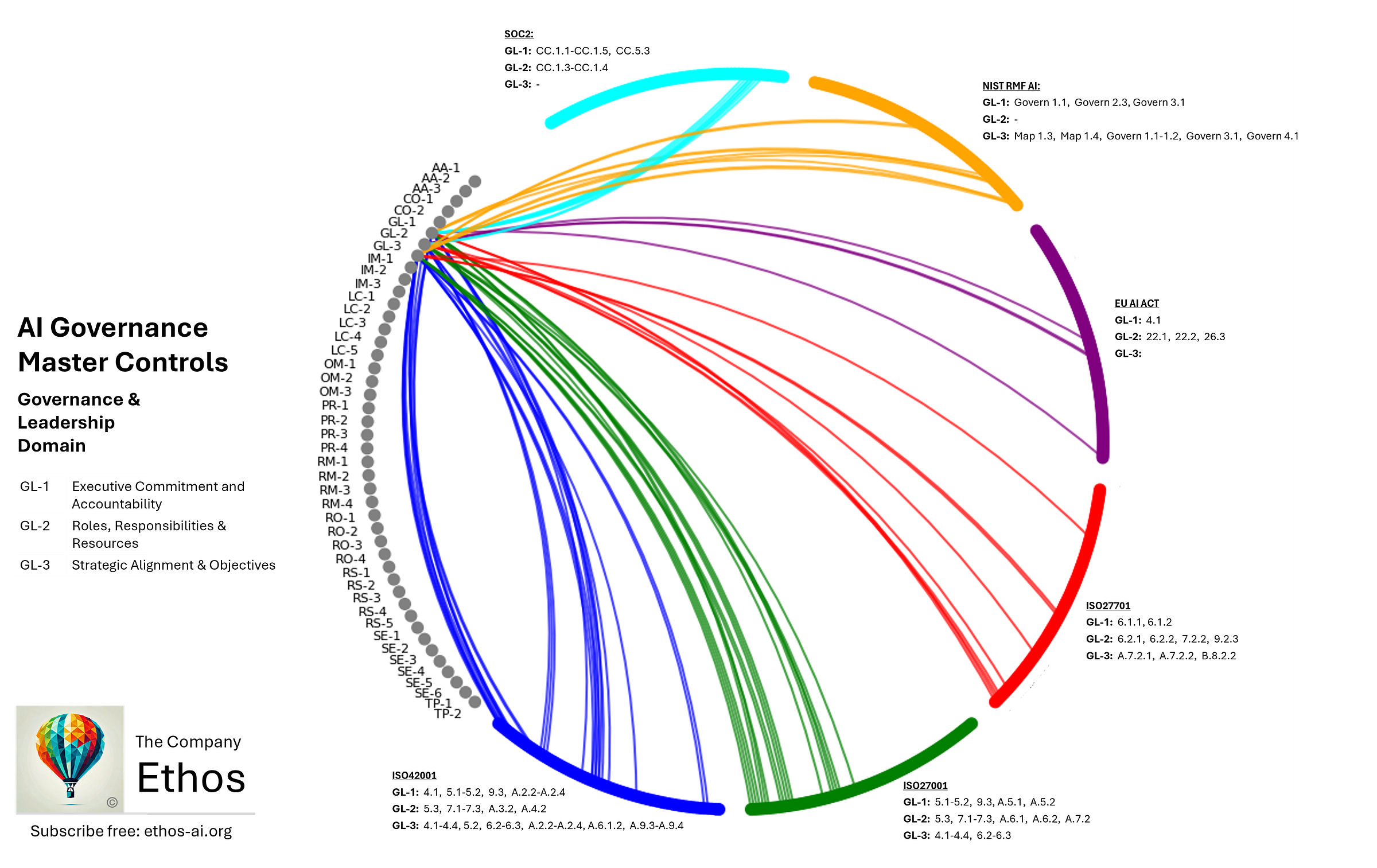

AI Governance Mega-map: Leadership, Risk Management and Regulatory Operations

Diving into the first 3 master control domains for our mega-map of AI governance compliance, drawing from ISO 42001/27001/27701, SOC 2, the NIST AI RMF and the EU AI Act

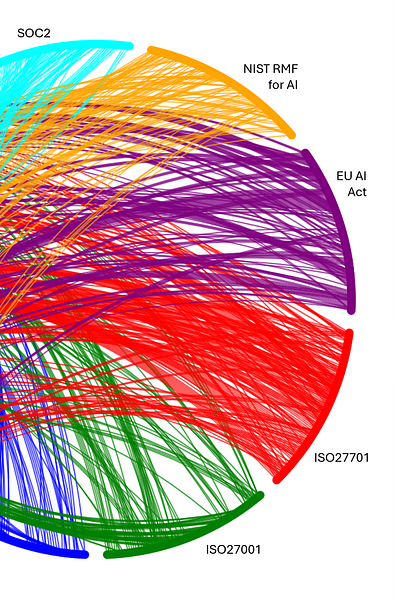

In my previous article, I went through distilling over a thousand pages from 6 frameworks, standards, and regulations into a practical set of 44 master controls across 12 essential domains. It’s a task that I believe is absolutely essential for anyone planning a sustainable controls framework for AI governance - one that avoids drowning in a sea of overlapping requirements. I was really pleased to receive such positive feedback on both the approach and my method for visualisation.

So now it's time to roll up our sleeves and dive deeper into these domains, starting with the first three that form the foundation of any robust AI governance program. We begin with Governance and Leadership, which sets the tone for everything that follows. This domain is all about making sure the ownership and accountabilities are in place. From there, we move into Risk Management, where we have controls for identifying, treating and monitoring risks.

Regulatory Operations follows, transforming complex legal requirements into practical, reliable processes. This domain is particularly crucial as organisations face an ever-evolving landscape of AI regulations. The EU AI Act may just be the first, as it continues to heavily influence emerging regulations worldwide [2].

Let's explore each of these domains in detail, understanding not just what they require, but how they work together to create a comprehensive approach to doing AI governance right - starting at the very top.

Plus, as many of you requested, I’ve attached a downloadable copy of the map at the end of the article.

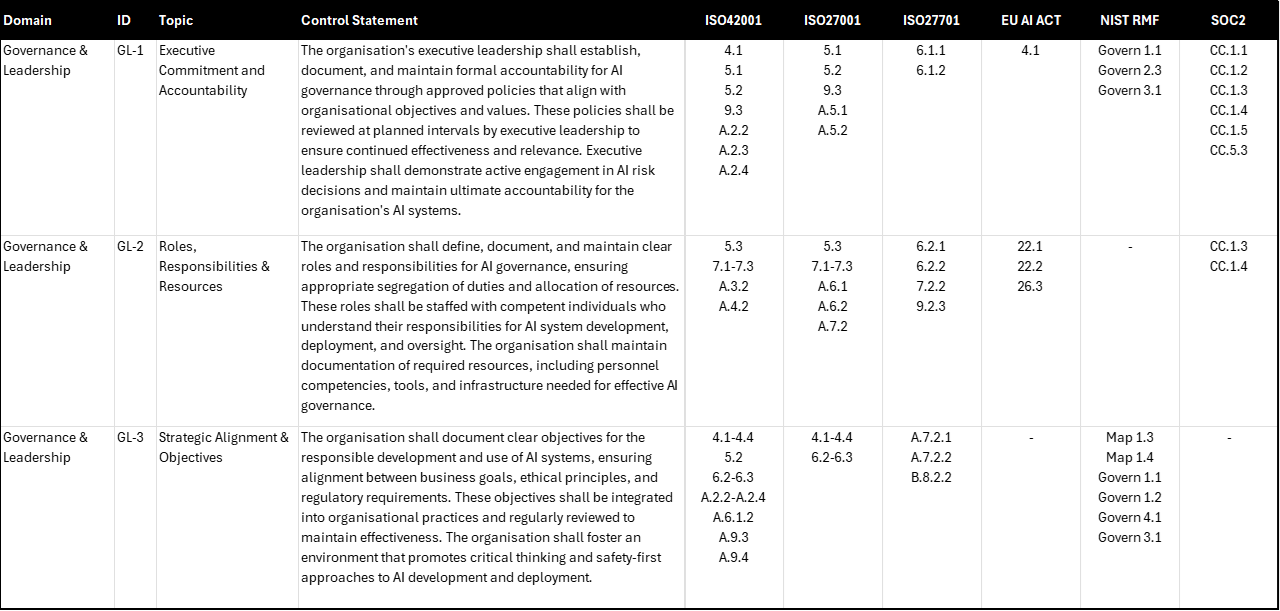

Governance & Leadership

GL-1 Executive Commitment and Accountability

The organisation's executive leadership shall establish, document, and maintain formal accountability for AI governance through approved policies that align with organisational objectives and values. These policies shall [3] be reviewed at planned intervals by executive leadership to ensure continued effectiveness and relevance. Executive leadership shall demonstrate active engagement in AI risk decisions and maintain ultimate accountability for the organisation's AI systems.

GL-2 Roles, Responsibilities & Resources

The organisation shall define, document, and maintain clear roles and responsibilities for AI governance, ensuring appropriate segregation of duties and allocation of resources. These roles shall be staffed with competent individuals who understand their responsibilities for AI system development, deployment, and oversight. The organisation shall maintain documentation of required resources, including personnel competencies, tools, and infrastructure needed for effective AI governance.

GL-3 Strategic Alignment & Objectives

The organisation shall document clear objectives for the responsible development and use of AI systems, ensuring alignment between business goals, ethical principles, and regulatory requirements. These objectives shall be integrated into organisational practices and regularly reviewed to maintain effectiveness. The organisation shall foster an environment that promotes critical thinking and safety-first approaches to AI development and deployment.

GL-1 Executive Commitment and Accountability

Executive leadership commitment is emphasised in multiple standards such as ISO/IEC 42001 (4.1, 5.1, 5.2, 9.3, A.2.2–A.2.4) and the NIST AI RMF (Govern 1.1, Govern 2.3, Govern 3.1). They highlight how critical it is to implement controls that carry executive oversight beyond paper policies and into specific, ongoing accountabilities. Notably, ISO 27001 (5.1, 5.2, 9.3, A.5.1–A.5.2) reinforces the role of leadership in setting clear direction and ensuring ongoing reviews, while SOC 2 Trust Criteria (CC.1.1–CC.1.5, CC.5.3) echo the need for documented accountability structures.

From an EU AI Act perspective, the focus on executive oversight (Article 4.1) aligns with the requirement that top management ensure AI risk decisions meet regulatory expectations. Likewise, ISO 27701 (6.1.1–6.1.2) addresses accountability for privacy-specific controls, reminding organisations that executive leaders must also be responsible for how AI systems handle personal data. By maintaining ultimate accountability, leaders can establish trust in AI governance practices and demonstrate their commitment through regular reviews, risk assessments, and visible leadership engagement in key AI decisions.
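To make the cross-framework mapping concrete, here is a minimal sketch of how a single master control and its references could be held as data and exported as JSON. The clause references are the ones cited above for GL-1 (ranges written with a plain hyphen); the dictionary layout and the `frameworks_covering` helper are illustrative assumptions, not the format of the downloadable map.

```python
import json

# References for GL-1 as cited in the text above.
# The structure itself is an illustrative assumption.
GL_1 = {
    "id": "GL-1",
    "title": "Executive Commitment and Accountability",
    "references": {
        "ISO/IEC 42001": ["4.1", "5.1", "5.2", "9.3", "A.2.2-A.2.4"],
        "ISO 27001": ["5.1", "5.2", "9.3", "A.5.1-A.5.2"],
        "ISO 27701": ["6.1.1-6.1.2"],
        "SOC 2": ["CC.1.1-CC.1.5", "CC.5.3"],
        "NIST AI RMF": ["Govern 1.1", "Govern 2.3", "Govern 3.1"],
        "EU AI Act": ["Article 4.1"],
    },
}


def frameworks_covering(control: dict) -> list[str]:
    """Return the frameworks with at least one mapped reference, sorted by name."""
    return sorted(name for name, refs in control["references"].items() if refs)


print(frameworks_covering(GL_1))
print(json.dumps(GL_1, indent=2))
```

Keeping the mapping as plain data like this makes it straightforward to ask which frameworks a control satisfies, or to spot a control that relies on only one source.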

GL-2 Roles, Responsibilities and Resources

The challenge lies in connecting high-level leadership engagement to day-to-day operations. This requires defining and enforcing clear chains of command, documented responsibilities, and resource allocation, as underscored by ISO/IEC 42001 (5.3, 7.1–7.3, A.3.2, A.4.2). ISO 27001 (5.3, 7.1–7.3, A.6.1–A.6.2, A.7.2) likewise details how roles should be assigned, while SOC 2 (CC.1.3, CC.1.4) highlights the importance of staffing those roles with competent individuals. Under the EU AI Act (Articles 22.1, 22.2, and 26.3 for high-risk AI systems), organisations must demonstrate that the right personnel and tools are in place to mitigate risks effectively, an approach also mirrored in ISO 27701 (6.2.1–6.2.2, 7.2.2, 9.2.3) for privacy-related responsibilities. It’s important to note that leadership accountability includes providing adequate resources: it’s not enough to assign accountabilities to a manager or team without also giving them sufficient resources to perform their assigned function.

To operationalise these roles, organisations should maintain documented competencies and training programs that keep teams current on both technical and ethical considerations. These controls ensure the workforce has the right expertise for AI system development, deployment, and oversight, and that infrastructure—be it computing resources, toolchains, or data pipelines—can support effective governance.

GL-3 Strategic Alignment and Objectives

Most critically, organisations need to align business objectives, ethical principles, and regulatory requirements into a coherent framework. ISO/IEC 42001 (4.1–4.4, 5.2, 6.2–6.3, A.2.2–A.2.4, A.6.1.2, A.9.3–A.9.4) and ISO 27001 (4.1–4.4, 6.2–6.3) both stress the importance of embedding objectives into broader organisational strategy, ensuring that AI innovation does not come at the expense of responsible conduct. From the NIST AI RMF perspective (Map 1.3, Map 1.4, Govern 1.1, Govern 1.2, Govern 3.1, Govern 4.1), there is a clear emphasis on incorporating AI risk management into mission and goals. Meanwhile, ISO 27701 (A.7.2.1–A.7.2.2, B.8.2.2) and the EU AI Act push for a unified approach that respects privacy, safety, and transparency obligations.

When objectives are regularly reviewed and updated, leadership can proactively adapt governance to new threats or opportunities, whether they arise from technological breakthroughs or shifts in regulatory landscapes. This aligns AI programs with evolving ethical principles (e.g., fairness, explainability) and with documented standards of practice. Integrating these (sometimes) competing demands calls for thorough documentation of risk management processes and the rationale behind trade-off decisions, fostering an environment of critical thinking and safety-first development that percolates from the front line all the way up to senior leadership.

Finally, in order to close the loop and ensure the accountability of leadership is maintained, the effectiveness of these controls needs to be tested and verified through internal assessments, external audits, and real-time monitoring—a recurring theme across all major frameworks. Although we address these requirements more specifically in the domain of Assurance and Audit, the connection back to executive leadership is necessary here via ISO/IEC 42001 9.3 and ISO 27001 9.3 which both emphasise periodic review by management of evaluations to ensure AI governance objectives remain effective. Under NIST AI RMF (Govern 5.1, 5.2), there is a need for collecting feedback from diverse stakeholders to refine and improve AI systems. By establishing continuous feedback loops, leadership can adjust governance processes based on actual performance data rather than assumptions, ensuring that policies remain aligned with both organisational goals and stakeholder expectations.

This iterative approach—where leadership oversight is grounded in solid frameworks and tested against real-world outcomes—helps organisations maintain responsible AI practices at scale and pace.

Risk Management

RM-1 Risk Management Framework and Governance

The organisation shall establish, document, and maintain a comprehensive risk management system covering the entire AI lifecycle. This system shall define clear roles, responsibilities, and processes for identifying, assessing, treating, and monitoring AI-related risks. The framework shall incorporate regular reviews by executive leadership and ensure risk management activities align with organisational risk tolerance. Risk management processes shall be transparent, documented, and appropriately resourced to maintain effectiveness.

RM-2 Risk Identification and Impact Assessment

The organisation shall conduct and document comprehensive impact assessments for AI systems, evaluating potential effects on individuals, groups, and society throughout the system lifecycle. These assessments shall consider fundamental rights, safety implications, environmental impacts, and effects on vulnerable populations. The organisation shall maintain a systematic approach to identifying both existing and emerging risks, including those from third-party components and systems.

RM-3 Risk Treatment and Control Implementation

The organisation shall implement appropriate technical and organisational measures to address identified risks, ensuring controls are proportionate to risk levels and organisational risk tolerance. Risk treatment strategies shall be documented and prioritised based on impact and likelihood, with clear accountability for implementation. The organisation shall maintain specific protocols for high-risk AI systems, including quality management systems and compliance verification processes.

RM-4 Risk Monitoring and Response

The organisation shall implement continuous monitoring processes to track the effectiveness of risk controls and identify emerging risks throughout the AI lifecycle. This shall include mechanisms for detecting and responding to previously unknown risks, regular evaluation of third-party risk exposure, and processes for incident response and recovery. The organisation shall maintain documentation of monitoring activities and ensure appropriate escalation paths for risk-related issues.

Risk management sits at the heart of responsible AI development, but traditional approaches can fall short when dealing with AI’s unique challenges. Unlike conventional software, AI systems can develop unexpected behaviours, exhibit subtle biases, or create unintended consequences that only emerge after deployment. This is why all six of our frameworks, from ISO/IEC 42001 (6.1) to the EU AI Act (Articles 9.1, 9.2), emphasise the need for specialised, lifecycle-wide risk management frameworks, though they differ in what they mean by risk. ISO standards view risk in terms of uncertainty about achieving objectives, consistent with a broad interpretation that covers both upside and downside impacts, while the EU AI Act has a narrower interpretation of risk as harm. This distinction and the practices necessary for effective risk management of AI warrant a series of articles on their own (I’ll get to those as soon as we finish walking through the control domains, so stay tuned!).

RM-1 Risk Management Framework and Governance

In any case, the core requirement is to establish a documented and comprehensive risk management system covering the entire AI lifecycle, with clear roles, responsibilities, and processes for identifying, assessing, treating, and monitoring AI-related risks. ISO 27001 (6.1) and ISO 27701 (12.2.1, A.7.2.5, A.7.2.8, B.8.2.6) highlight the importance of executive leadership direction, participation and review to ensure alignment with organisational risk tolerance. From a NIST AI RMF perspective, Govern (1.3, 1.4, 1.5) and Map 1.5 require organisations to define risk management roles and accountability, while SOC2 (CC3.1) underscores that governance structures must be transparent and well-documented to maintain effectiveness.

RM-2 Risk Identification and Impact Assessment

A key challenge in AI risk management is looking beyond purely technical or cybersecurity threats to capture broader societal and ethical impacts. ISO/IEC 42001 (6.1.1–6.1.2, 6.1.4, 8.4, A.5.2–A.5.5) and ISO 27001 (6.1.2) both call for systematic identification of risks across the AI lifecycle. This includes potential effects on individuals, groups, and society, as well as vulnerable populations. In parallel, ISO 27701 (A.7.2.5, A.7.3.10, A.7.4.4) reinforces the need to consider privacy implications in impact assessments.

On the regulatory side, the EU AI Act (Articles 9.9, 27.1) highlights assessing safety, fundamental rights, and environmental impacts, particularly for high-risk systems. Meanwhile, NIST AI RMF categories such as Map 1.1, Map 3.1, Measure 2.6, and Measure 2.8 further emphasise ongoing evaluation of biases and emergent behaviours. Finally, SOC2 (CC3.2) monitors how these assessments are consistently documented and performed with a view toward transparency and accountability.

RM-3 Risk Treatment and Control Implementation

Identifying risks is only the beginning; organisations then have to demonstrate robust treatment strategies proportionate to the level of risk. ISO/IEC 42001 (Clause 6.1.3) and ISO 27001 (Clause 6.1.3) both require defined and prioritised controls based on impact and likelihood, ensuring clear accountability for implementation. ISO 27701 (A.7.4.1, A.7.4.2, A.7.4.4, A.7.4.5) points to specific data protection measures and compliance verification processes, critical for high-risk AI systems where privacy concerns are paramount.

In the EU AI Act (Articles 8.1, 8.2, 17.1, 9.3–9.5), this risk treatment is tied to formal compliance steps, especially for high-risk AI systems requiring quality management systems. The NIST AI RMF Manage subcategories (1.2, 1.3, 1.4) recommend organisations establish a dynamic, iterative approach—rather than static checklists—so that controls can adapt to evolving threats. SOC2 (CC5.1, CC9.1) further reinforces the need for documented controls as risk treatments and the ability to demonstrate they are operating effectively over time.
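As a rough illustration of prioritising treatments by impact and likelihood with a named accountable owner, here is a minimal sketch of a risk register. The 1 to 5 scales, the multiplicative score, the tolerance threshold, and the example risks and owners are all assumptions for illustration; none of the frameworks above prescribes this particular scoring method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    impact: int      # 1 (negligible) to 5 (severe); assumed scale
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    owner: str       # person accountable for implementing the treatment

    @property
    def score(self) -> int:
        # Simple impact x likelihood product; one common convention among many.
        return self.impact * self.likelihood


def prioritise(risks: list[Risk], tolerance: int) -> list[Risk]:
    """Return risks whose score exceeds the tolerance, highest score first."""
    above = [r for r in risks if r.score > tolerance]
    return sorted(above, key=lambda r: r.score, reverse=True)


# Hypothetical register entries.
register = [
    Risk("Model drift after deployment", impact=3, likelihood=3, owner="ML Ops Lead"),
    Risk("Training data bias", impact=4, likelihood=3, owner="Head of Data Science"),
    Risk("Third-party model outage", impact=2, likelihood=2, owner="Vendor Manager"),
]

for risk in prioritise(register, tolerance=6):
    print(f"{risk.score:>2}  {risk.name}  (owner: {risk.owner})")
```

The point of the `tolerance` parameter is that treatment effort stays proportionate: anything at or below the organisation's stated tolerance is accepted and monitored rather than treated.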

RM-4 Risk Monitoring and Response

What makes AI risk management particularly challenging is the potential for drift, emergent behaviours, and unforeseen interactions with other systems. ISO/IEC 42001 (6.1.3, 8.1–8.3) and ISO 27001 (6.1.3, 8.1–8.3) highlight continuous monitoring of risk controls, while ISO 27701 (A.7.4.3, A.7.4.9, B.8.2.4, B.8.2.5, B.8.4.3) calls for evaluating ongoing compliance and third-party risk exposure. In the EU AI Act (Article 9.6), organisations must also consider how to detect and respond to new or previously unknown risks. One of the most dangerous risk practices I come across is the tendency to perform risk assessment as a one-off event: just one workshop at a point in time, followed by review, sign-off and … neglect. This control is all about monitoring and identifying risks continuously.

From the NIST AI RMF standpoint, categories like Measure 3.1, Manage 2.1, and Govern 6.1 underscore the importance of incident response feeding into adaptive risk management. These controls need to be tested and validated through a combination of automated monitoring, human oversight, and systematic review—an approach that SOC2 (CC3.4, CC9.2) endorses as well. By maintaining clear escalation paths, organisations can swiftly respond to risk-related issues and keep stakeholders informed.
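One simple guard against the one-off risk workshop is to flag any risk whose last review has gone stale. The sketch below assumes a 90-day review cadence and a hypothetical register of last-review dates; both are placeholders to be replaced by whatever interval and records your own framework defines.

```python
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=90)  # assumed cadence, not taken from any framework


def overdue_reviews(last_reviewed: dict[str, date], today: date) -> list[str]:
    """Return the names of risks whose last review is older than REVIEW_INTERVAL."""
    return sorted(
        name
        for name, reviewed in last_reviewed.items()
        if today - reviewed > REVIEW_INTERVAL
    )


# Hypothetical last-review dates.
last_reviewed = {
    "Training data bias": date(2025, 1, 10),
    "Model drift after deployment": date(2025, 5, 2),
}

print(overdue_reviews(last_reviewed, today=date(2025, 6, 1)))
```

A list like this is only useful if it feeds an escalation path, for example a standing agenda item for the risk owner's management review.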

Regulatory Operations

RO-1 Regulatory Compliance Framework

The organisation shall establish, document, and maintain a comprehensive framework for ensuring compliance with applicable AI regulations and standards. This framework shall include processes for identifying relevant requirements, assessing applicability, implementing necessary controls, and verifying ongoing compliance. The organisation shall maintain systematic processes for tracking and implementing new regulatory requirements, conducting conformity assessments, maintaining necessary certifications, and ensuring timely renewal of compliance documentation. Special attention shall be given to high-risk AI system requirements and prohibited practices. The organisation shall implement processes to track changes that may affect compliance status and maintain evidence of continued conformity with legal obligations.

RO-2 Transparency, Disclosure and Reporting

The organisation shall implement mechanisms to ensure appropriate transparency regarding AI systems, including clear notification of AI use, disclosure of automated decision-making, and communication of significant system changes. The organisation shall establish and maintain processes for reporting incidents, safety issues, and non-compliance to relevant authorities and affected stakeholders. This shall include clear procedures for incident detection, assessment, notification timelines, and follow-up actions.

RO-3 Record-Keeping

The organisation shall maintain comprehensive documentation and records demonstrating compliance with AI regulatory requirements. This shall include technical documentation, conformity assessments, impact analyses, test results, and evidence of ongoing monitoring. The organisation shall establish retention periods aligned with regulatory requirements, implement secure storage systems, and ensure documentation remains accessible to authorised parties throughout required retention periods.

RO-4 Post-Market Monitoring

The organisation shall implement comprehensive post-market monitoring systems for deployed AI systems, including mechanisms for tracking performance, identifying issues, and implementing corrective actions. This shall include processes for reporting incidents to relevant authorities, maintaining required documentation, and conducting periodic reviews of monitoring system performance. The organisation shall ensure appropriate escalation paths exist for identified issues and maintain clear procedures for implementing necessary corrective actions.

Regulatory operations is a domain that sits at the intersection of technical innovation and legal scrutiny, where you have to translate the complex capabilities of AI systems into clear, documented practices. Each of the six frameworks, from ISO/IEC 42001 to the EU AI Act, underscores that effective regulatory operations require both precision in documentation, to meet rigorous legal standards, and the agility to keep pace with rapid technological advances. Easier said than done!

RO-1: Regulatory Compliance Framework

A robust regulatory compliance framework is the foundation upon which all other regulatory operations rest. ISO/IEC 42001 sets out a management system approach to AI following the successful precedents set by ISO 27001 (A.18.1) and ISO 27701 (18.2.1, A.7.2.1–A.7.2.4, B.8.2.1–B.8.2.2, B.8.2.4–B.8.2.5). These outline methods for identifying applicable regulations, assessing their impact, and ensuring conformity through systematic controls. Unsurprisingly, the EU AI Act is a dominant influence in this domain. In the Act (Articles 5.1, 5.2, 6.1–6.4, 8.1–8.2, 40.1, 41.1, 42.1, 43.1–43.4, 44.2–44.3, 47.1–47.4, 49.1–49.3), special attention is given to high-risk AI systems and prohibited practices, reinforcing, among other things, the need for a continuous process of registration, compliance tracking and renewal of certifications.

In the NIST AI RMF, Govern 1.1 and Map 4.1 emphasise establishing a structured governance process that monitors evolving requirements, ensuring that compliance is not a one-time exercise but an ongoing responsibility. SOC 2 (CC1.5) also talks about continuous oversight, mandating that organisations maintain evidence of controls and systematically verify their ongoing effectiveness. Together, these requirements illustrate the need for a living regulatory compliance framework, one capable of adapting to new obligations without compromising innovation.

RO-2: Transparency, Disclosure, and Reporting

Regulators increasingly demand transparency around AI systems, including clear disclosure of automated decision-making and significant system changes. ISO/IEC 42001 (A.8.3, A.8.5) highlights the importance of structured communication channels for these purposes, while ISO 27001 (A.6.3) supports formal processes for notifying relevant parties. In ISO 27701 (6.2.3, A.7.3.2–A.7.3.3, A.7.3.8–A.7.3.9, A.7.5.3–A.7.5.4, B.8.5.3–B.8.5.6), the focus expands to privacy-specific disclosures and stakeholder reporting.

Under the EU AI Act (Articles 20.1–20.2, 50.1–50.5, 60.7–60.8, 86.1–86.3), organisations must provide clear notification of AI usage, especially when decisions carry legal, financial or similarly significant effects related to the fundamental rights of citizens. NIST AI RMF categories (Govern 6.1, Map 4.1) outline the need for consistent processes to communicate system updates or issues to both regulators and affected individuals. Meanwhile, SOC2 (CC2.3, P1.1–P1.3) underscores that transparency isn’t merely a policy statement—it’s a documented practice that demonstrates how and why decisions are made. This combination of requirements underscores the need for strong mechanisms to manage notifications, disclosures, and incident reporting.

RO-3: Record-Keeping

Regulatory operations hinge on the ability to produce verifiable records that demonstrate compliance and decision-making rationales. ISO/IEC 42001 provides implicit overarching guidance on record keeping, but ISO 27001 (A.7.2) focuses on the security and accessibility of these records. ISO 27701 (8.2.3, A.7.2.8, A.7.3.1, A.7.4.3, A.7.4.6–A.7.4.8, B.8.2.6, B.8.4.1–B.8.4.2) highlights retention policies and secure storage solutions, ensuring that data is protected throughout its lifecycle and remains accessible for audits or regulatory inquiries.

The EU AI Act (Articles 11.1, 11.3, 18.1, 19.1–19.2, 71.2–71.3) requires organisations to maintain evidence of system performance, risk assessments, and any measures taken to address non-compliance. NIST AI RMF references (Map 4.1, Measure 2.12) further detail how to structure documentation to capture both technical and ethical considerations in AI development. SOC 2 (P3.1–P3.3) adds that these records should be secured and tamper-evident, ensuring that authorised parties can rely on them for an accurate picture of an AI system’s compliance status over time.
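To give a feel for what tamper-evident can mean in practice, here is a minimal sketch of a hash-chained record log, where each entry's hash covers its payload and the previous entry's hash. This is one common technique, not something any of the frameworks mandates; the function names and the example events are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous hash together with a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_record(log: list[dict], payload: dict) -> None:
    """Append a record that is chained to the record before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"payload": payload, "prev_hash": prev_hash, "hash": _digest(prev_hash, payload)}
    )


def verify(log: list[dict]) -> bool:
    """Recompute every hash; an edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["payload"]):
            return False
        prev_hash = record["hash"]
    return True


log: list[dict] = []
append_record(log, {"control": "RO-3", "event": "conformity assessment filed"})
append_record(log, {"control": "RO-3", "event": "test results archived"})
print(verify(log))  # True for an untouched log

log[0]["payload"]["event"] = "edited after the fact"
print(verify(log))  # False once a record has been altered
```

Note that a chain like this detects edits but not the deletion of the most recent records, so the latest hash would also need to be stored somewhere the record keeper cannot change.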

RO-4: Post-Market Monitoring

The most challenging aspects of regulatory operations often come after deployment. AI systems can drift, develop unexpected behaviours, or interact with real-world data in ways that create novel risks. ISO/IEC 42001 (A.8.3) recognises this need for ongoing vigilance, while ISO 27701 (6.2.3, A.7.3.6–A.7.3.7, A.7.3.10, A.7.4.3, B.8.3.1, B.8.5.7–B.8.5.8) addresses continuous monitoring for privacy-related incidents and system misconfigurations.

Under the EU AI Act (Articles 72.1–72.4, 79.4, 80.4–80.5), organisations must implement processes for post-market monitoring, including incident detection, reporting, and corrective action—particularly for high-risk AI systems. The NIST AI RMF (Govern 6.1, Measure 2.12) encourages proactive detection of issues and quick escalation to stakeholders and authorities. Meanwhile, SOC2 (CC1.5) ensures that post-market monitoring is regarded as an integral part of broader compliance. Effective post-market oversight thus closes the loop, allowing organisations to refine systems, address risks, and maintain a continuous cycle of improvement.

We cover Operational Monitoring as a separate domain, primarily because in most organisations it would be handled by a very different team, but this shows how the domains are connected. The lawyers and assurance professionals who handle regulatory operations need to stay in close collaboration with the engineers and incident responders who detect reportable incidents.

In exploring these first three domains, we've laid the essential groundwork for effective AI governance - from the top-down accountability of leadership through the systematic approach of risk management to the demands of regulatory operations. But this is just groundwork. In my next article, I'll dive into the more technical domains of AI governance – examining how security, privacy, and responsible AI practices come together to protect both systems and stakeholders. These domains build directly upon the foundation we've established, showing how high-level oversight translates into concrete technical safeguards.

A great deal of care needs to be taken in the design and implementation of these controls so that we don’t end up with a facade of beautiful documents that are unworkable in practice. I have in mind a series of articles going through these real-world practices, and will share those articles as soon as I can.

In the meantime, please do subscribe for more detail on the master controls and these upcoming insights. I'd love to hear your thoughts and experiences with implementing these foundations.

Full Control Map Download

You can download the controls mega-map as an Excel spreadsheet below. In the final article of this series, though, I’ll share a full open-source repository of charts, mappings in JSON and OSCAL format, and Python code so that you can modify this spreadsheet to your own needs, expand the frameworks included, generate mappings for export, and create chord diagrams like the ones I’ve provided here. Please do subscribe, so I can notify you of that release.

I’m making this resource available under the Creative Commons Attribution 4.0 International License (CC BY 4.0), so you can copy and redistribute it in any format you like, adapt and build upon it (even for commercial purposes), use it as part of larger works, and modify it to suit your needs. I only ask that you attribute it to The Company Ethos, so others can know where to get up-to-date versions and supporting resources. Thank you.

[2] https://www.onetrust.com/blog/south-koreas-new-ai-law-what-it-means-for-organizations-and-how-to-prepare/

[3] As a sidenote, you’ll notice that these control statements contain multiple ‘shall’ imperatives. In a real implementation, you will likely break each statement into sub-controls (like GL-1-1, GL-1-2, etc.), so that each control has only a single ‘shall’, but for the sake of simplicity in this analysis, I keep them together. Audit purists will tell you to have only one imperative per control, and they’re right!
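As a rough sketch of that sub-control split, the snippet below breaks the GL-1 statement into one numbered sub-control per sentence containing ‘shall’. The `split_into_subcontrols` helper and its sentence-level splitting rule are assumptions for illustration; a sentence that packs several verbs under one ‘shall’ (such as "establish, document, and maintain") would still need a human decision on whether to split further.

```python
import re

# GL-1 control statement, copied from the Governance & Leadership section.
GL_1 = (
    "The organisation's executive leadership shall establish, document, and "
    "maintain formal accountability for AI governance through approved policies "
    "that align with organisational objectives and values. These policies shall "
    "be reviewed at planned intervals by executive leadership to ensure continued "
    "effectiveness and relevance. Executive leadership shall demonstrate active "
    "engagement in AI risk decisions and maintain ultimate accountability for the "
    "organisation's AI systems."
)


def split_into_subcontrols(control_id: str, statement: str) -> dict[str, str]:
    """Give each sentence containing 'shall' its own sub-control ID."""
    sentences = re.split(r"(?<=\.)\s+", statement.strip())
    imperatives = [s for s in sentences if re.search(r"\bshall\b", s)]
    return {f"{control_id}-{i}": s for i, s in enumerate(imperatives, start=1)}


for sub_id, text in split_into_subcontrols("GL-1", GL_1).items():
    print(sub_id, "->", text)
```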
