AI Governance Mega-map: Assurance & Audit, Third-Party Suppliers and Transparency

The final control domains for assurance, third-party suppliers and transparency, concluding our mega-map of AI governance drawing on ISO 42001/27001/27701, SOC2, the NIST AI RMF and the EU AI Act.

We’re into the final chapter in our exploration of AI governance master controls. Through this series, I've mapped the complex terrain of responsible AI development, breaking down over a thousand pages of frameworks, standards and regulations into twelve essential domains with practical, implementable controls. From leadership accountability and risk management to responsible AI practices and incident response, we've built a comprehensive picture of what effective AI governance looks like in action. If you missed any of the articles, you can start at the beginning here:

In this final instalment, I'll complete our journey by examining the three remaining domains that form crucial connections between your organisation and the broader ecosystem: Assurance & Audit, Third Party & Supply Chain, and Transparency & Communication. These domains represent the bridges that connect your internal governance with external stakeholders, ensuring your AI systems earn trust not just through intention, but through verification, responsible partnerships, and clear communication.

These final domains close the loop on our governance framework, creating mechanisms to verify that controls are working as intended, extending accountability throughout your supply chain, and establishing the transparent communication necessary for building trust with users, customers, and regulators. As AI systems grow more complex and more deeply embedded in critical functions, these domains become essential safeguards that help maintain alignment between AI capabilities and human values.

Many of you have asked for the tools I've been using to create these control mapping visualisations, and I'm happy to share that I've published everything as an open-source resource. You can now find the complete repository on GitHub at github.com/ethos-ai/governance-mapping-tools.

The repository includes the full mapping data that connects all 44 master controls to major frameworks like ISO 42001, the EU AI Act, NIST AI RMF, and others. I've also included the Python code that generates the chord diagrams you've seen throughout this series, making it easy to visualise the relationships between controls and frameworks. The beauty of having this as open source is that you can customise the mappings for your organisation's specific needs – perhaps you're working with additional frameworks or want to focus on particular control areas that matter most to your context. I share the links at the end of this article.

So let's dive into these final building blocks of comprehensive AI governance, examining how they work together to create AI systems that aren't just powerful, but worthy of the trust we place in them.

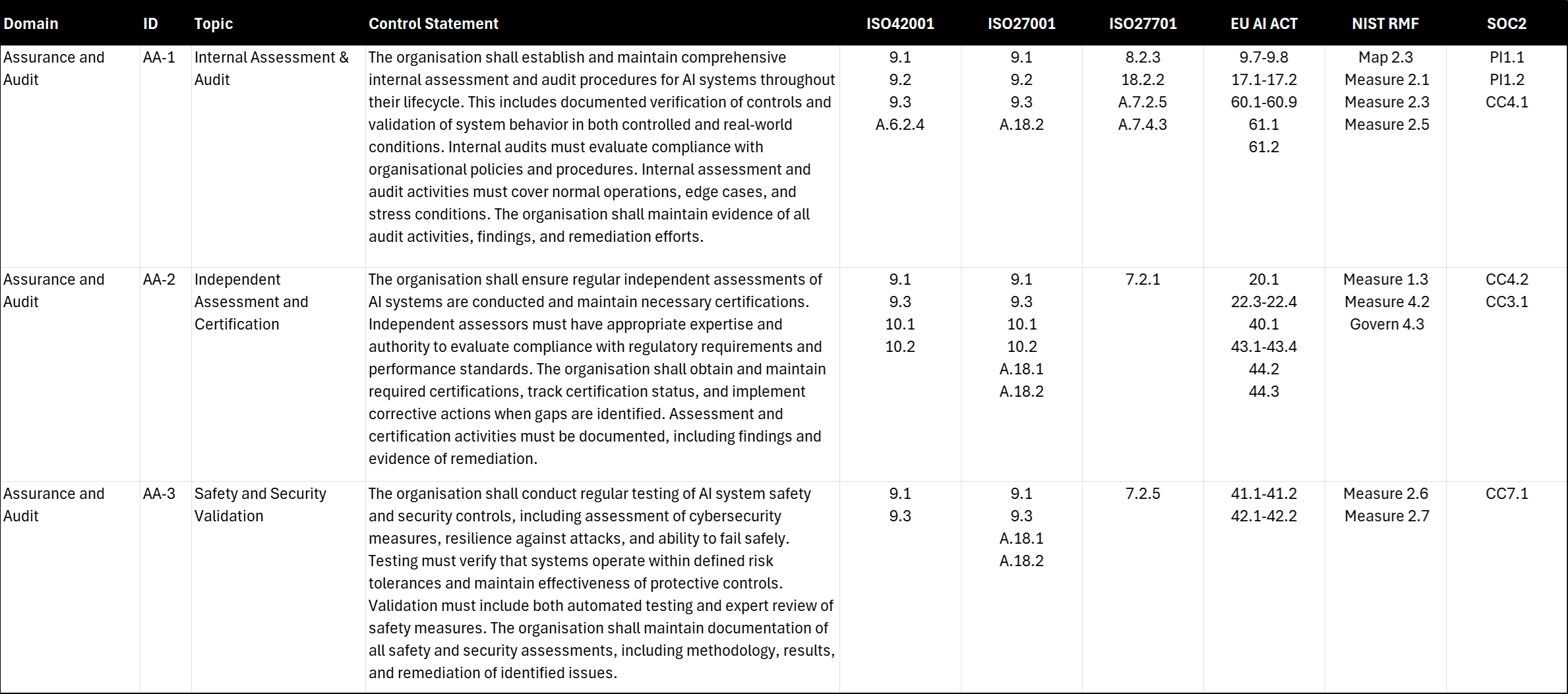

Assurance and Audit

AA-1 Internal Assessment & Audit

The organisation shall establish and maintain comprehensive internal assessment and audit procedures for AI systems throughout their lifecycle. This includes documented verification of controls and validation of system behavior in both controlled and real-world conditions. Internal audits must evaluate compliance with organisational policies and procedures. Internal assessment and audit activities must cover normal operations, edge cases, and stress conditions. The organisation shall maintain evidence of all audit activities, findings, and remediation efforts.

AA-2 Independent Assessment and Certification

The organisation shall ensure regular independent assessments of AI systems are conducted and maintain necessary certifications. Independent assessors must have appropriate expertise and authority to evaluate compliance with regulatory requirements and performance standards. The organisation shall obtain and maintain required certifications, track certification status, and implement corrective actions when gaps are identified. Assessment and certification activities must be documented, including findings and evidence of remediation.

AA-3 Safety and Security Validation

The organisation shall conduct regular testing of AI system safety and security controls, including assessment of cybersecurity measures, resilience against attacks, and ability to fail safely. Testing must verify that systems operate within defined risk tolerances and maintain effectiveness of protective controls. Validation must include both automated testing and expert review of safety measures. The organisation shall maintain documentation of all safety and security assessments, including methodology, results, and remediation of identified issues.

Assurance and Audit in the realm of AI goes well beyond traditional software verification or conventional annual audit cycles, demanding that organisations develop new methodologies and expertise to validate systems whose outputs are inherently probabilistic and whose behaviours may evolve over time. Frameworks such as ISO 42001, ISO 27001, ISO 27701, the EU AI Act, NIST AI RMF, and SOC2 converge on the principle that AI assurance must incorporate both rigorous technical evaluations and a broader consideration of societal impacts, ensuring that AI systems remain trustworthy, compliant, and aligned with organisational objectives throughout their lifecycle.

This requires a multi-layered approach that addresses the inherent uncertainty of machine learning models, the complexity of data pipelines, and the potential for significant societal impact. By implementing robust internal assessments (AA-1), engaging independent evaluators (AA-2), and conducting specialised safety and security validations (AA-3), organisations can gain confidence in their AI systems’ reliability, compliance, and alignment with ethical principles. High-integrity assurance isn’t just a box-ticking exercise but a meaningful process of verification and continuous improvement—empowering AI deployments that are both innovative and responsibly managed.

AA-1: Internal Assessment & Audit

Internal assessment is a first line of defence, providing an ongoing check on AI systems’ adherence to policies, controls, and ethical standards. ISO 42001 (9.1, 9.2, 9.3, A.6.2.4) emphasises continuous verification of AI behaviours in both controlled and real-world conditions, making sure that audits go beyond one-time checks to accommodate the adaptive nature of AI. ISO 27001 (9.1, 9.2, 9.3, A.18.2) complements this by detailing how organisations can integrate AI-specific assessments into broader information security audits. ISO 27701 (8.2.3, 18.2.2, A.7.2.5, A.7.4.3) extends these principles to privacy considerations, requiring that internal audits verify compliance with data protection requirements throughout the AI lifecycle. The EU AI Act (9.7–9.8, 17.1–17.2, 60.1–60.9, 61.1, 61.2) particularly focuses on how high-risk AI systems must have mandatory auditing of operational practices and safety assessment of model behaviour. NIST AI RMF (Map 2.3, Measure 2.1, Measure 2.3, Measure 2.5) underscores how these internal assessments should incorporate both technical and societal dimensions, while SOC2 (PI1.1, PI1.2, CC4.1) requires that all findings, remediation actions, and evidence be documented in a manner that is auditable and transparent. This may involve targeted assessments by specialists, or internal cross-functional teams conducting regular “checkpoints” that test everything from data integrity to model bias, documenting any deviations and driving timely remediation.
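One way to keep checkpoint findings auditable is a small findings register. The sketch below is illustrative only: the `Finding` fields, the two helper functions, and the sample entries are all assumptions made for this example, not requirements taken from any of the frameworks.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Finding:
    checkpoint: str                 # e.g. "data integrity" or "model bias"
    description: str
    opened: date
    remediation_due: date
    closed: Optional[date] = None
    evidence: List[str] = field(default_factory=list)  # links or file references


def overdue(findings, today):
    """Open findings whose remediation date has passed."""
    return [f for f in findings if f.closed is None and f.remediation_due < today]


def missing_evidence(findings):
    """Closed findings with no recorded evidence of remediation."""
    return [f for f in findings if f.closed is not None and not f.evidence]


# Illustrative entries only; not real audit data.
register = [
    Finding("model bias", "Approval-rate gap above tolerance",
            date(2025, 1, 10), date(2025, 2, 10)),
    Finding("data integrity", "Unexpected nulls in training feed",
            date(2025, 1, 5), date(2025, 1, 20), closed=date(2025, 1, 18)),
]
```

Calling `overdue(register, today)` at each checkpoint surfaces remediation that has slipped, and `missing_evidence(register)` catches findings that were closed without anything an auditor could later verify.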

AA-2: Independent Assessment and Certification

Recognising that internal assessments and audits alone may not suffice, especially for high-stakes AI systems, the frameworks place particular emphasis on external, independent assessments. ISO 42001 (9.1, 9.3, 10.1, 10.2) calls for objective evaluations by assessors with the authority and expertise to validate compliance against regulatory requirements and industry best practices, and the supporting ISO 42006 standard describes how that should be performed. ISO 27001 (9.1, 9.3, 10.1, 10.2, A.18.1, A.18.2) aligns with this by detailing how external certifications can confirm the effectiveness of an organisation’s overall security posture. ISO 27701 (7.2.1) extends these requirements to data privacy, ensuring that assessors consider both technical and privacy-related controls. Under the EU AI Act (20.1, 22.3–22.4, 40.1, 43.1–43.4, 44.2, 44.3), certain AI systems, particularly those deemed high-risk, must undergo mandatory conformity assessments, and organisations must document their certification status and any corrective actions taken to address identified gaps. It remains an open question which standard will apply to these conformity assessments [1]. NIST AI RMF (Measure 1.3, Measure 4.2, Govern 4.3) offers guidelines for robust external evaluations that account for model performance, data governance, and ethical considerations. Meanwhile, SOC2 (CC4.2, CC3.1) highlights how these independent assessments must encompass the entire AI operating environment, from data pipelines to incident response capabilities. These requirements are most likely addressed by engaging accredited assessors to perform on-site reviews, model evaluations, and documentation checks, resulting in certifications or attestations that validate the organisation’s adherence to responsible AI practices.

AA-3: Safety and Security Validation

Safety and security validation take on new dimensions in AI, as systems can fail in ways that traditional software security testing might not detect—such as data poisoning, adversarial inputs, or subtle performance degradation. ISO 42001 (9.1, 9.3) highlights the need for regular testing to confirm that AI systems remain within defined risk tolerances. ISO 27001 (9.1, 9.3, A.18.1, A.18.2) provides a baseline for evaluating the robustness of technical controls, and ISO 27701 (7.2.5) adds a privacy lens to these assessments, ensuring that data handling procedures meet relevant legal and ethical standards. The EU AI Act (41.1–41.2, 42.1–42.2) specifically mandates evaluations that address AI-specific threats, such as adversarial manipulation or drift, while NIST AI RMF (Measure 2.6, Measure 2.7) provides guidance on testing model resilience, analysing failure modes, and verifying that safety controls operate effectively under stress. SOC2 (CC7.1) reinforces that these validations must be documented, with clear evidence of testing methodologies, results, and remediation actions. In practice, this might involve specialised testing frameworks that simulate adversarial attacks or environmental changes, combined with expert reviews to confirm that systems can “fail safely” and that protective measures remain effective.
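To make "remaining within defined risk tolerances" concrete for the drift case, here is a minimal sketch using the Population Stability Index (PSI), one common drift statistic. It is a technique I am substituting for illustration, not one prescribed by the frameworks. The bin edges, the `1e-6` floor, and the `0.2` tolerance are assumptions, and adversarial testing would need separate tooling.

```python
import math


def bin_shares(values, edges, floor=1e-6):
    """Share of values in each bin defined by sorted edges, floored to avoid log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # The bin index is the number of edges the value has reached or passed.
        counts[sum(v >= e for e in edges)] += 1
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    b = bin_shares(baseline, edges)
    c = bin_shares(current, edges)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def within_tolerance(baseline, current, edges, limit=0.2):
    """True when measured drift stays at or below the assumed tolerance."""
    return population_stability_index(baseline, current, edges) <= limit
```

A check like `within_tolerance(training_scores, live_scores, edges)` could run on a schedule, with a `False` result triggering the expert review and fail-safe behaviour described above.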

Third Party & Supply Chain

TP-1 Third-Party Provider Responsibilities

The organisation shall establish clear accountability for AI systems when working with third parties, distributors, importers, or suppliers. This includes documenting responsibilities when AI systems are modified or repurposed, ensuring proper handover of obligations between parties, and maintaining evidence of agreed responsibilities. The organisation must obtain necessary information and technical access from third-party suppliers to ensure regulatory compliance, while respecting confidentiality and intellectual property rights.

TP-2 Supplier Risk Management

The organisation shall implement comprehensive processes to identify, assess, manage, and monitor risks associated with third-party AI suppliers and service providers throughout the engagement lifecycle. This includes evaluating supplier capabilities during selection, establishing security and privacy requirements in supplier agreements, maintaining contingency plans for critical third-party dependencies, and implementing continuous monitoring of supplier performance and compliance. The organisation shall regularly assess supplier adherence to established requirements, including security standards, privacy requirements, and service level agreements. Performance monitoring must include collection and evaluation of feedback, documentation of monitoring results, and implementation of appropriate actions when issues are identified. Regular reviews of supplier risk assessments and performance metrics shall inform decisions about continuing or modifying supplier relationships.

Third Party & Supply Chain management for AI systems represents a new frontier where the complexities of artificial intelligence intersect with traditional vendor oversight. Unlike conventional software procurement, where functionality is often well-defined, AI systems can involve multiple components (models, data pipelines, and infrastructure) sourced from various providers, each bringing unique risks. The frameworks (ISO 42001, ISO 27001, ISO 27701, the EU AI Act, NIST AI RMF, and SOC2) all recognise that organisations must adopt more sophisticated processes to maintain clear lines of accountability, evaluate AI-specific capabilities, and continuously monitor supplier performance throughout the AI lifecycle. This calls for a more engaged approach than traditional vendor oversight: clearly allocated provider responsibilities (TP-1) and robust supplier risk management (TP-2) that runs continuously rather than as an annual fire-and-forget exercise.

TP-1: Third-Party Provider Responsibilities

AI systems rarely operate in isolation; they often integrate models, datasets, and services from third-party vendors, distributors, or importers. ISO 42001 (A.10.2) underscores the need for clearly documented responsibilities when AI systems are modified, repurposed, or handed off between parties, ensuring that obligations—such as regulatory compliance and risk management—remain transparent. ISO 27001 (A.15.1) provides a foundational framework for defining contractual obligations, while ISO 27701 (15.2.1, A.7.2.6–A.7.2.7, B.8.5.6–B.8.5.8) extends these obligations to data protection and privacy considerations. Under the EU AI Act (25.1–25.4), importers and distributors must assume specific responsibilities for ensuring that AI systems they place on the market comply with relevant requirements. NIST AI RMF (Map 4.1, Govern 6.1) advises organisations to perform a “supply chain decomposition,” mapping out each third-party component and documenting how accountability transfers among them. Meanwhile, SOC2 (CC2.3) highlights that contractual frameworks must respect confidentiality and intellectual property rights without sacrificing the ability to validate compliance and manage risks. Satisfying this control may involve detailed service-level agreements that specify both the technical access needed to assess AI components and the obligations each party holds for system integrity and ethical operation. It will likely also require ongoing engagement and a sustained direct relationship.
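A supply chain decomposition can start as a simple inventory that records, for every third-party component, who is accountable and where that is written down. The sketch below assumes a hypothetical `Component` record and made-up vendor names; none of it comes from the frameworks themselves.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Component:
    name: str
    kind: str                          # "model", "dataset", "service", ...
    supplier: str
    accountable_party: Optional[str]   # who holds the compliance obligation
    agreement_ref: Optional[str]       # contract or SLA clause that records it


def unassigned(components):
    """Components where nobody has been named as accountable."""
    return [c.name for c in components if not c.accountable_party]


def undocumented(components):
    """Components with a named owner but no agreement recording the handover."""
    return [c.name for c in components if c.accountable_party and not c.agreement_ref]


# Hypothetical inventory for illustration.
inventory = [
    Component("base language model", "model", "Vendor A", "Vendor A", "MSA clause 7.2"),
    Component("fine-tuning dataset", "dataset", "Vendor B", "Our organisation", None),
    Component("moderation API", "service", "Vendor C", None, None),
]
```

Here `unassigned(inventory)` and `undocumented(inventory)` give a short, reviewable list of the accountability gaps to close before the next contract renewal or system change.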

TP-2: Supplier Risk Management

Managing AI supply chains requires more than a simple vendor checklist; organisations must continuously evaluate the evolving risks introduced by third-party AI providers. ISO 42001 (A.10.3) calls for comprehensive processes to identify, assess, and mitigate these risks, ensuring that suppliers align with the organisation’s governance objectives. ISO 27001 (A.15.1, A.15.2) builds upon this by detailing how security and privacy requirements should be embedded in supplier agreements and by emphasising the importance of contingency plans for critical dependencies. ISO 27701 (15.2.1, A.7.2.6, A.7.5.1–A.7.5.2, B.8.5.1–B.8.5.2) applies these considerations to privacy-specific controls, ensuring that personal data used or processed by third parties remains protected. The EU AI Act (25.4) describes how all actors in the supply chain, from developers to deployers, share responsibility for compliance. NIST AI RMF (Govern 5.1, Manage 3.1, Govern 6.1, Govern 6.2) provides guidance on ongoing supplier assessments, from initial capability evaluations to continuous monitoring of performance and compliance. SOC2 (CC9.2) completes the picture by mandating documentation of supplier performance metrics, risk assessments, and decision-making around supplier relationships. In practice, an organisation might establish a dashboard to track key performance indicators, review logs for suspicious model behaviour, and regularly reassess suppliers’ adherence to security, privacy, and ethical standards.
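The logic behind such a dashboard can be quite small. As a rough sketch, the code below compares each supplier's reported metrics against agreed thresholds and queues any breaches for review. The metric names and threshold values are hypothetical; real ones would come from your supplier agreements.

```python
# Hypothetical thresholds; real values would come from the supplier agreement.
REQUIREMENTS = {
    "uptime_pct": ("min", 99.5),
    "incident_response_hours": ("max", 24),
    "days_since_security_attestation": ("max", 365),
}


def breaches(metrics, requirements=REQUIREMENTS):
    """Names of metrics that are missing or outside their threshold."""
    failed = []
    for name, (kind, limit) in requirements.items():
        value = metrics.get(name)
        if value is None:
            failed.append(name)          # unreported metrics count as breaches
        elif kind == "min" and value < limit:
            failed.append(name)
        elif kind == "max" and value > limit:
            failed.append(name)
    return failed


def review_queue(suppliers):
    """Suppliers with at least one breach, most breaches first."""
    flagged = ((name, breaches(metrics)) for name, metrics in suppliers.items())
    return sorted(
        (item for item in flagged if item[1]),
        key=lambda item: (-len(item[1]), item[0]),
    )
```

Treating a missing metric as a breach is a deliberate choice in this sketch: a supplier that stops reporting is itself a monitoring finding.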

Transparency & Communication

CO-1 AI System Transparency

The organisation shall ensure AI systems are designed and operated with appropriate transparency, enabling users and affected individuals to understand when they are interacting with AI, how the AI system impacts them, and what the system's capabilities and limitations are. This includes clear marking of AI-generated content, disclosure of automated decision-making, and provision of comprehensive documentation about system performance and intended use. The organisation must maintain accessibility standards in all transparency communications, provide explanations of AI-driven decisions when required by law, and ensure instructions and documentation are clear and accessible to intended audiences.

CO-2 Stakeholder Engagement and Feedback

The organisation shall implement comprehensive stakeholder engagement processes to collect, evaluate, and respond to feedback about AI system impacts and performance. This includes establishing mechanisms for regular stakeholder consultation, incorporating feedback into system improvements, and maintaining documentation of stakeholder engagement activities. The organisation must consider diverse perspectives, ensure feedback processes are accessible to all affected communities, and demonstrate how stakeholder input influences system development and operation. Regular reporting to stakeholders about system performance, impacts, and improvements must be maintained.

Transparency & Communication in AI systems goes far beyond merely listing features or providing usage documentation. Because AI decisions often involve probability-based reasoning and adaptive learning, organisations need to communicate not only what their systems do, but how and why they operate as they do. Frameworks such as ISO 42001, ISO 27001, ISO 27701, the EU AI Act, NIST AI RMF, and SOC2 emphasise that transparent communication fosters trust, clarifies responsibilities, and ensures that diverse stakeholders—from end users to regulators—can understand AI systems’ capabilities, limitations, and potential impacts. By establishing AI system transparency (CO-1) and engaging in active stakeholder feedback (CO-2), organisations can build an environment of trust and mutual understanding around their AI deployments.

CO-1: AI System Transparency

Effective transparency demands that organisations clearly mark AI-generated content, disclose automated decision-making, and provide accessible explanations of system functionality and performance. ISO 42001 (7.4, A.6.2.7, A.8.2, A.9.3, A.9.4) calls for documentation that goes beyond technical specs to address how AI decisions may affect users and affected communities. ISO 27001 (7.4, A.6.4) provides a baseline for communicating security controls, while ISO 27701 (A.7.3.2, A.7.3.3, A.7.3.10, B.8.2.3) extends transparency to privacy considerations, requiring organisations to inform individuals about how personal data is used in AI processes. The EU AI Act (13.1–13.3, 15.3, 50.1–50.5, 86.1–86.3) underscores the need for clear labelling of AI interactions and detailed explanations of AI decisions in high-risk contexts. NIST AI RMF (Map 1.2, Measure 4.1) advocates for “layered transparency,” providing different levels of detail for various stakeholders, ranging from high-level summaries for end users to in-depth technical data for auditors. Meanwhile, SOC2 (CC2.2) ensures that all transparency measures are documented and auditable, making it possible to verify that users receive meaningful disclosures. In production, satisfying this control might involve, at a minimum, offering user-friendly disclaimers, providing accessible documentation, and delivering deeper explanatory materials for those who need or request them.
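Layered transparency and content marking can be sketched in a few lines. The example below assumes a hypothetical `SystemCard` record and three audience layers of my own choosing; the label format is likewise an illustration, not a format required by any regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemCard:
    name: str
    purpose: str
    limitations: str
    evaluation_summary: str


# Assumed audience layers: each audience sees a superset of the previous one.
LAYERS = {
    "end_user": ("purpose",),
    "affected_individual": ("purpose", "limitations"),
    "auditor": ("purpose", "limitations", "evaluation_summary"),
}


def disclosure(card, audience):
    """Fields to disclose for an audience; unknown audiences get the end-user layer."""
    fields = LAYERS.get(audience, LAYERS["end_user"])
    return {name: getattr(card, name) for name in fields}


def label_output(text, card):
    """Prefix generated text with a visible AI-generated marker."""
    return f"[AI-generated by {card.name}] {text}"
```

Defaulting unknown audiences to the most basic layer keeps every request answered without leaking detailed technical material to audiences it was not written for.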

CO-2: Stakeholder Engagement and Feedback

Transparency is not just about broadcasting information; it also involves actively listening to and incorporating feedback from those impacted by AI systems. ISO 42001 (A.3.3, A.8.3, A.8.5, A.10.4) stresses the importance of continuous dialogue with users and communities, ensuring that organisations remain aware of real-world impacts and can adapt accordingly. ISO 27001 (4.2, A.6.4) similarly calls for stakeholder involvement in security-related decisions, while ISO 27701 (A.7.3.4, A.7.3.5, A.7.3.9) extends these requirements to privacy, mandating that feedback loops address data handling concerns. Under the EU AI Act (4.1, 26.7, 26.11), stakeholder engagement becomes critical for high-risk AI systems, where the consequences of AI decisions may affect individual rights and broader societal values. NIST AI RMF (Govern 5.1, Govern 5.2, Map 5.2, Measure 3.3, Measure 4.3) underscores how ongoing consultation and feedback can help refine AI systems over time, while SOC2 (CC2.2) highlights the need to document stakeholder interactions, ensuring that input is not only collected but also integrated into system improvements. In practice, this might include user surveys, open forums, or collaborative design sessions, all with formal processes for tracking and acting on stakeholder suggestions and concerns.

Create Your Own Visual Control Maps

To help you implement these concepts in your organisation, I've created a GitHub repository with tools that let you generate your own AI governance control maps and chord diagrams. The repository includes the complete mapping of all 44 master controls to major frameworks, and Python code to generate your own custom chord diagrams.

Access the tools at:

Doing AI Governance - Code & Resources

These resources are provided under an open-source licence and allow you to visualise relationships between controls and frameworks, identify gaps in your governance approach, and create documentation tailored to your own organisation's unique AI governance needs. I hope you enjoy using it and welcome any feedback or contributions to its improvement.
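To give a feel for the kind of data behind a control map, here is a minimal sketch of a control-to-framework mapping and two things you can derive from it: the weighted links a chord diagram would draw, and the coverage gaps per control. The dictionary layout and function names are assumptions for illustration and may not match the repository's actual file format or code. The clause lists are a subset of those cited for AA-3 and TP-2 above.

```python
from collections import Counter

# Hypothetical layout: control ID -> framework -> cited clauses.
# The structure is an assumption, not the repository's file format.
MAPPING = {
    "AA-3": {
        "ISO 42001": ["9.1", "9.3"],
        "ISO 27701": ["7.2.5"],
        "NIST AI RMF": ["Measure 2.6", "Measure 2.7"],
        "SOC2": ["CC7.1"],
    },
    "TP-2": {
        "ISO 42001": ["A.10.3"],
        "ISO 27001": ["A.15.1", "A.15.2"],
        "EU AI Act": ["25.4"],
        "SOC2": ["CC9.2"],
    },
}


def chord_links(mapping):
    """Flatten the mapping into (control, framework, weight) links.

    The weight is the number of cited clauses, one plausible choice
    for ribbon width in a chord diagram.
    """
    return [
        (control, framework, len(clauses))
        for control, frameworks in sorted(mapping.items())
        for framework, clauses in sorted(frameworks.items())
    ]


def coverage_gaps(mapping, frameworks):
    """List, per control, the frameworks with no mapped clause."""
    return {
        control: [f for f in frameworks if f not in mapped]
        for control, mapped in mapping.items()
    }


def framework_totals(mapping):
    """Count mapped clauses per framework across all controls."""
    totals = Counter()
    for frameworks in mapping.values():
        for framework, clauses in frameworks.items():
            totals[framework] += len(clauses)
    return totals
```

The output of `chord_links(MAPPING)` is the sort of edge list most plotting libraries accept for chord or Sankey diagrams, while `coverage_gaps` gives a quick text answer to "which frameworks does this control not yet address?"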

That’s it - 12 master control domains, 44 controls. As we conclude our tour of the control domains for AI governance, I’ve laid out a comprehensive framework that transforms abstract principles into practical controls. From executive accountability and risk management through to transparency and third-party oversight, these domains provide a structured approach to responsible AI development that aligns with major frameworks and regulations while remaining practical to implement.

But knowing what controls you need is just the beginning. The next challenge lies in translating these controls into organisational policies, procedures, and practices that work in your specific context. That's why my upcoming articles will focus on building the core governance documents that bring these controls to life:

First, I'll provide detailed guidance on creating an effective AI Policy – the foundational document that establishes your organisation's approach to responsible AI development and sets clear expectations for all stakeholders. I’ll go through a template policy that covers just the essentials necessary for real-world AI governance - balancing innovation with responsibility, addressing regulatory requirements, and providing clear guidance for your teams.

Next, we'll tackle the AI Risk Management Framework – the structured approach that helps you systematically identify, assess, and mitigate risks throughout the AI lifecycle. I'll share practical approaches that can help you identify and manage real-world risks. This is a big topic so I have a few articles in mind, covering a framework for assessment, methods for risk identification and selection of appropriate risk treatments.

Then finally, we'll explore Model and Data Lifecycle Management – with guidance on establishing governance checkpoints, documentation requirements, and quality controls that ensure your AI systems remain aligned with your governance principles from initial data collection through model retirement.

I hope you’ll find that these upcoming articles go beyond theory into practical implementation, building governance documents and mechanisms that work in real-world settings. I encourage you to subscribe to ensure you receive these resources as they become available.

[1] https://fpf.org/wp-content/uploads/2023/11/OT-FPF-comformity-assessments-ebook_update2.pdf
